Deepfake Detection Technology

The synthetic media revolution has reached an inflection point. Deepfake technology enables creative applications in entertainment and education, but it also lets bad actors weaponize misinformation at unprecedented scale. Financial institutions report fraud losses exceeding $400 million annually from deepfake attacks, with sophisticated voice clones used to authorize fraudulent wire transfers and AI-generated videos used to manipulate stock prices. Mastercard research reveals that 37% of businesses have been targeted by identity fraud fueled by deepfakes, while deepfake incidents increased 900% year-over-year globally in 2024.

The authentication crisis extends beyond corporate boardrooms. Political campaigns face fabricated candidate statements spreading across social media before fact-checkers can respond. Journalists struggle to verify source legitimacy when audio clips and video evidence can be synthetically generated in minutes. Law enforcement encounters falsified evidence challenging judicial proceedings. The erosion of trust in digital media represents more than a technical challenge; it threatens the foundational assumption that seeing and hearing constitute believing.

Detection technology has evolved from academic curiosity to mission-critical infrastructure. Yet research from Columbia Journalism Review demonstrates that most automated detection tools struggle with generalization beyond their training data, achieving impressive laboratory results while failing against real-world manipulations. The arms race between generation and detection technologies creates a moving target where today’s cutting-edge detector becomes tomorrow’s antiquated system.

This comprehensive guide examines the complete landscape of deepfake detection technology in 2025. You’ll discover how advanced neural networks identify synthetic media artifacts, evaluate enterprise-ready detection solutions, understand the limitations threatening reliability, and implement defense strategies that actually work when sophisticated adversaries deploy state-of-the-art generation techniques. Whether you’re securing a financial institution, protecting brand reputation, or implementing content moderation systems, you’ll gain the knowledge needed to deploy effective deepfake detection in production environments.

Understanding Deepfake Technology: How Synthesis Works

Effective detection requires understanding generation techniques. Deepfakes leverage sophisticated machine learning models that learn to mimic human appearances, voices, and behaviors with remarkable fidelity. The underlying technology has progressed from crude face-swapping experiments to photorealistic synthesis indistinguishable from authentic recordings to casual observers.

Generative Adversarial Networks: The Foundation

Generative Adversarial Networks (GANs) power most contemporary deepfake generation. The GAN architecture consists of two neural networks engaged in an adversarial game. The generator network creates synthetic content, attempting to produce outputs indistinguishable from real examples. The discriminator network evaluates content, classifying samples as authentic or fabricated. Through iterative training, the generator improves its synthesis capabilities while the discriminator sharpens its detection abilities.

This adversarial training process creates a natural arms race within the model itself. As the discriminator becomes more skilled at identifying fake content, the generator must produce increasingly realistic outputs to deceive it. After thousands or millions of training iterations, the generator learns to create synthetic media that successfully fools not only its paired discriminator but often human observers as well.
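The adversarial game is usually written as a pair of loss functions. The following is a minimal illustration, assuming the discriminator already outputs probabilities. `discriminator_loss` and `generator_loss` are hypothetical helper names, and the generator uses the common non-saturating form rather than the original minimax term.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy for the discriminator.

    d_real: D(x) probabilities on real samples (target label 1).
    d_fake: D(G(z)) probabilities on generated samples (target label 0).
    """
    eps = 1e-12  # avoid log(0)
    real_term = -sum(math.log(p + eps) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p + eps) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Non-saturating generator loss: the generator wants D(G(z)) close to 1."""
    eps = 1e-12
    return -sum(math.log(p + eps) for p in d_fake) / len(d_fake)

# A discriminator that separates real from fake has a low loss, which leaves
# the generator with a high loss; a fooled discriminator is stuck at 2*ln(2).
confident = discriminator_loss(d_real=[0.95, 0.9], d_fake=[0.05, 0.1])
fooled = discriminator_loss(d_real=[0.5, 0.5], d_fake=[0.5, 0.5])
print(f"D loss when confident: {confident:.3f}, when fooled: {fooled:.3f}")
print(f"G loss against the confident D: {generator_loss([0.05, 0.1]):.3f}")
```

Each training step alternates between lowering the first loss (updating the discriminator) and lowering the second (updating the generator). This alternation is what drives the arms race described above.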

StyleGAN and its subsequent iterations represent the state of the art in GAN-based face generation. These architectures introduce style-based generation techniques that separate high-level attributes like pose and identity from fine-grained details like texture and color. This separation enables unprecedented control over synthetic face generation, allowing manipulation of specific features while maintaining overall realism. StyleGAN3 and StyleGAN-XL push fidelity even further, generating high-resolution faces with minimal artifacts.

Face-swapping applications typically build on encoder-decoder (autoencoder) architectures, often augmented with GAN-style adversarial losses, optimized for transferring source faces onto target videos while preserving original expressions and head movements. DeepFaceLab, FaceSwap-GAN, and similar tools have democratized face-swapping technology, making capabilities that once required specialized expertise accessible to users with consumer-grade hardware and minimal technical knowledge.

Diffusion Models: The New Generation Frontier

Diffusion models represent the latest evolution in generative technology, fundamentally changing the synthesis landscape. Unlike GANs’ adversarial training, diffusion models learn through a sequential denoising process. During training, noise is gradually added to real images until they become indistinguishable from pure random noise, and the model learns to reverse this process. At generation time, it progressively denoises random inputs into realistic synthetic content.
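In DDPM-style diffusion models, the noising half of this process has a closed form. This lets a training example at any noise level t be sampled directly from a clean image. The sketch below assumes the linear beta schedule from the original DDPM paper (1e-4 to 0.02 over 1,000 steps). The function names are hypothetical, and the denoising network itself is omitted.

```python
import numpy as np

def linear_beta_schedule(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances beta_t (linear schedule, as in DDPM)."""
    return np.linspace(beta_start, beta_end, num_steps)

def forward_noise(x0, t, alpha_bar, rng):
    """Sample x_t from q(x_t | x_0) in one shot via the closed form
    x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * eps.

    A denoising network would be trained to predict eps from (x_t, t);
    that network is not shown here.
    """
    eps = rng.standard_normal(x0.shape)
    x_t = np.sqrt(alpha_bar[t]) * x0 + np.sqrt(1.0 - alpha_bar[t]) * eps
    return x_t, eps

betas = linear_beta_schedule()
alpha_bar = np.cumprod(1.0 - betas)  # share of the original signal kept after t steps

rng = np.random.default_rng(0)
image = rng.uniform(-1.0, 1.0, size=(32, 32))  # stand-in for a normalized image
for t in (0, 250, 999):
    x_t, _ = forward_noise(image, t, alpha_bar, rng)
    corr = np.corrcoef(image.ravel(), x_t.ravel())[0, 1]
    print(f"t={t:4d}  alpha_bar={alpha_bar[t]:.5f}  corr(x_0, x_t)={corr:.3f}")
```

The correlation with the clean image falls toward zero as t approaches the final step. Generation runs the learned reverse of this path.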

This approach offers several advantages over traditional GANs. Training stability improves dramatically, largely avoiding the notorious mode collapse and convergence problems that plague GAN training. Sample quality and diversity increase, with diffusion models generating a wider variety of realistic outputs. Most significantly for detection, diffusion models produce synthetic content with different artifact patterns than GAN-generated media, potentially rendering traditional detection approaches less effective.

Stable Diffusion, DALL-E 3, and Midjourney demonstrate diffusion models’ capabilities in image generation. These systems create photorealistic images from text descriptions, enabling synthesis of arbitrary scenes and subjects. While primarily marketed for creative applications, the same technologies enable malicious actors to generate fabricated evidence, create non-consensual intimate imagery, and produce misleading visual content at scale.

Research published in 2025 confirms that diffusion-based deepfakes present unique detection challenges. Traditional CNN-based detectors trained primarily on GAN artifacts experience significant performance degradation when encountering diffusion-generated content. The subtle differences in how these models synthesize images create blind spots in detection systems not specifically designed to handle them.

Audio Synthesis: Voice Cloning and Speech Generation

Audio deepfakes pose distinct threats, particularly in scenarios where visual verification isn’t possible. Voice cloning technologies can now replicate individual speech patterns from remarkably short audio samples. Some systems claim to generate convincing voice clones from as little as three seconds of source audio, though quality generally improves with more training data.

Text-to-speech synthesis has evolved beyond robotic-sounding output to natural, expressive speech that captures emotional nuance and individual speaking styles. Neural text-to-speech systems like Microsoft Azure’s Neural TTS, Google’s WaveNet, and open-source alternatives produce audio nearly indistinguishable from human recordings in many contexts.

Voice conversion techniques transform one speaker’s voice to sound like another while preserving linguistic content and prosodic features. These technologies enable sophisticated audio forgeries where attackers record their own speech, then convert it to match a target victim’s voice. The resulting audio contains genuine speech patterns and natural variation, making detection significantly more challenging than with pure text-to-speech synthesis.

Pindrop Security research demonstrates that audio deepfakes achieve 99% accuracy in mimicking target voices under controlled conditions. However, subtle artifacts often remain detectable through specialized analysis techniques. Unnatural intonation patterns, irregular breathing sounds, inconsistent background noise, and spectral anomalies provide detection signals when present. The challenge lies in reliably identifying these artifacts when sophisticated attackers actively attempt to conceal them.

Detection Methodologies: From Theory to Practice

Multiple detection approaches have emerged, each with distinct strengths and limitations. Understanding these methodologies enables informed tool selection and realistic expectations about detection capabilities in various deployment scenarios.

Convolutional Neural Network Approaches

Convolutional Neural Networks form the backbone of most contemporary deepfake detection systems. These architectures excel at automatically learning hierarchical visual features from training data, identifying patterns that distinguish authentic from manipulated content without requiring manual feature engineering.

CNN-based detectors typically extract individual frames from videos, process each frame independently through the neural network, then aggregate frame-level predictions into a final video-level classification. This spatial analysis approach focuses on visual inconsistencies within single frames rather than temporal coherence across frame sequences.
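The text does not fix how frame-level predictions are aggregated. Below is a minimal sketch of three common options, assuming each frame already has a fake probability from a CNN. `aggregate_video_score` is a hypothetical name.

```python
from statistics import mean

def aggregate_video_score(frame_scores, method="mean", top_k=5):
    """Combine per-frame fake probabilities into one video-level score.

    "mean" averages all frames, "max" takes the single most suspicious frame,
    and "topk" averages the k most suspicious frames, a compromise that does
    not dilute a short manipulated segment but tolerates one noisy frame.
    """
    if not frame_scores:
        raise ValueError("need at least one frame score")
    if method == "mean":
        return mean(frame_scores)
    if method == "max":
        return max(frame_scores)
    if method == "topk":
        ranked = sorted(frame_scores, reverse=True)
        return mean(ranked[:top_k])
    raise ValueError(f"unknown method: {method}")

# A mostly clean clip with a short manipulated segment.
scores = [0.1, 0.15, 0.1, 0.9, 0.85, 0.2, 0.1, 0.95]
for m in ("mean", "max", "topk"):
    print(m, round(aggregate_video_score(scores, m, top_k=3), 3))
```

Plain averaging can hide a brief face swap inside a long clip, which is one reason max or top-k pooling is often preferred.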

Research published in Neural Computing and Applications demonstrates that specialized CNN architectures achieve strong performance on benchmark datasets. Xception networks, originally designed for image classification, prove particularly effective for deepfake detection. The Xception architecture is built from depthwise separable convolutions, which factor a standard convolution into a per-channel spatial filter followed by a 1×1 pointwise convolution that mixes channels. This factorization sharply reduces parameters and computation, letting the network stack many layers that capture both coarse structural abnormalities and fine-grained textural artifacts.
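The savings from depthwise separable convolutions are easy to quantify. The arithmetic below counts weights only (biases ignored) for one illustrative 3×3 layer with 256 input and 256 output channels. These layer sizes are an example, not Xception's exact configuration.

```python
def standard_conv_params(k, c_in, c_out):
    """Weights in a k x k convolution mapping c_in -> c_out channels (no bias)."""
    return k * k * c_in * c_out

def depthwise_separable_params(k, c_in, c_out):
    """A k x k depthwise filter per input channel, then a 1 x 1 pointwise mix."""
    depthwise = k * k * c_in
    pointwise = c_in * c_out
    return depthwise + pointwise

k, c_in, c_out = 3, 256, 256
std = standard_conv_params(k, c_in, c_out)        # 589,824 weights
sep = depthwise_separable_params(k, c_in, c_out)  # 2,304 + 65,536 = 67,840 weights
print(std, sep, round(std / sep, 1))
```

Roughly an 8.7x reduction for this layer leaves a budget for a deeper network at similar cost.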

ResNet architectures provide another popular foundation for detection systems. ResNet’s residual connections enable training of very deep networks without performance degradation, allowing detectors to learn complex feature hierarchies that capture subtle manipulation artifacts. ResNet-50 and ResNet-101 variants frequently appear in academic research and commercial detection products.

VGG networks, despite being older architectures, continue finding applications in deepfake detection. Their straightforward design philosophy and strong transfer learning characteristics make them attractive choices, particularly when training data is limited. VGG16 is parameter-heavy and comparatively slow at inference, so it fits best in offline or batch settings where latency matters less than accuracy.

The primary limitation of pure CNN approaches centers on their focus on spatial features within individual frames. Sophisticated deepfakes may exhibit minimal per-frame artifacts while containing temporal inconsistencies across frame sequences. A face might appear realistic in any single frame, yet display unnatural motion patterns or inconsistent lighting as the video progresses. CNN-only detectors miss these temporal signals entirely.

Recurrent Neural Network and Temporal Analysis

Recurrent Neural Networks address the temporal blind spot of CNN-only approaches by explicitly modeling frame sequences. LSTM (Long Short-Term Memory) networks, a sophisticated RNN variant, excel at capturing long-range dependencies in sequential data, making them well-suited for identifying temporal anomalies in video deepfakes.

Hybrid CNN-LSTM architectures are a widely used design for video deepfake detection. These systems employ CNNs to extract spatial features from individual frames, then feed these feature sequences into LSTM networks that model temporal patterns. Research from the International Journal of Scientific Research in Science and Technology shows this hybrid approach achieves superior performance compared to either component alone.

The CNN component captures spatial artifacts like unnatural facial textures, inconsistent lighting patterns, and boundary artifacts around manipulated regions. The LSTM component identifies temporal inconsistencies such as jittery motion, frame-to-frame discontinuities, and unnatural eye movements or blinking patterns. Together, they provide comprehensive analysis across both spatial and temporal dimensions.
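The following is a minimal sketch of the temporal half of such a hybrid. It assumes per-frame CNN embeddings are already available; random vectors stand in for them here. The LSTM weights are random and untrained, so the output score is meaningless. The code only shows how a frame sequence flows through LSTM gates into a single clip-level probability. `TinyLSTMClassifier` is a hypothetical name.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

class TinyLSTMClassifier:
    """Untrained LSTM over per-frame CNN features, followed by a logistic head."""

    def __init__(self, feature_dim, hidden_dim, seed=0):
        rng = np.random.default_rng(seed)
        scale = 1.0 / np.sqrt(feature_dim + hidden_dim)
        # One stacked weight matrix for the input, forget, candidate and output gates.
        self.W = rng.normal(0.0, scale, size=(4 * hidden_dim, feature_dim + hidden_dim))
        self.b = np.zeros(4 * hidden_dim)
        self.w_out = rng.normal(0.0, scale, size=hidden_dim)
        self.hidden_dim = hidden_dim

    def forward(self, frame_features):
        """frame_features: array of shape (num_frames, feature_dim)."""
        H = self.hidden_dim
        h = np.zeros(H)
        c = np.zeros(H)
        for x_t in frame_features:
            z = self.W @ np.concatenate([x_t, h]) + self.b
            i = sigmoid(z[:H])              # input gate
            f = sigmoid(z[H:2 * H])         # forget gate
            g = np.tanh(z[2 * H:3 * H])     # candidate cell update
            o = sigmoid(z[3 * H:])          # output gate
            c = f * c + i * g               # cell state carries context across frames
            h = o * np.tanh(c)
        # The final hidden state summarizes the whole clip.
        return float(sigmoid(self.w_out @ h))

# Stand-in for pooled 128-d CNN embeddings of 16 frames.
features = np.random.default_rng(1).normal(size=(16, 128))
model = TinyLSTMClassifier(feature_dim=128, hidden_dim=32)
print(model.forward(features))  # untrained, so the value carries no meaning
```

In a real system, both the CNN and the LSTM would be trained jointly or in stages on labeled clips.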

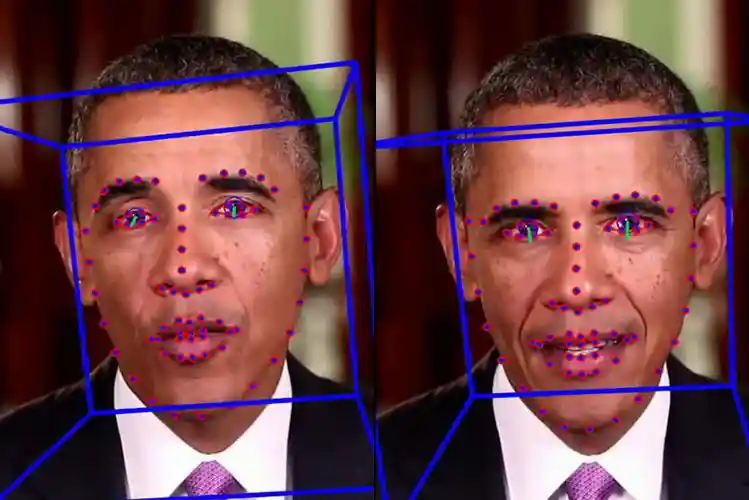

Temporal analysis proves especially valuable for detecting sophisticated face-swapping attacks. While individual swapped faces may appear realistic, maintaining perfect consistency across all frames remains challenging for synthesis models. Subtle variations in facial landmarks between adjacent frames, inconsistent head pose estimation, or momentary failures in expression transfer create detection signals invisible to frame-by-frame analysis.
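One simple way to surface such frame-to-frame discontinuities is to track landmark displacement between adjacent frames and flag spikes relative to the clip's typical motion. This heuristic is an illustration, not a method described in the source. It assumes landmarks come from some facial landmark detector in a consistent order, and a production version would normalize for face scale and head motion. The function names are hypothetical.

```python
import math
from statistics import median

def landmark_jitter(frames):
    """Mean per-landmark displacement for each pair of consecutive frames.

    frames: list of frames, each a list of (x, y) landmarks in a fixed order.
    """
    jitter = []
    for prev, curr in zip(frames, frames[1:]):
        dists = [math.dist(p, q) for p, q in zip(prev, curr)]
        jitter.append(sum(dists) / len(dists))
    return jitter

def flag_spikes(jitter, factor=3.0):
    """Indices of transitions whose jitter exceeds `factor` times the median."""
    baseline = median(jitter)
    return [i for i, j in enumerate(jitter) if j > factor * baseline]

# Smooth head motion (0.1 px per frame) with a one-frame glitch at frame 5.
base = [(30.0, 40.0), (50.0, 40.0), (40.0, 55.0)]
frames = [[(x + 0.1 * t, y) for x, y in base] for t in range(10)]
frames[5] = [(x + 2.0, y) for x, y in frames[5]]
jitter = landmark_jitter(frames)
print([round(j, 2) for j in jitter])
print(flag_spikes(jitter))  # [4, 5]: the transitions into and out of frame 5
```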

However, temporal approaches face their own limitations. They require processing multiple frames, increasing computational costs compared to single-frame CNN detectors. Real-time application becomes more challenging when analyzing sequences rather than individual frames. Additionally, heavily compressed videos with low frame rates may lack sufficient temporal resolution for effective sequence-based detection.

Transformer-Based Detection Models

Transformer architectures, originally developed for natural language processing, have recently revolutionized computer vision tasks including deepfake detection. Vision Transformers (ViTs) process images as sequences of patches, using self-attention mechanisms to capture both local and global dependencies.
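Turning an image into a patch sequence is a reshaping step. The sketch below uses the common ViT setting of 16×16 patches on a 224×224 RGB image. It stops before the learned linear embedding and position encodings, which are omitted. `image_to_patches` is a hypothetical name.

```python
import numpy as np

def image_to_patches(image, patch_size):
    """Split an (H, W, C) image into a sequence of flattened patches.

    Returns shape (num_patches, patch_size * patch_size * C), ordered row by
    row; a ViT would then project each row to its embedding dimension.
    """
    h, w, c = image.shape
    if h % patch_size or w % patch_size:
        raise ValueError("image size must be divisible by patch_size")
    p = patch_size
    patches = image.reshape(h // p, p, w // p, p, c)  # split both spatial axes
    patches = patches.transpose(0, 2, 1, 3, 4)        # (rows, cols, p, p, c)
    return patches.reshape(-1, p * p * c)

tokens = image_to_patches(np.zeros((224, 224, 3)), patch_size=16)
print(tokens.shape)  # (196, 768): a 14 x 14 grid of 16*16*3-value patches
```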

Convolutional Vision Transformers (CVTs) combine CNN feature extraction with transformer-based processing, leveraging the complementary strengths of both approaches. Studies demonstrate that CVT-based detectors achieve state-of-the-art performance on challenging benchmark datasets, particularly for detecting novel manipulation techniques not represented in training data.

The self-attention mechanism enables transformers to capture long-range dependencies more effectively than CNNs or RNNs. In the deepfake detection context, this means identifying relationships between distant image regions and detecting global inconsistencies that local convolution operations might miss. For example, inconsistent lighting across a face or incompatible shadows from different scene elements become more apparent through global attention patterns.
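The core operation is scaled dot-product attention. The single-head sketch below shows why attention is global: every output token is a weighted mix of all tokens. Random matrices stand in for the learned query, key, and value projections, and the sizes (196 tokens, 64 dimensions) are illustrative.

```python
import numpy as np

def scaled_dot_product_attention(Q, K, V):
    """softmax(Q K^T / sqrt(d)) V for a single attention head.

    Q, K, V: arrays of shape (num_tokens, d). Each row of the returned weight
    matrix spans all tokens, so one patch can attend to any other patch,
    however far apart, within a single layer.
    """
    d = Q.shape[-1]
    scores = Q @ K.T / np.sqrt(d)
    scores -= scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ V, weights

rng = np.random.default_rng(0)
tokens = rng.normal(size=(196, 64))  # e.g. 196 patch embeddings
# Learned projections W_q, W_k, W_v are replaced by random stand-ins here.
W_q, W_k, W_v = (rng.normal(size=(64, 64)) / 8.0 for _ in range(3))
out, attn = scaled_dot_product_attention(tokens @ W_q, tokens @ W_k, tokens @ W_v)
print(out.shape, attn.shape)  # (196, 64) (196, 196)
```

The 196×196 weight matrix is also where the quadratic cost in token count comes from, which relates to the computational drawbacks discussed below.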

Transformer-based models also demonstrate improved generalization capabilities, a critical requirement given the rapid evolution of synthesis techniques. Models that perform well only on specific generators or manipulation methods provide limited real-world utility. Transformers’ ability to learn more robust, transferable representations helps maintain detection accuracy when encountering novel deepfake types.

The primary drawbacks center on computational requirements and training data needs. Transformer models typically require more parameters than comparable CNNs, translating to higher inference costs and memory consumption. They also generally need larger training datasets to avoid overfitting, potentially limiting applicability in specialized domains with limited labeled examples.

Frequency Domain Analysis

Frequency domain detection represents an orthogonal approach, analyzing images in the Fourier or wavelet transform space rather than directly examining pixel values. This technique exploits the observation that synthesis artifacts often manifest more clearly in frequency representations than in the spatial domain.

GAN-generated images frequently exhibit characteristic frequency patterns resulting from the generation process. Periodic artifacts, spectral anomalies, and unnatural frequency distributions provide detection signals invisible to spatial analysis. Discrete Cosine Transform (DCT) coefficients, commonly used in image compression, can reveal manipulation artifacts that survive moderate JPEG compression.
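One commonly used spectral feature is the azimuthally averaged power spectrum. This is a 1-D profile of energy versus spatial frequency that a simple classifier can consume. The following is a minimal sketch: the Gaussian blob stands in for a smooth image, and the faint period-2 checkerboard stands in for upsampling artifacts. Both are synthetic assumptions, and this is a feature extractor rather than a complete detector.

```python
import numpy as np

def radial_power_spectrum(gray, num_bins=64):
    """Azimuthally averaged log power spectrum of a 2-D grayscale image.

    Returns num_bins values ordered from low to high spatial frequency.
    """
    spectrum = np.fft.fftshift(np.fft.fft2(gray))   # move DC to the center
    power = np.log1p(np.abs(spectrum) ** 2)
    h, w = gray.shape
    yy, xx = np.indices((h, w))
    r = np.hypot(yy - h / 2, xx - w / 2)             # distance from DC
    bins = np.minimum((r / r.max() * num_bins).astype(int), num_bins - 1)
    sums = np.bincount(bins.ravel(), weights=power.ravel(), minlength=num_bins)
    counts = np.bincount(bins.ravel(), minlength=num_bins)
    return sums / np.maximum(counts, 1)              # mean power per ring

# Smooth synthetic image: a Gaussian blob with almost no high-frequency energy.
yy, xx = np.indices((128, 128))
clean = 100.0 * np.exp(-((yy - 64) ** 2 + (xx - 64) ** 2) / (2 * 20.0 ** 2))
# The same image plus a faint checkerboard, a stand-in for upsampling artifacts.
artifact = clean + 5.0 * ((yy + xx) % 2)
diff = radial_power_spectrum(artifact) - radial_power_spectrum(clean)
print(diff.round(2)[-4:])  # excess energy appears in the highest-frequency ring
```

The checkerboard is barely visible in pixel space, but its energy concentrates in a single high-frequency spectral peak.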

Research on frequency-domain detection shows promise for detecting manipulations that leave minimal spatial traces. Face-swapping operations that carefully match textures and lighting in the spatial domain often introduce frequency-domain inconsistencies at manipulation boundaries. These frequency artifacts persist even when spatial traces are effectively hidden.

Frequency analysis also demonstrates robustness to certain evasion techniques. Adversaries attempting to conceal spatial artifacts through post-processing may inadvertently introduce new frequency-domain signatures. The dual-domain approach creates a more challenging evasion problem for attackers who must simultaneously fool both spatial and frequency-based detectors.

However, frequency methods face limitations including sensitivity to image compression and preprocessing. Heavy JPEG compression can mask or distort the frequency-domain artifacts that detection algorithms rely upon. Additionally, frequency-based approaches often work best in combination with spatial methods rather than as standalone detection systems.

Biological Signal Detection

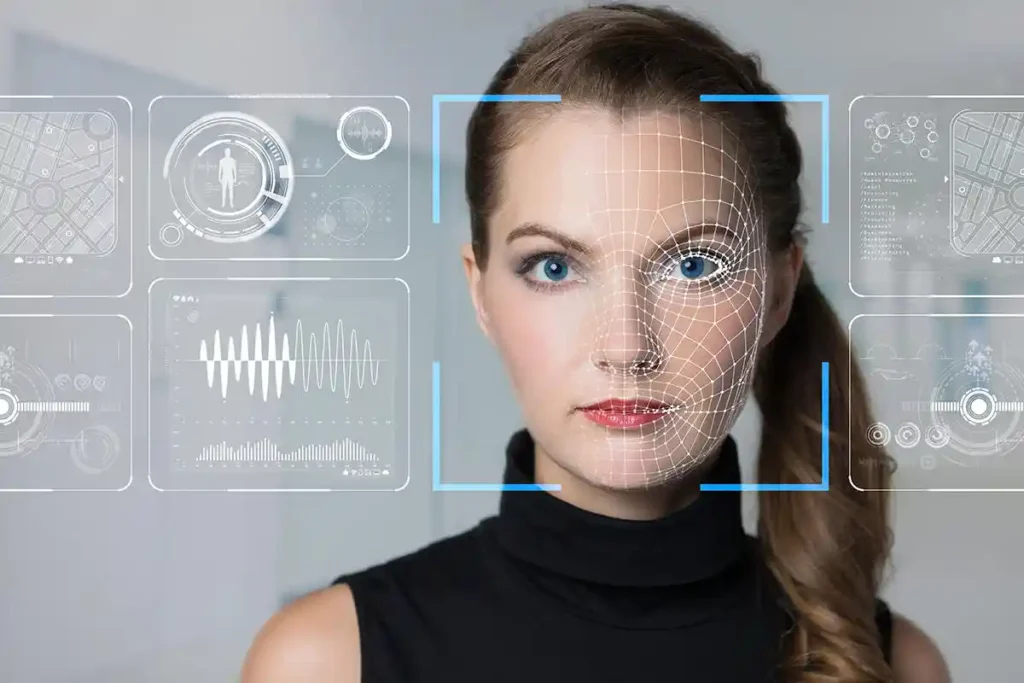

Biological signal-based detection represents a fascinating approach exploiting physiological phenomena difficult for synthesis models to perfectly replicate. These methods analyze subtle biological signals like blood flow patterns visible through photoplethysmography (PPG) analysis, eye movement characteristics, and natural blinking patterns.

Intel’s FakeCatcher technology pioneered commercial application of PPG-based detection. This technique measures subtle color changes in facial skin caused by blood flow, extracting heart rate signals from video. Deepfakes often fail to accurately replicate these periodic color variations, either omitting them entirely or introducing unnatural patterns that contradict physiological norms.
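FakeCatcher's own pipeline is proprietary. The sketch below only illustrates the general PPG idea under stated assumptions. The per-frame mean green intensity of a skin region is treated as a pulse signal. The dominant frequency in a plausible heart-rate band (assumed here to be 0.7 to 4 Hz) is read off its spectrum. A `peak_ratio` reports how clearly that peak stands out. The function name and band limits are illustrative choices.

```python
import numpy as np

def estimate_heart_rate(green_means, fps, low_hz=0.7, high_hz=4.0):
    """Estimate pulse rate (bpm) from per-frame mean green intensity of skin.

    Returns (bpm, peak_ratio): the dominant frequency inside the heart-rate
    band and the share of in-band power held by that peak. A weak or missing
    peak is one possible warning sign for synthetic video.
    """
    signal = np.asarray(green_means, dtype=float)
    signal = signal - signal.mean()                 # remove the DC offset
    spectrum = np.abs(np.fft.rfft(signal)) ** 2
    freqs = np.fft.rfftfreq(signal.size, d=1.0 / fps)
    band = (freqs >= low_hz) & (freqs <= high_hz)   # roughly 42-240 bpm
    band_power = spectrum[band]
    peak = np.argmax(band_power)
    peak_ratio = band_power[peak] / band_power.sum()
    return freqs[band][peak] * 60.0, peak_ratio

fps, seconds = 30, 10
t = np.arange(fps * seconds) / fps
rng = np.random.default_rng(0)
# Synthetic skin trace: a 1.2 Hz (72 bpm) pulse buried in sensor noise.
trace = 120.0 + 0.5 * np.sin(2 * np.pi * 1.2 * t) + 0.2 * rng.normal(size=t.size)
bpm, ratio = estimate_heart_rate(trace, fps)
print(round(bpm, 1), round(ratio, 2))
```

Real footage needs face tracking, motion compensation, and band-pass filtering before this step.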

Blink detection algorithms analyze the frequency, duration, and naturalness of eye blinks in video content. Real humans blink according to predictable patterns influenced by factors like speech, cognitive load, and environmental conditions. Deepfake videos frequently exhibit abnormal blinking behavior, either blinking too infrequently due to training data biases or producing unnaturally mechanical blink animations.
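A widely used building block for blink analysis is the eye aspect ratio (EAR). It is computed from six eye landmarks and drops toward zero when the lids close. The sketch below counts blinks as runs of consecutive low-EAR frames. The 0.2 threshold and the two-frame minimum are assumed values that would need tuning per camera and frame rate.

```python
import math

def eye_aspect_ratio(eye):
    """EAR from six landmarks p1..p6 (corners p1, p4; upper lid p2, p3; lower lid p5, p6)."""
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)

def count_blinks(ear_series, threshold=0.2, min_frames=2):
    """Count runs of at least `min_frames` consecutive frames with EAR below threshold."""
    blinks, run = 0, 0
    for ear in ear_series:
        if ear < threshold:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # clip ends mid-blink
        blinks += 1
    return blinks

def blinks_per_minute(ear_series, fps, **kwargs):
    minutes = len(ear_series) / fps / 60.0
    return count_blinks(ear_series, **kwargs) / minutes

# Synthetic EAR trace at 30 fps: eyes open (~0.3), one 3-frame blink,
# and a 1-frame dip that is too short to count.
ear_trace = [0.3] * 10 + [0.1] * 3 + [0.3] * 10 + [0.1] + [0.3] * 6
print(count_blinks(ear_trace))  # 1
print(round(blinks_per_minute(ear_trace, fps=30), 1))
```

The resulting blink rate and blink durations would then be compared against what is expected for the speaker and context.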

The advantages of biological signal detection include resilience to certain types of adversarial manipulation and strong generalization across different synthesis techniques. Since these approaches don’t rely on learning specific artifacts of particular generation methods, they potentially maintain effectiveness even against novel deepfake types.

Limitations arise from the need for sufficient video quality and length to extract reliable biological signals. Low-resolution or heavily compressed videos may lack the fidelity required for accurate PPG analysis. Short clips might not contain enough blink events for statistical analysis. Additionally, sophisticated attackers aware of these detection methods may attempt to inject synthetic biological signals into generated content, though doing so convincingly remains challenging.

Enterprise Detection Solutions: Commercial Tools and Platforms

Organizations requiring production-ready deepfake detection have multiple commercial options, each optimized for different use cases and deployment environments. Understanding the capabilities and limitations of available solutions enables informed procurement decisions aligned with specific organizational needs.

Reality Defender: Multi-Modal Enterprise Protection

Reality Defender positions itself as a comprehensive enterprise deepfake detection platform providing real-time analysis across multiple media types. The system employs an ensemble approach, combining multiple specialized models to achieve robust detection across diverse manipulation techniques.

The platform supports integration with existing communication infrastructure including contact centers, video conferencing systems, and content moderation pipelines. API and SDK access enables developers to embed detection capabilities directly into custom applications. Real-time processing capabilities allow for live detection during ongoing communications rather than only post-hoc analysis.

Reality Defender reports detecting manipulation across audio, video, and image modalities. The ensemble methodology provides resilience against individual model failures, with multiple independent detectors analyzing each piece of content. If consensus emerges that content is manipulated, the platform flags it for review or automated response based on configured policies.

The system has been deployed by financial institutions for fraud prevention, government agencies for disinformation monitoring, and media organizations for content verification. Reported detection accuracy exceeds 95% on standard benchmarks, though real-world performance depends heavily on the specific manipulation techniques encountered and content quality.

Pricing structures typically follow enterprise licensing models with costs based on volume, deployment mode, and support requirements. Organizations should expect significant implementation effort for proper integration with existing systems and processes.

Pindrop: Audio-Specific Voice Deepfake Detection

Pindrop Security specializes in audio deepfake detection with particular focus on contact center security and voice authentication scenarios. The platform analyzes unique characteristics of human speech including intonation patterns, rhythm, speech cadence, and acoustic properties to distinguish authentic voices from synthetic audio.

Pindrop Pulse liveness detection operates in real time during phone calls, generating liveness scores indicating the probability that a caller represents a real human rather than synthetic audio playback. This capability proves especially valuable for financial services organizations defending against voice-based social engineering attacks where fraudsters use cloned voices to impersonate account holders.

The system can analyze both live phone calls and uploaded audio files, supporting retrospective analysis of recorded content alongside real-time protection. Integration with existing contact center infrastructure enables deployment without requiring replacement of telephony systems or customer-facing applications.

Pindrop claims 99% accuracy for synthetic voice detection with analysis completing in under two seconds. The platform processes over 300 million authentication attempts annually across banking, insurance, and healthcare sectors. A deepfake warranty program backs the technology, though specific terms and conditions apply.

Limitations include focus exclusively on audio modality without video or image analysis capabilities. Organizations requiring comprehensive multi-modal detection must combine Pindrop with complementary solutions covering visual media.

Sensity: Brand and Executive Protection

Sensity provides deepfake detection with emphasis on brand protection and executive impersonation monitoring. The platform continuously scans social media, online forums, and other public channels for synthetic media impersonating protected individuals or misusing brand assets.

Real-time monitoring across over 9,000 sources enables rapid detection of emerging deepfake threats. Automated alerts notify security teams when synthetic content involving monitored entities appears online, enabling swift response before misinformation spreads widely.

The system enhances Know Your Customer (KYC) processes by detecting synthetic identities during onboarding and authentication workflows. Financial institutions and cryptocurrency exchanges use Sensity to prevent account creation using deepfaked identity documents or biometric spoofing during facial recognition verification.

Sensity integrates with content moderation platforms and security operation centers, providing deepfake intelligence alongside other threat indicators. This holistic approach enables correlation of deepfake incidents with broader attack campaigns or threat actor activity.

OpenAI Deepfake Detector

OpenAI’s deepfake detector specifically targets content generated using OpenAI’s DALL-E 3 model, achieving 98.8% accuracy for identifying these AI-generated images. This specialized approach demonstrates both the potential and limitations of narrowly-focused detection systems.

The detector excels at identifying images created by the specific model it’s trained to detect, leveraging deep understanding of DALL-E 3’s generation artifacts. However, performance degrades substantially against images created using different generation techniques. This limitation highlights a fundamental challenge in deepfake detection where model-specific detectors provide high accuracy within their domain but struggle with generalization.

OpenAI provides API access enabling integration of the detector into content moderation workflows, publishing platforms, and verification systems. Free tier access allows limited scanning volume for evaluation and small-scale deployment.

Hive AI Deepfake Detection

Hive AI offers moderation and detection services including deepfake identification capabilities. The platform provides probabilistic detection scores indicating confidence that content has been manipulated, allowing organizations to establish custom thresholds based on their risk tolerance.

Media organizations, governments, and financial institutions deploy Hive AI for content verification and misinformation detection. The platform analyzes both images and videos, identifying face replacements, lip-sync alterations, and other common manipulation techniques.

Integration capabilities include REST APIs for programmatic access and pre-built connectors for popular content management and moderation platforms. Real-time processing enables inline content screening during upload or submission workflows.

Attestiv: Digital Fingerprinting and Authentication

Attestiv takes a complementary approach focused on content authentication rather than purely detection-based analysis. The platform captures digital fingerprints and metadata at content creation time, enabling later verification that media hasn’t been tampered with since original capture.

This proactive authentication approach proves valuable for applications where content integrity verification matters more than general deepfake detection. Insurance claim processing, evidence collection, and compliance documentation benefit from being able to prove that submitted media represents authentic, unaltered recordings.

The system assigns Overall Suspicion Ratings combining multiple analysis factors into actionable scores. Content originators cryptographically sign media at capture time using mobile SDKs or integrated camera systems. Later verification checks these signatures and performs forensic analysis to detect any post-capture manipulation.
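Attestiv's actual signing scheme is not described here. The following sketch shows only the general capture-time fingerprinting idea, using Python's standard library. A SHA-256 hash fingerprints the media, and an HMAC authenticates the hash plus capture metadata. HMAC is a stand-in: a real deployment would more likely use an asymmetric signature so that verifiers never hold the signing secret. The key and metadata values are hypothetical.

```python
import hashlib
import hmac
import json

def sign_capture(media_bytes, metadata, device_key):
    """Fingerprint media plus capture metadata and authenticate the record.

    HMAC-SHA256 stands in for a real digital signature scheme.
    """
    record = {
        "sha256": hashlib.sha256(media_bytes).hexdigest(),
        "metadata": metadata,
    }
    payload = json.dumps(record, sort_keys=True).encode()
    tag = hmac.new(device_key, payload, hashlib.sha256).hexdigest()
    return record, tag

def verify_capture(media_bytes, record, tag, device_key):
    """True only if the record is authentic and the media hash still matches."""
    payload = json.dumps(record, sort_keys=True).encode()
    expected = hmac.new(device_key, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, tag):
        return False  # record or metadata was altered
    return hashlib.sha256(media_bytes).hexdigest() == record["sha256"]

key = b"hypothetical-device-secret"
photo = b"stand-in for raw image bytes"
record, tag = sign_capture(photo, {"captured_at": "2025-01-01T12:00:00Z", "device": "cam-01"}, key)
print(verify_capture(photo, record, tag, key))            # True
print(verify_capture(photo + b"edit", record, tag, key))  # False
```

Any post-capture change to the pixels or the metadata breaks verification. This is also why the approach cannot vouch for media captured outside the signing ecosystem.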

Limitations include requiring adoption by content creators and inability to verify media that wasn’t captured using Attestiv-enabled systems. The approach works best within controlled ecosystems rather than for verifying arbitrary internet content.

Critical Limitations and the Detection Gap

Understanding detection limitations proves as important as recognizing capabilities. Deploying detection technology with unrealistic expectations leads to false confidence and inadequate risk mitigation. Multiple fundamental challenges constrain what current detection systems can reliably achieve.

The Generalization Problem

Research from Columbia Journalism Review identifies generalization failure as the most critical limitation facing detection technology. Detection models trained on specific deepfake types or generators frequently fail when encountering novel synthesis techniques, even when the new manipulations are similar to training examples.

A meta-analysis of audio deepfake detection methods found that although most techniques achieve impressive results on in-domain tests, their performance drops sharply when dealing with out-of-domain datasets representing real-world scenarios. This occurs because detection algorithms learn specific artifact patterns present in training data. When asked to detect content generated using techniques not covered in their training, they struggle to yield accurate results.

Similar patterns appear across video and image detection research. A detector trained primarily on GAN-generated faces may perform poorly against diffusion model outputs, even though both create synthetic faces. The underlying artifacts differ enough that the detector’s learned patterns don’t transfer effectively.

This limitation creates a fundamental vulnerability: as generative AI software continues advancing and proliferating, it remains one step ahead of detection tools. Organizations must continuously retrain or update detection systems to maintain effectiveness against evolving threats. The detection gap widens whenever new generation techniques emerge faster than detectors can adapt.

Adversarial Evasion and Arms Race Dynamics

Research demonstrates that deepfake detection models remain highly vulnerable to adversarial attacks where malicious actors deliberately attempt to evade detection. Even slight perturbations to synthetic content can significantly reduce detection accuracy, with sophisticated attacks reducing performance by over 90% in some cases.

Fast Gradient Sign Method (FGSM) attacks and similar adversarial techniques exploit the differentiability of neural detectors, including CNN-based ones. They compute the gradient of the detector’s loss with respect to the input and nudge every pixel by a small, fixed step in the direction that increases that loss. These attacks introduce imperceptible modifications to synthetic media that cause detectors to misclassify manipulated content as authentic. The perturbations remain invisible to human observers yet effectively blind automated detection systems.
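The FGSM update is x_adv = x + ε · sign(∇ₓ loss). The sketch below applies it to a toy logistic-regression "detector" because its input gradient has a closed form. Against a real CNN, the gradient would come from backpropagation instead. The detector, its weights, and the sample are all synthetic assumptions.

```python
import numpy as np

def detector_prob(x, w, b):
    """Toy linear detector: probability that input x is fake."""
    return 1.0 / (1.0 + np.exp(-(w @ x + b)))

def fgsm(x, w, b, epsilon, y_true=1.0):
    """Untargeted FGSM: x_adv = x + epsilon * sign(grad_x loss(x, y_true)).

    For a logistic model with binary cross-entropy loss, the input gradient
    is (p - y_true) * w, so no autodiff is needed in this toy case.
    """
    p = detector_prob(x, w, b)
    grad = (p - y_true) * w
    x_adv = x + epsilon * np.sign(grad)
    return np.clip(x_adv, 0.0, 1.0)  # keep "pixels" in the valid range

rng = np.random.default_rng(0)
d = 1000                            # number of input features ("pixels")
w = rng.normal(size=d)
x = rng.uniform(0.1, 0.9, size=d)   # a synthetic sample labeled fake
b = 3.0 - w @ x                     # bias chosen so the clean logit is 3
x_adv = fgsm(x, w, b, epsilon=0.01)
print(f"clean: {detector_prob(x, w, b):.3f}  adversarial: {detector_prob(x_adv, w, b):.3f}")
print(f"max per-feature change: {np.max(np.abs(x_adv - x)):.3f}")
```

No single feature moves by more than 0.01, yet the small changes add up across a thousand dimensions and push the score from confidently fake to confidently real.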

Defensive techniques like adversarial training and input preprocessing improve robustness but often come at the cost of reduced accuracy on clean, unperturbed content. This trade-off creates a dilemma: hardening against adversarial attacks may degrade baseline detection performance, while optimizing for accuracy leaves systems vulnerable to evasion.

The arms race dynamic ensures no static detection solution remains effective indefinitely. As detection capabilities improve, synthesis techniques evolve to evade them. As defenses strengthen, attacks become more sophisticated. Organizations must view deepfake detection as an ongoing program requiring continuous investment rather than a one-time deployment achieving permanent protection.

Quality and Compression Challenges

Detection accuracy degrades substantially on low-resolution or heavily compressed content. Many real-world scenarios involve media shared through platforms that apply aggressive compression, reducing file sizes for efficient distribution but simultaneously degrading detection signals.

Social media platforms, messaging applications, and video conferencing systems commonly compress uploaded content, often repeatedly as users download and re-upload media. This compression destroys subtle artifacts that detection algorithms rely upon while potentially introducing compression artifacts that confuse detection systems.

Low-light videos, poor-quality audio recordings, and low-resolution images lack the fidelity required for reliable detection. Many biological signal-based approaches require high-quality video to extract PPG signals or analyze fine-grained facial features. Audio detection suffers when background noise, codec artifacts, or low bitrates obscure the acoustic properties that distinguish human from synthetic speech.

Organizations deploying detection must account for the quality of content they’ll analyze. Solutions performing well on high-quality benchmark datasets may prove inadequate when applied to compressed, degraded real-world media.

Production Deployment Strategies: Building Robust Detection Systems

Successful deployment extends beyond selecting detection technology. Organizations must design comprehensive systems addressing integration challenges, performance requirements, operational workflows, and risk management considerations that emerge when moving from proof-of-concept to production-scale operations.

Multi-Model Ensemble Approaches

Single-model detection systems create vulnerability to evasion techniques targeting specific architectural weaknesses. Ensemble approaches combining multiple independent detection models provide superior robustness by requiring adversaries to simultaneously evade all component detectors.

Effective ensembles incorporate diverse detection methodologies rather than multiple variants of similar approaches. Combining CNN-based spatial analysis with RNN temporal detection and frequency-domain analysis creates a system where weaknesses in one component are compensated by strengths in others. An adversary successfully evading spatial artifact detection may still be caught by temporal inconsistency analysis or frequency-domain signatures.

Voting mechanisms aggregate predictions from ensemble components into final classifications. Simple majority voting provides a straightforward approach where content is flagged as manipulated if most component models classify it as synthetic. Weighted voting assigns different importance to component predictions based on historical performance or confidence scores, giving more influence to models demonstrating higher reliability.
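
As a minimal sketch of the two voting schemes, the Python below assumes each component model returns a score between 0 and 1, where higher means more likely synthetic. The function names, the 0.5 threshold, and the weights are illustrative assumptions, not values from any particular product.

```python
from typing import Sequence


def majority_vote(scores: Sequence[float], threshold: float = 0.5) -> bool:
    """Flag as synthetic when more than half of the models score at or above threshold."""
    votes = sum(1 for s in scores if s >= threshold)
    return votes > len(scores) / 2


def weighted_vote(scores: Sequence[float], weights: Sequence[float]) -> float:
    """Combine scores into a weighted average; weights might reflect historical accuracy."""
    if len(scores) != len(weights) or not scores:
        raise ValueError("need one weight per score and at least one score")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(s * w for s, w in zip(scores, weights)) / total


# Hypothetical outputs from spatial (CNN), temporal (RNN), and frequency-domain models.
scores = [0.9, 0.4, 0.7]
print(majority_vote(scores))                          # True: two of three vote "synthetic"
print(weighted_vote(scores, [0.5, 0.3, 0.2]) >= 0.5)  # True: weighted score is about 0.71
```

Here the temporal model disagrees, but both schemes still flag the item; with weighted voting, a single highly trusted model can outweigh several weaker ones, which simple majority voting cannot express.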

Stacking approaches train a meta-classifier that learns to optimally combine component model predictions, potentially identifying patterns in how different models respond to various manipulation types. This sophisticated ensemble technique can achieve better performance than simple voting when sufficient labeled data exists for training the meta-classifier.
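
A stacking meta-classifier can be as simple as logistic regression over the component scores. The sketch below fits one with plain batch gradient descent so it runs without external libraries; the toy training rows, learning rate, and epoch count are assumptions for illustration, and a production system would more likely use an established ML library and a properly held-out training set.

```python
import math
from typing import List, Sequence


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def train_meta_classifier(
    X: List[Sequence[float]], y: List[int], lr: float = 0.5, epochs: int = 2000
) -> List[float]:
    """Fit logistic-regression weights over component scores; the last weight is the bias."""
    n = len(X[0])
    w = [0.0] * (n + 1)
    for _ in range(epochs):
        grad = [0.0] * (n + 1)
        for xi, yi in zip(X, y):
            z = sum(wj * xj for wj, xj in zip(w, xi)) + w[-1]
            err = sigmoid(z) - yi  # gradient of the log-loss with respect to z
            for j, xj in enumerate(xi):
                grad[j] += err * xj
            grad[-1] += err
        w = [wj - lr * gj / len(X) for wj, gj in zip(w, grad)]
    return w


def meta_predict(w: Sequence[float], scores: Sequence[float]) -> float:
    """Probability that the media is synthetic, given the component model scores."""
    return sigmoid(sum(wj * sj for wj, sj in zip(w, scores)) + w[-1])


# Toy labeled rows: [spatial, temporal, frequency] scores; 1 = synthetic, 0 = authentic.
X = [[0.9, 0.8, 0.7], [0.8, 0.3, 0.9], [0.7, 0.9, 0.6],
     [0.2, 0.1, 0.3], [0.3, 0.2, 0.1], [0.1, 0.4, 0.2]]
y = [1, 1, 1, 0, 0, 0]
weights = train_meta_classifier(X, y)
```

Unlike hand-set voting weights, the learned weights reflect how informative each component model was on the labeled data, which is why stacking depends on having enough of it.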

The primary trade-off involves computational costs and latency. Running multiple models multiplies processing requirements compared to single-model detection. Organizations must balance improved accuracy and robustness against infrastructure costs and response time requirements. Real-time applications may necessitate compromises, perhaps running lightweight models for initial screening and reserving expensive ensemble analysis for flagged content requiring high-confidence verification.

Threshold Configuration and Risk Calibration

Detection models output probability scores rather than binary classifications. Organizations must establish thresholds determining when content is flagged as manipulated, blocked from distribution, or escalated for manual review. Threshold selection profoundly impacts operational characteristics and should align with specific use case requirements and risk tolerance.

Conservative thresholds flag only content with very high manipulation probability, minimizing false positives at the cost of missing some actual deepfakes. This approach suits scenarios where false positives create significant costs or user friction. Content platforms might choose conservative thresholds to avoid frustrating legitimate users with incorrect removal or restrictions.

Aggressive thresholds catch more manipulated content but generate increased false positives, flagging some authentic media as synthetic. This trade-off may be acceptable in high-security contexts where missing deepfakes poses greater risk than occasionally challenging authentic content. Financial institutions defending against fraud might prefer aggressive detection even if it sometimes questions legitimate transactions.
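
To make the trade-off concrete, the sketch below picks a threshold from labeled validation scores: it returns the lowest threshold whose false positive rate stays within a target, which maximizes detection under that constraint. The ten scores are made-up validation data, and the two targets (0% and 20% false positives) merely stand in for a conservative and an aggressive policy.

```python
from typing import List, Tuple


def rates_at_threshold(scores: List[float], labels: List[int], threshold: float) -> Tuple[float, float]:
    """Return (false_positive_rate, true_positive_rate); label 1 means synthetic."""
    fp = sum(1 for s, lab in zip(scores, labels) if s >= threshold and lab == 0)
    tp = sum(1 for s, lab in zip(scores, labels) if s >= threshold and lab == 1)
    return fp / labels.count(0), tp / labels.count(1)


def pick_threshold(scores: List[float], labels: List[int], max_fpr: float) -> float:
    """Lowest observed threshold whose false positive rate is at most max_fpr."""
    for t in sorted(set(scores)):  # FPR can only fall as the threshold rises
        fpr, _ = rates_at_threshold(scores, labels, t)
        if fpr <= max_fpr:
            return t
    return float("inf")  # no observed threshold qualifies: flag nothing


scores = [0.05, 0.20, 0.35, 0.55, 0.62, 0.70, 0.80, 0.91, 0.95, 0.99]
labels = [0, 0, 0, 1, 0, 1, 0, 1, 1, 1]

conservative = pick_threshold(scores, labels, max_fpr=0.0)  # 0.91: catches 3 of 5 fakes
aggressive = pick_threshold(scores, labels, max_fpr=0.2)    # 0.70: catches 4 of 5, 1 false alarm
```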

Adaptive thresholding adjusts detection sensitivity based on contextual factors. Higher-risk scenarios like large financial transactions or identity verification workflows might employ aggressive thresholds, while lower-stakes contexts like social media comments use more permissive settings. Time-based adaptation can increase sensitivity during elevated threat periods like election cycles or corporate earnings announcements.
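
One way to express adaptive thresholding is a small policy function that starts from a per-workflow base threshold and lowers it (making detection more aggressive) for high-value transactions or declared elevated-threat periods. The workflow names, base values, the 100,000 amount cutoff, and the adjustment sizes below are hypothetical placeholders that each organization would calibrate.

```python
from dataclasses import dataclass

# Hypothetical base thresholds: lower values flag more content (more aggressive).
BASE_THRESHOLDS = {
    "identity_verification": 0.5,
    "wire_transfer": 0.6,
    "social_comment": 0.9,
}


@dataclass
class RequestContext:
    workflow: str
    amount: float = 0.0            # transaction value, if the workflow has one
    elevated_threat: bool = False  # e.g. an election cycle or earnings window


def threshold_for(ctx: RequestContext, default: float = 0.8) -> float:
    """Return the manipulation-score threshold to apply for this request."""
    t = BASE_THRESHOLDS.get(ctx.workflow, default)
    if ctx.amount >= 100_000:
        t -= 0.1   # large transfers get stricter scrutiny
    if ctx.elevated_threat:
        t -= 0.05  # temporary sensitivity boost during high-risk periods
    return max(t, 0.05)  # never drop to a threshold that flags everything


def is_flagged(score: float, ctx: RequestContext) -> bool:
    return score >= threshold_for(ctx)
```

With these placeholder values, a score of 0.7 passes on a social comment (threshold 0.9) but is flagged on a 250,000 wire transfer during an elevated-threat window (threshold of about 0.45).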

Calibration requires continuous monitoring of false positive and false negative rates in production. Organizations should establish feedback loops capturing ground truth about flagged content, enabling measurement of actual detection performance and threshold refinement based on observed outcomes rather than theoretical estimates.

Integration Architecture Patterns

Detection systems must integrate with existing infrastructure and workflows. Multiple architectural patterns address different deployment contexts and organizational requirements.

Inline Detection analyzes content in real time during upload, submission, or transmission workflows. This approach prevents distribution of synthetic content by blocking or quarantining manipulated media before it reaches end users. Financial platforms processing identity verification documents might employ inline detection to reject deepfaked credentials immediately rather than allowing them into downstream systems.

Inline deployment requires careful attention to latency and availability. Detection processing must complete quickly enough to avoid unacceptable user experience degradation. System failures or temporary detector outages must be handled gracefully, either through failover to backup detection services or fallback to permissive policies that allow content through when detection is unavailable.
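
The failover and fallback behavior described above can be sketched as follows. The sketch assumes detectors are plain callables returning a score, enforces a latency budget with a thread-pool timeout, tries backup detectors in order when the primary fails, and then applies a configurable fallback: `fail_open=True` matches the permissive policy in the text, while `fail_open=False` is shown as an alternative that holds content instead. All names and the 0.5-second budget are assumptions.

```python
import concurrent.futures as cf
from typing import Callable, Optional, Sequence

Detector = Callable[[bytes], float]  # returns probability that media is synthetic

_POOL = cf.ThreadPoolExecutor(max_workers=8)


def score_within_budget(detector: Detector, media: bytes, timeout_s: float) -> Optional[float]:
    """Run one detector; return None on timeout or error.

    A timed-out call keeps running in the pool; only the caller stops waiting.
    """
    future = _POOL.submit(detector, media)
    try:
        return future.result(timeout=timeout_s)
    except Exception:  # cf.TimeoutError or any error raised by the detector
        return None


def inline_decision(
    media: bytes,
    detectors: Sequence[Detector],  # primary first, then backups
    threshold: float = 0.8,
    timeout_s: float = 0.5,
    fail_open: bool = True,
) -> str:
    for detector in detectors:
        score = score_within_budget(detector, media, timeout_s)
        if score is not None:
            return "quarantine" if score >= threshold else "allow"
    # Every detector failed or timed out: apply the configured fallback policy.
    return "allow" if fail_open else "hold"
```

Because backups are tried sequentially, the worst-case latency is the per-detector budget multiplied by the number of detectors, so the budget has to be set with the whole chain in mind.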

Asynchronous Detection performs analysis after content has been published or distributed, flagging manipulated media for retrospective action. This pattern proves suitable when immediate blocking isn’t feasible due to latency constraints or when the cost of temporary exposure is acceptable. Social media platforms might allow posts to go live immediately while queuing them for detection analysis that completes within minutes or hours.

Asynchronous deployment enables using more computationally expensive detection models without impacting user-facing latency. Organizations can run comprehensive ensemble analysis requiring seconds or minutes of processing time without users waiting during upload. The trade-off involves temporary exposure where synthetic content reaches audiences before detection completes and remediation actions take effect.
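
A minimal asyncio sketch of the asynchronous pattern: content is treated as already published, its ID is queued, and a small pool of workers scores it later and records a retrospective action. The `fake_detector` coroutine, the 0.8 threshold, and the `takedown` and `retry` labels are placeholders; a real deployment would likely use a durable message queue rather than an in-process `asyncio.Queue`.

```python
import asyncio
from typing import Awaitable, Callable, Dict, List, Tuple

AsyncDetector = Callable[[bytes], Awaitable[float]]


async def detection_worker(queue: asyncio.Queue, detector: AsyncDetector,
                           results: Dict[str, str], threshold: float) -> None:
    while True:
        content_id, media = await queue.get()
        try:
            score = await detector(media)  # may take seconds for an ensemble
            results[content_id] = "takedown" if score >= threshold else "ok"
        except Exception:
            results[content_id] = "retry"  # leave for a later pass
        finally:
            queue.task_done()


async def scan_published(items: List[Tuple[str, bytes]], detector: AsyncDetector,
                         n_workers: int = 2, threshold: float = 0.8) -> Dict[str, str]:
    queue: asyncio.Queue = asyncio.Queue()
    results: Dict[str, str] = {}
    workers = [asyncio.create_task(detection_worker(queue, detector, results, threshold))
               for _ in range(n_workers)]
    for item in items:  # items are already live; scanning happens afterwards
        queue.put_nowait(item)
    await queue.join()  # wait until every queued item has been scored
    for w in workers:
        w.cancel()
    await asyncio.gather(*workers, return_exceptions=True)
    return results


async def fake_detector(media: bytes) -> float:
    await asyncio.sleep(0.01)  # stands in for slow, expensive analysis
    return 0.95 if b"swap" in media else 0.1
```

Running `asyncio.run(scan_published([("post-1", b"face swap clip"), ("post-2", b"real clip")], fake_detector))` marks `post-1` for takedown and `post-2` as ok; the window between publishing and that result is the temporary exposure the text describes.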

Hybrid Approaches combine inline and asynchronous detection, using lightweight models for fast initial screening and reserving expensive analysis for suspicious content or high-risk scenarios. Content passing quick inline checks publishes immediately while also queuing for thorough asynchronous analysis. Content triggering initial concerns can be held pending comprehensive detection results.
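
The hybrid routing decision can be reduced to a small function. In this sketch every item is queued for the thorough asynchronous pass, and the quick score only decides whether the item publishes now or waits; the 0.5 hold threshold, the `high_risk` flag, and the list-based queue are illustrative assumptions.

```python
from enum import Enum
from typing import List


class Route(Enum):
    PUBLISH_NOW = "publish_now"  # passed the quick check; deep scan still runs later
    HOLD_PENDING_SCAN = "hold"   # waits for the comprehensive result


def hybrid_route(content_id: str, quick_score: float, high_risk: bool,
                 deep_scan_queue: List[str], hold_threshold: float = 0.5) -> Route:
    """Route content using a fast screening score; everything also gets a deep scan."""
    deep_scan_queue.append(content_id)
    if high_risk or quick_score >= hold_threshold:
        return Route.HOLD_PENDING_SCAN
    return Route.PUBLISH_NOW
```

Treating `high_risk` as an override lets scenarios such as identity verification always wait for the full analysis, regardless of how clean the quick check looks.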

API-based integration enables loose coupling between detection services and content applications. Detection providers expose REST or gRPC APIs that content platforms invoke, submitting media for analysis and receiving manipulation scores or binary classifications. This pattern facilitates using multiple detection providers, A/B testing different solutions, and switching vendors without extensive application changes.
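
Loose coupling usually comes from a thin adapter layer that normalizes each provider’s response into one internal result type, so the rest of the application never sees vendor-specific fields. The two response shapes below (`manipulation_probability`, and `label` plus `confidence`) are invented for illustration and do not describe any real vendor’s API; the injected `call` functions stand in for whatever REST or gRPC client a provider supplies.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Protocol

RawCall = Callable[[bytes], dict]  # wraps a provider's REST/gRPC client (assumed)


@dataclass(frozen=True)
class DetectionResult:
    provider: str
    score: float  # normalized: probability that the media is synthetic, 0..1


class DetectionProvider(Protocol):
    name: str

    def analyze(self, media: bytes) -> DetectionResult: ...


class ProbabilityVendorAdapter:
    """Hypothetical vendor that returns {"manipulation_probability": p}."""

    def __init__(self, name: str, call: RawCall):
        self.name = name
        self._call = call

    def analyze(self, media: bytes) -> DetectionResult:
        raw = self._call(media)
        return DetectionResult(self.name, float(raw["manipulation_probability"]))


class LabelVendorAdapter:
    """Hypothetical vendor that returns {"label": "FAKE" | "REAL", "confidence": c}."""

    def __init__(self, name: str, call: RawCall):
        self.name = name
        self._call = call

    def analyze(self, media: bytes) -> DetectionResult:
        raw = self._call(media)
        c = float(raw["confidence"])
        return DetectionResult(self.name, c if raw["label"] == "FAKE" else 1.0 - c)


class DetectionClient:
    """Application-facing entry point; switching vendors is a configuration change."""

    def __init__(self, providers: Dict[str, DetectionProvider], active: str):
        self._providers = providers
        self.active = active

    def analyze(self, media: bytes) -> DetectionResult:
        return self._providers[self.active].analyze(media)
```

Because every adapter returns the same `DetectionResult`, A/B testing two providers or moving to a new one touches only the adapter and the `active` setting, not the calling code.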

Monitoring and Continuous Improvement

Production detection systems require ongoing monitoring to maintain effectiveness as threat landscapes evolve and system performance drifts over time. Organizations should establish comprehensive observability practices tracking multiple dimensions of detection system health.

Performance metrics including detection accuracy, false positive rates, and false negative rates provide fundamental measures of system effectiveness. However, these metrics are hard to measure on production traffic because the true authenticity of most content is never confirmed. Organizations can employ several approaches to estimate real-world performance.

Periodic ground truth testing submits known authentic and synthetic content through production detection systems, measuring how accurately the system classifies this labeled data. Test sets should represent diverse content types, quality levels, and manipulation techniques likely to appear in production. Regular testing, perhaps weekly or monthly, tracks whether detection performance remains stable or degrades over time.
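
A periodic ground-truth run can report accuracy per slice (content type, quality level, or manipulation technique) and compare each slice with a stored baseline, so a drop on, say, compressed face swaps is not hidden by stable performance elsewhere. The slice names, baseline figures, and 5-point tolerance below are made-up illustrations.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

# One labeled test case: (slice_name, true_label, predicted_label); 1 = synthetic.
Case = Tuple[str, int, int]


def accuracy_by_slice(cases: Iterable[Case]) -> Dict[str, float]:
    correct: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for slice_name, truth, predicted in cases:
        total[slice_name] += 1
        correct[slice_name] += int(truth == predicted)
    return {name: correct[name] / total[name] for name in total}


def degraded_slices(current: Dict[str, float], baseline: Dict[str, float],
                    max_drop: float = 0.05) -> List[str]:
    """Slices whose accuracy fell more than max_drop below the baseline."""
    return sorted(name for name, acc in current.items()
                  if name in baseline and baseline[name] - acc > max_drop)


baseline = {"face_swap_hq": 0.95, "face_swap_compressed": 0.85, "voice_clone": 0.90}
this_week = accuracy_by_slice([
    ("face_swap_hq", 1, 1), ("face_swap_hq", 0, 0),
    ("face_swap_compressed", 1, 0), ("face_swap_compressed", 0, 0),
    ("voice_clone", 1, 1), ("voice_clone", 0, 0),
])
print(degraded_slices(this_week, baseline))  # ['face_swap_compressed']
```

Real test sets need far more than two cases per slice before a drop is meaningful; the tiny lists here only show the mechanics.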

User feedback mechanisms enable reporting when detection makes errors, either blocking authentic content or failing to catch obvious fakes. While user reports may not perfectly represent overall error rates due to reporting bias, they provide valuable signals about egregious failures that frustrate users or allow harmful content to circulate.

Adversarial red teaming involves deliberately attempting to evade detection systems using the latest synthesis techniques and evasion tactics. Security teams or external consultants periodically test whether new manipulation methods successfully bypass detection, identifying blind spots requiring model updates or architectural improvements.

Operational metrics track system latency, throughput, error rates, and availability. Detection systems exhibiting degraded response times may require infrastructure scaling. Increased API error rates might indicate integration problems or service instability requiring investigation and resolution.
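
The operational side can start as simple threshold checks over a window of request data. This sketch computes a nearest-rank 95th-percentile latency and an error rate and returns which alerts fire; the 500 ms budget and 1% error ceiling are placeholder service-level targets, not recommendations.

```python
import math
from typing import List, Sequence


def percentile(values: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile (pct in 0..100)."""
    if not values:
        raise ValueError("no samples")
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def health_alerts(latencies_ms: Sequence[float], errors: int, requests: int,
                  p95_budget_ms: float = 500.0, max_error_rate: float = 0.01) -> List[str]:
    alerts = []
    if percentile(latencies_ms, 95) > p95_budget_ms:
        alerts.append("p95_latency")  # may call for scaling detection capacity
    if requests and errors / requests > max_error_rate:
        alerts.append("error_rate")   # may indicate integration or service problems
    return alerts
```

Tracking a high percentile rather than the mean matters here: a few very slow analyses can break an inline latency budget while the average still looks healthy.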

Detection model updates incorporate newly identified manipulation techniques, addressing drift where production threat landscapes diverge from training data distributions. Organizations should establish processes for rapidly deploying updated models when novel deepfake types emerge or when periodic retraining improves performance on production traffic patterns.

Industry-Specific Applications and Use Cases

Deepfake detection requirements and deployment patterns vary significantly across industries based on different threat models, regulatory environments, and operational constraints. Understanding sector-specific applications enables tailored implementations addressing unique organizational needs.

Financial Services: Fraud Prevention and Identity Verification

Financial institutions face estimated annual losses exceeding $400 million from deepfake-enabled fraud, making detection technology mission-critical for protecting customers and organizational assets. Multiple attack vectors exploit synthetic media to circumvent authentication and authorization controls.

Voice-based social engineering represents a primary threat where fraudsters use cloned voices impersonating account holders to authorize fraudulent wire transfers, account changes, or information disclosure. Attackers record brief audio samples from publicly available sources like social media videos, earnings calls, or media interviews, then use voice synthesis technology to generate convincing clones. Contact center agents hearing what sounds like an authentic customer voice may comply with fraudulent requests, especially when attackers combine voice clones with stolen personal information demonstrating knowledge of account details.

Pindrop Security deployments in banking contact centers analyze incoming calls in real time, generating liveness scores that indicate whether a caller is a live human or synthetic audio playback. High suspicion scores trigger additional authentication challenges or flag calls for fraud investigation. Some implementations route suspicious calls to specialized agents trained in handling potential fraud attempts rather than immediately denying service.

Video-based identity verification used during account opening or high-value transaction authentication faces deepfake threats where synthetic videos impersonate legitimate customers or generate entirely fictitious identities. Attackers submit deepfaked identity documents paired with face-swapped video demonstrating the synthetic identity “holder” during liveness checks.

Detection systems analyze submitted videos for manipulation artifacts, biological signal inconsistencies, and temporal anomalies indicating synthesis rather than authentic camera capture. Some implementations require specific challenge-response actions like reading random numbers or following on-screen instructions, making it harder for pre-generated deepfake videos to pass verification.
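
A random-digit challenge is straightforward to sketch: generate an unpredictable code, then accept the response only if the transcribed digits match and arrive within a short window, which a pre-generated video cannot anticipate. The transcript is assumed to come from a separate speech-to-text step, and the six-digit length and 10-second window are illustrative choices; this check complements, rather than replaces, artifact analysis of the same video.

```python
import secrets
import time
from typing import Optional, Tuple

DIGITS = "0123456789"


def issue_challenge(n_digits: int = 6) -> Tuple[str, float]:
    """Return an unpredictable digit string and the monotonic time it was issued."""
    code = "".join(secrets.choice(DIGITS) for _ in range(n_digits))
    return code, time.monotonic()


def verify_response(expected: str, transcript: str, issued_at: float,
                    now: Optional[float] = None, max_seconds: float = 10.0) -> bool:
    """Accept only if the spoken digits match and the reply arrived in time."""
    now = time.monotonic() if now is None else now
    spoken = "".join(ch for ch in transcript if ch in DIGITS)
    return spoken == expected and 0 <= now - issued_at <= max_seconds
```

The `secrets` module is used instead of `random` so the challenge cannot be predicted from earlier outputs; the time limit narrows the window for an attacker to render a synthetic response on the fly.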

Document forgery detection identifies synthetically generated or manipulated identity documents. Generative AI can create realistic but entirely fabricated driver’s licenses, passports, and utility bills used to establish false identities or support synthetic persona creation at scale. Detection tools analyze document images for generation artifacts, inconsistent fonts or formatting, and impossible document numbers not matching official issuance patterns.
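
Checksum validation is one concrete form of the document-number test. Machine-readable passports and ID cards follow ICAO Doc 9303, where key fields carry a check digit computed with repeating weights 7, 3, 1 (digits count as their value, letters A to Z as 10 to 35, and the filler `<` as 0), summed modulo 10. The sketch below implements that rule; the first sample is the ICAO specimen document number. A failed check is a strong forgery signal, but a passing one proves little, since a generator can compute valid check digits too.

```python
def mrz_check_digit(field: str) -> int:
    """ICAO 9303 check digit: weighted sum (weights 7, 3, 1 repeating) modulo 10."""
    weights = (7, 3, 1)
    total = 0
    for i, ch in enumerate(field):
        if ch in "0123456789":
            value = int(ch)
        elif "A" <= ch <= "Z":
            value = ord(ch) - ord("A") + 10
        elif ch == "<":
            value = 0
        else:
            raise ValueError(f"invalid MRZ character: {ch!r}")
        total += value * weights[i % 3]
    return total % 10


def field_is_consistent(field: str, check_char: str) -> bool:
    """True if the printed check character matches the computed one."""
    return len(check_char) == 1 and check_char in "0123456789" and mrz_check_digit(field) == int(check_char)


print(mrz_check_digit("L898902C3"))        # 6
print(field_is_consistent("740812", "2"))  # True
print(field_is_consistent("740812", "5"))  # False
```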

Media and Journalism: Content Verification and Source Authentication

News organizations and content platforms face deepfake-enabled misinformation campaigns threatening information integrity and eroding public trust. Research from Columbia Journalism Review emphasizes that journalists must understand detection technology limitations while employing it as one tool among multiple verification approaches.

Source authentication workflows integrate detection technology with traditional journalistic verification practices. When newsrooms receive potentially newsworthy audio or video, especially content depicting controversial events or sensitive political subjects, they employ detection analysis alongside fact-checking, source verification, and technical forensics.

Detection tools provide rapid initial screening, flagging suspicious content for deeper investigation while clearing obviously authentic material for expedited verification. However, journalists cannot rely solely on automated detection given generalization limitations and adversarial evasion risks. Negative detection results, indicating no manipulation detected, don’t guarantee authenticity, especially for high-stakes content where sophisticated adversaries may employ evasion techniques.

User-generated content moderation at scale requires automated detection systems processing millions of submissions daily. Platforms hosting video sharing, image uploads, or voice recordings employ detection to identify and potentially restrict synthetic media violating community guidelines or policy restrictions on misleading manipulated content.

Implementation challenges include balancing accuracy requirements against processing costs and latency constraints. Platforms must analyze enormous content volumes quickly enough to avoid backlog accumulation while maintaining sufficient accuracy to avoid both missing harmful deepfakes and incorrectly flagging legitimate user content. Hybrid approaches using fast lightweight detection for initial screening combined with more thorough analysis of flagged content help manage this trade-off.

Elections and political content present heightened deepfake risks where fabricated candidate statements, staged crisis events, or manufactured scandals can influence voter behavior before fact-checkers can respond. Media organizations covering elections establish specialized verification teams with access to detection technology, forensic analysis capabilities, and direct communication channels with campaigns and government officials for rapid verification.

Some jurisdictions have passed legislation requiring disclosure when political advertisements include synthetic media. Enforcement of these regulations may involve detection technology helping identify undisclosed deepfakes in campaign materials. However, the intentional nature of political deepfakes, where creators actively attempt evasion, makes detection particularly challenging.

Enterprise and Corporate: Brand Protection and Executive Security

Organizations face reputational and security risks from deepfakes impersonating executives, misusing brand assets, or fabricating corporate communications. Protection strategies combine detection technology with monitoring and rapid response capabilities.

Executive impersonation attacks deploy synthetic videos or audio purporting to show CEOs, CFOs, or other leaders making false statements about financial performance, strategic decisions, or crisis situations. These attacks aim to manipulate stock prices, damage reputations, or create confusion during sensitive corporate events. Sensity platform deployments continuously monitor social media and online channels for synthetic content impersonating protected executives, alerting security teams to emerging threats.

Brand misuse involves incorporating company logos, products, or trademarks into synthetic media for scams, misinformation, or reputational attacks. Fraudsters create fake promotional videos appearing to offer deep discounts or giveaways, using company branding to lend credibility while directing victims to phishing sites or malware distribution. Detection systems integrated with brand monitoring tools identify these synthetic assets, enabling legal takedown actions and consumer protection alerts.

Internal communications security addresses risks where deepfaked video conferences or voice messages appear to originate from legitimate organizational channels. Remote work environments rely heavily on digital communications, creating opportunities for social engineering attacks exploiting deepfake technology. Organizations implement detection in communication platforms, flagging suspicious audio or video that may represent impersonation attempts.

Employee training complements technological defenses by educating staff about deepfake threats and verification practices. Security awareness programs demonstrate deepfake capabilities, teach recognition of manipulation indicators, and establish protocols for verifying unusual requests even when they appear to come from authenticated sources. Human judgment remains essential for catching sophisticated attacks that evade automated detection.

Government and Public Sector: National Security and Critical Infrastructure

Government agencies deploy detection technology addressing threats ranging from foreign influence operations to critical infrastructure protection. Use cases often involve classified or sensitive contexts with limited public visibility but substantial impact on national security.

Disinformation campaigns by foreign adversaries increasingly incorporate deepfake technology for creating fabricated evidence, staged events, or manipulated statements attributed to government officials or military leaders. Intelligence agencies employ detection capabilities for analyzing suspicious media, though specific implementation details remain classified. Research indicates that nation-state actors from Iran, China, North Korea, and Russia use deepfakes for phishing, reconnaissance, and information warfare.

Border security and immigration applications utilize detection for verifying identity documents and biometric authentication. Fraudulent asylum applications, visa requests, or immigration petitions may include synthetically generated identity documents or manipulated biographical information. Detection systems analyze submitted materials for forgery indicators, though implementation must balance security requirements against legitimate applicant rights and privacy protections.

Law enforcement and judicial proceedings face challenges when deepfaked evidence enters criminal or civil cases. Defense attorneys may question video evidence authenticity, while prosecutors must ensure submitted materials haven’t been manipulated. Forensic laboratories integrate detection technology with traditional forensics, though legal standards for the admissibility of detection results are still evolving. Chain of custody procedures, expert witness testimony, and judicial understanding of detection technology limitations all influence how courts handle deepfake-related evidence questions.

Election integrity initiatives deploy detection for monitoring synthetic media targeting political candidates, fabricating voter fraud evidence, or creating misleading campaign materials. Government cybersecurity agencies often establish election-specific task forces incorporating detection capabilities during campaign periods. However, the challenges of real-time verification during fast-moving political events limit detection technology’s impact without complementary rapid response mechanisms.

Healthcare: Medical Imaging and Telemedicine Security

Healthcare applications of deepfake detection address emerging threats in medical imaging authentication and telemedicine platform security. Although its risks are less publicized than those of other sectors, healthcare faces unique exposure given the life-or-death consequences of medical decisions based on potentially manipulated diagnostic information.

Medical imaging authentication ensures X-rays, MRIs, CT scans, and other diagnostic images represent authentic captures rather than synthetic or manipulated files. Adversaries might alter images to commit insurance fraud, conceal medical conditions, or manufacture false medical histories. Some scenarios involve patients manipulating their own images to obtain desired diagnoses, prescriptions, or disability accommodations.

Detection systems analyze medical images for generation artifacts, inconsistent noise patterns, and anatomical impossibilities suggesting manipulation. However, medical imaging presents unique challenges since clinical images differ dramatically from natural photographs. Detection models trained on general image datasets may not transfer effectively to medical imaging domains. Specialized models trained on medical image datasets provide better performance but require substantial labeled training data.

Telemedicine platform security addresses risks where deepfaked video consultations impersonate patients or healthcare providers. As telehealth adoption grew following the pandemic, synthetic video attacks on consultations became feasible, though few documented cases exist. Prevention involves liveness detection during video consultations, authentication protocols requiring multiple verification factors, and training providers to recognize suspicious behavior patterns.

Prescription fraud prevention identifies synthetic documents or manipulated electronic health records used to obtain controlled substances or expensive medications illegally. While traditional prescription forgery predates deepfake technology, AI-generated documents can create more convincing forgeries at scale. Pharmacies and health insurance companies implement verification systems checking prescription authenticity against prescriber databases and detecting manipulated documentation.

Implementation Roadmap: Deploying Detection in Four Phases

Organizations new to deepfake detection should follow a structured implementation approach, building capabilities incrementally while managing risk and resource constraints. This phased roadmap provides a template adaptable to specific organizational contexts.

Phase 1: Assessment and Foundation Building

Implementation begins with comprehensive assessment of organizational needs, threat exposure, and existing infrastructure capabilities. Organizations should identify specific use cases where deepfake risks pose greatest threats, whether through fraud impact, reputational damage, operational disruption, or regulatory compliance challenges.

Threat modeling exercises map potential attack scenarios, adversary capabilities, and impact magnitudes. Financial institutions might prioritize voice clone attacks targeting account takeover, while media organizations focus on fabricated newsworthy events. Understanding threat priorities guides technology selection and deployment sequencing.

Stakeholder engagement involves security teams, legal counsel, compliance officers, operational managers, and technology leadership. Each group brings different perspectives on requirements, constraints, and success criteria. Security teams emphasize detection accuracy and evasion resistance. Legal counsel addresses regulatory compliance, evidence admissibility, and liability concerns. Compliance officers ensure alignment with industry regulations and privacy requirements. Operational managers focus on workflow integration and user experience impacts.

Existing infrastructure assessment examines authentication systems, content pipelines, API capabilities, and computing resources available for detection workloads. Organizations may discover gaps requiring infrastructure upgrades before detection deployment becomes feasible. Content platforms need API integration points for submitting media to detection services. Contact centers require audio stream capture and analysis insertion points.

Vendor evaluation involves assessing commercial detection solutions against organizational requirements. Organizations should request demonstrations using realistic content samples representative of expected production scenarios. Pilot programs with shortlisted vendors provide hands-on experience with detection accuracy, integration complexity, and operational characteristics before commitment to enterprise contracts.

Technology selection considers detection accuracy, supported media types, API capabilities, pricing models, vendor support quality, and long-term roadmap alignment. Exclusive reliance on a single vendor creates lock-in risk, so organizations should evaluate multi-vendor approaches or open-source alternatives that provide greater flexibility.

Phase 2: Pilot Deployment and Validation

With technology selected and foundational requirements established, organizations implement limited pilots in controlled environments before production rollout. Pilot deployments validate detection performance, expose integration challenges, and build organizational familiarity with the technology.

Pilot scope should be narrow enough for manageable implementation but representative enough to provide meaningful insights. Financial institutions might pilot voice detection in one contact center location rather than enterprise-wide deployment. Media platforms could pilot detection on specific content categories or user segments before expanding coverage.

Ground truth dataset creation assembles labeled examples of authentic and manipulated content representative of expected production scenarios. Organizations should curate diverse examples spanning different manipulation techniques, content qualities, and edge cases. This labeled dataset enables objective measurement of detection accuracy in the organization’s own context rather than relying solely on vendor-provided benchmark claims.

Integration implementation connects detection services with content pipelines, authentication workflows, or monitoring systems through API calls, webhooks, or message queue integration. Development teams build necessary data transformations, error handling, retry logic, and monitoring instrumentation. Thorough testing validates correct operation under various scenarios including system failures, network issues, and malformed inputs.
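
Retry logic for a detection API call typically uses exponential backoff with jitter so that many clients do not retry in lockstep after an outage. In this sketch `TransientError` is a hypothetical exception that a provider client would raise for retryable failures such as timeouts or HTTP 503 responses; the attempt count and delays are placeholders, and the injectable `sleep` parameter exists only to make the function easy to test.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical: raised for retryable failures (timeouts, 503s, throttling)."""


def call_with_retry(fn: Callable[[], T], max_attempts: int = 4, base_delay: float = 0.2,
                    max_delay: float = 5.0, sleep: Callable[[float], None] = time.sleep) -> T:
    """Call fn, retrying transient failures with exponential backoff and full jitter."""
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except TransientError:
            if attempt == max_attempts:
                raise  # give up; the caller applies its fallback policy
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, delay))  # random wait in [0, delay]
    raise RuntimeError("max_attempts must be at least 1")
```

Only errors the client marks as transient are retried; malformed inputs or authentication failures should surface immediately rather than consume the retry budget.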

Performance testing evaluates detection latency, throughput, and resource consumption under realistic loads. Organizations should test at expected peak volumes to ensure detection doesn’t become a bottleneck or introduce unacceptable delays. Load testing might reveal needs for scaling detection infrastructure, implementing caching strategies, or adjusting detection depth based on traffic patterns.

Operational workflow development establishes procedures for handling detection alerts, investigating flagged content, and taking remediation actions. Organizations should define escalation paths, decision authorities, and documentation requirements for consistency and auditability. Playbooks codify responses to different detection scenarios, helping teams respond effectively to identified deepfakes.

User acceptance testing involves stakeholders validating that detection implementation meets functional requirements without introducing excessive friction or false positives. Content moderators, fraud analysts, or customer service representatives should confirm that detection alerts provide actionable information and that workflows enable efficient processing.

Phase 3: Production Rollout and Scaling

Successful pilots transition to production deployment, expanding detection coverage while maintaining system reliability and performance. Phased rollout strategies enable gradual scaling that surfaces issues before they affect entire user populations.

Geographic or organizational unit staging deploys detection region-by-region or business-unit-by-business-unit. Financial institutions might enable detection in North American operations before international expansion. Media platforms could roll out by country or language, starting with English-language content before adding additional languages.

Traffic percentage rollout gradually increases the proportion of content analyzed, starting small and monitoring for issues before each expansion. Organizations might analyze 1% of traffic initially, then 5%, 10%, and 25%, until reaching full coverage. This approach allows rapid rollback if problems emerge, before they affect most users.
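
Percentage rollouts are often implemented with deterministic hashing, so a given item or account lands in the same bucket on every request and anything included at 5% stays included at 25%. This sketch hashes an identifier with SHA-256; the salt string and the 0.01% bucket granularity are assumptions, and changing the salt reshuffles every bucket.

```python
import hashlib


def in_rollout(content_id: str, percent: float, salt: str = "deepfake-detection-rollout") -> bool:
    """Deterministically decide whether content_id falls inside the first `percent` of traffic."""
    digest = hashlib.sha256(f"{salt}:{content_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 10_000  # 0..9999, i.e. 0.01% steps
    return bucket < percent * 100


# Stages from the text: 1%, 5%, 10%, 25%, then full coverage.
for stage in (1, 5, 10, 25, 100):
    share = sum(in_rollout(f"item-{i}", stage) for i in range(10_000)) / 10_000
    print(stage, round(share * 100, 1))
```

Rolling back is then a matter of lowering `percent`; no per-item state needs to be stored.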

Shadow mode operation runs detection analysis without taking automated actions, generating alerts for human review but not blocking content or restricting accounts. This conservative approach builds confidence in detection accuracy and operational processes before enabling automated enforcement. Organizations observe false positive and false negative rates, refine thresholds, and improve workflows before granting detection systems authority to automatically block content.
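
Shadow mode is usually a single switch in the enforcement path: detection runs and its would-be decisions are recorded for human review, but the action taken stays "allow" until the switch is flipped. A minimal sketch, in which the class name, the 0.8 threshold, and the in-memory review list are hypothetical:

```python
import logging
from dataclasses import dataclass, field
from typing import List

log = logging.getLogger("deepfake.enforcement")


@dataclass
class Enforcer:
    threshold: float = 0.8
    shadow_mode: bool = True  # start in shadow, enforce later
    review_queue: List[str] = field(default_factory=list)

    def handle(self, content_id: str, score: float) -> str:
        if score < self.threshold:
            return "allow"
        if self.shadow_mode:
            # Record what would have happened so analysts can measure false positives.
            self.review_queue.append(content_id)
            log.info("shadow: would block %s (score=%.2f)", content_id, score)
            return "allow"
        return "block"
```

Keeping the decision logic identical in both modes means the false positive rate observed in shadow mode is the one users will experience once enforcement is enabled.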

Infrastructure scaling addresses increased computational demands as detection coverage expands. Organizations should monitor system resource utilization, latency trends, and queue depths, proactively scaling infrastructure before performance degradation impacts users. Cloud-based deployments enable elastic scaling, automatically adding detection capacity during traffic spikes and reducing it during low-demand periods.

Runbook development codifies operational procedures for routine management and incident response. Documentation should cover system monitoring, threshold adjustments, model updates, troubleshooting common issues, and escalation procedures for complex problems. Well-maintained runbooks enable operational teams to manage detection systems effectively without excessive reliance on original implementation teams.

Phase 4: Continuous Improvement and Adaptation

Production deployment represents the beginning of ongoing detection operations rather than implementation completion. Organizations must continuously adapt detection capabilities as threat landscapes evolve, synthesis techniques advance, and organizational requirements change.

Threat intelligence integration incorporates information about emerging deepfake techniques, new synthesis models, and observed attack patterns. Security teams should monitor research publications, attend relevant conferences, and participate in industry information-sharing programs. When novel manipulation methods emerge, organizations assess whether existing detection systems remain effective or require updates.

Model retraining addresses detection drift where production performance degrades as threat distributions diverge from training data. Organizations should establish regular retraining schedules, perhaps quarterly or semi-annually, incorporating newly collected labeled data. Continuous learning pipelines may automate retraining triggered by performance metric degradation or novel manipulation technique emergence.

A/B testing evaluates potential detection improvements without risking production stability. Organizations might deploy candidate model updates to small traffic percentages, comparing performance against established baselines. Statistically significant improvements warrant gradual expansion while underperforming updates are abandoned.
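
For a detection-rate comparison, "statistically significant" can be checked with a standard two-proportion z-test, shown here in plain Python. It assumes the two variants were evaluated on independent samples (as in a traffic split) large enough for the normal approximation; if both models score the same items, a paired test such as McNemar’s is more appropriate. The counts in the example, a baseline model catching 820 of 1,000 labeled fakes and a candidate catching 870 of 1,000, are invented.

```python
import math
from typing import Tuple


def two_proportion_z_test(hits_a: int, n_a: int, hits_b: int, n_b: int) -> Tuple[float, float]:
    """Return (z, two-sided p-value) for H0: both variants have the same rate."""
    p_a, p_b = hits_a / n_a, hits_b / n_b
    pooled = (hits_a + hits_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("rates are all 0 or all 1; the test is undefined")
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value


z, p = two_proportion_z_test(820, 1000, 870, 1000)  # baseline A vs candidate B
print(f"z={z:.2f}, p={p:.4f}")
```

The same test applies to false positive rates measured on authentic content, which matters because a candidate that catches more fakes may also flag more legitimate media.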

Feedback loop optimization refines thresholds, tunes model parameters, and adjusts operational workflows based on production experience. Organizations should track metrics including false positive rates, false negative rates, user satisfaction, and operational efficiency. Regular review of these metrics identifies improvement opportunities.

Vendor relationship management maintains engagement with detection technology providers, ensuring access to latest model updates, new feature releases, and technical support. Organizations should establish regular business reviews with vendors, communicating evolving requirements and providing feedback on product capabilities and gaps.

Cross-functional collaboration maintains alignment among security, operations, legal, and business stakeholders. Quarterly or semi-annual reviews assess whether detection continues to meet organizational needs, address concerns raised by different groups, and adjust strategy as business priorities or threat landscapes change.

FAQ: Deepfake Detection Technology

1. Can deepfakes be detected by the human eye?

Human detection of deepfakes varies dramatically with content quality and viewer expertise. Research from MIT and findings published in PNAS show that untrained individuals identify high-quality deepfakes with only 55 to 60% accuracy, barely better than random chance. iProov’s 2025 study reveals that only 0.1% of participants correctly identified all deepfake and real content when specifically asked to look for fakes, even though 60% expressed confidence in their detection abilities.

However, trained observers achieve substantially higher accuracy by focusing on specific indicators. Unnatural facial movements, irregular blinking patterns (deepfakes often blink too infrequently), inconsistent lighting and shadows, lip-sync mismatches where mouth movements don’t perfectly align with speech, and skin texture anomalies provide detection signals. Audio artifacts including unnatural voice tone variations, irregular breathing sounds, and inconsistent background noise also suggest synthesis.
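
Of the indicators above, blink frequency is one of the easiest to quantify. The sketch below uses the eye aspect ratio (EAR) heuristic from Soukupová and Čech's 2016 work on real-time blink detection. It assumes that six landmarks per eye are already supplied by a facial-landmark detector (not shown). The 0.2 closed-eye threshold and the two-frame minimum are common starting points, but they are assumptions to tune for each camera and frame rate. An unusually low blink rate is a signal for closer review, not proof of manipulation.

```python
from math import dist
from typing import Sequence, Tuple

Point = Tuple[float, float]


def eye_aspect_ratio(eye: Sequence[Point]) -> float:
    """EAR from six landmarks p1..p6: p1/p4 are the eye corners,
    p2/p3 the upper lid, p6/p5 the lower lid."""
    p1, p2, p3, p4, p5, p6 = eye
    vertical = dist(p2, p6) + dist(p3, p5)
    horizontal = dist(p1, p4)
    return vertical / (2.0 * horizontal)


def count_blinks(ear_series: Sequence[float], closed_thresh: float = 0.2,
                 min_frames: int = 2) -> int:
    """Count runs of at least min_frames consecutive frames with EAR below closed_thresh."""
    blinks, run = 0, 0
    for ear in ear_series:
        if ear < closed_thresh:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # series ended while the eye was closed
        blinks += 1
    return blinks


def blinks_per_minute(ear_series: Sequence[float], fps: float,
                      closed_thresh: float = 0.2, min_frames: int = 2) -> float:
    """Blink rate over the whole clip, given the video frame rate."""
    minutes = len(ear_series) / fps / 60.0
    if minutes == 0:
        return 0.0
    return count_blinks(ear_series, closed_thresh, min_frames) / minutes
```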

The challenge intensifies with sophisticated deepfakes created using the latest diffusion models and GAN architectures. Studies show that participants were 36% less likely to spot fake videos than fake images, highlighting the particular difficulty of video manipulation. Training dramatically improves detection capabilities, with security professionals and forensic analysts identifying manipulations that untrained observers miss entirely. For the most reliable verification, organizations should combine human judgment with automated detection systems rather than relying exclusively on either approach.

2. How accurate are humans at detecting deepfakes?

Human deepfake detection accuracy hovers around 55 to 65% for untrained observers viewing high-quality synthetic media, only a marginal improvement over random guessing. Research published in PNAS examining 15,016 participants found detection accuracy ranging from 57.6% to 75.4%, depending on video quality and manipulation technique. Performance varied with both person-level factors, such as cognitive ability and analytical thinking, and stimulus-level factors, including deepfake quality and familiarity with the subject.

The accuracy gap widens with sophisticated synthesis methods. Studies indicate that human subjects identified high-quality deepfake videos only 24.5% of the time, demonstrating how advanced generation techniques effectively fool human visual processing systems. Factors influencing detection include viewing duration (longer observation improves accuracy), content familiarity (people recognize manipulated videos of familiar individuals more accurately), and cognitive load (rushed judgments decrease performance).

Training substantially improves outcomes, with specialized education increasing detection rates by 20 to 30 percentage points. However, even experts struggle with state-of-the-art deepfakes that eliminate obvious artifacts. Overconfidence compounds this limitation: 60% of people remain confident in their assessments regardless of actual accuracy. Given these limitations, organizations cannot rely solely on human detection. They should instead combine trained human review with automated detection systems and leverage complementary strengths: humans excel at contextual reasoning, while machines identify subtle technical artifacts.
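
One way to combine the two approaches is score-based triage. The detector resolves clear-cut cases, and the ambiguous middle band goes to trained reviewers. The thresholds below, the `high_stakes` override, and the `Route` names are illustrative assumptions. Real cut-offs would come from each organization's own calibration data.

```python
from enum import Enum


class Route(Enum):
    AUTO_CLEAR = "auto_clear"      # low synthetic score: release without review
    HUMAN_REVIEW = "human_review"  # ambiguous: send to a trained reviewer
    AUTO_FLAG = "auto_flag"        # high synthetic score: block or escalate


def triage(score: float, clear_below: float = 0.2, flag_above: float = 0.9,
           high_stakes: bool = False) -> Route:
    """Route media by detector score, interpreted as P(synthetic) in [0, 1]."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be in [0, 1]")
    if score > flag_above:
        return Route.AUTO_FLAG
    # High-stakes requests (e.g. large transfers) always get a human context check.
    if score < clear_below and not high_stakes:
        return Route.AUTO_CLEAR
    return Route.HUMAN_REVIEW
```

Narrowing the review band reduces analyst workload but shifts more decisions to the model. Widening it does the reverse.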

3. What percentage of people can identify deepfakes correctly?

Detection accuracy varies dramatically across population segments and content types. iProov’s comprehensive 2025 survey revealed that only 0.1% of participants correctly identified all deepfake and real content when explicitly instructed to look for fakes, representing just 1 in 1,000 people. Perhaps more concerning, 22% had never heard of deepfakes before participating, indicating substantial awareness gaps persist despite growing media coverage.

For general population samples viewing mixed authentic and synthetic content, accuracy ranges from 55% to 65%, with performance degrading as deepfake quality increases. Videos prove particularly challenging, with participants 36% less likely to spot fake videos compared to fake images. This video detection difficulty stems from temporal complexity and the human visual system’s tendency to focus on motion and narrative rather than subtle frame-by-frame inconsistencies.

Demographic and training factors significantly affect performance. Younger participants (ages 18 to 34) slightly outperform older demographics, potentially reflecting greater exposure to digital media manipulation. Technical education correlates with improved detection, with computer science and digital media professionals achieving 10 to 15 percentage points higher accuracy than the general population. However, even among technically trained individuals, sophisticated deepfakes maintain deception rates exceeding 30%. Overconfidence persists across skill levels: self-assessed detection ability correlates only weakly with actual performance. Organizations designing security protocols must account for these human limitations rather than assume users will reliably detect synthetic media.

4. How much money has been stolen through deepfake fraud?

Deepfake-enabled fraud has generated staggering financial losses across multiple sectors. The engineering firm Arup lost $25.5 million in January 2024 when a Hong Kong employee authorized 15 wire transfers during a video call where all participants except the victim were AI-generated deepfakes convincingly impersonating company executives including the CFO. This single incident represents one of the largest documented deepfake fraud cases, though many organizations underreport losses due to reputational concerns.

Industry-wide statistics paint an alarming picture. Financial losses in Q1 2025 alone exceeded $200 million, and North America saw a 1,740% increase in deepfake fraud cases between 2022 and 2023. The cryptocurrency sector is particularly vulnerable, accounting for 88% of detected deepfake fraud in 2023. Financial institutions report voice clone attacks costing hundreds of millions annually as fraudsters impersonate account holders to authorize fraudulent transactions.

Projections suggest escalating damage. Deloitte forecasts that fraud losses facilitated by generative AI will reach $40 billion in the United States by 2027, representing exponential growth from current levels. The true cost extends beyond direct theft to include incident response expenses, increased security investments, legal fees, regulatory fines for inadequate controls, and brand damage from publicized breaches. Organizations across sectors must view deepfake detection as essential security infrastructure rather than optional enhancement given these escalating financial risks.

5. What is the ROI of implementing deepfake detection?

Return on investment for deepfake detection depends heavily on organizational risk exposure and implementation scale. Financial services organizations facing substantial fraud risks typically achieve positive ROI within 6 to 12 months. Consider that preventing a single Arup-scale attack ($25.5 million loss) would justify detection implementation costs exceeding $1 million annually. Even smaller prevented frauds of $100,000 to $500,000 rapidly offset detection expenses.

Quantifiable benefits extend beyond direct fraud prevention. Regulatory compliance costs decrease when detection systems demonstrate due diligence in authentication processes, potentially reducing audit expenses and fine risks. Customer trust preservation maintains relationship value, with acquisition costs for replacement customers far exceeding retention investments. Operational efficiency improves as automated detection reduces manual verification workloads, reallocating security analyst time to higher-value investigations.

However, ROI calculations must account for the full cost of ownership. This includes licensing fees ($50,000 to $500,000 annually depending on scale), implementation expenses (20% to 100% of licensing costs), infrastructure for running detection workloads ($5,000 to $50,000 monthly), personnel for operating and maintaining systems, and ongoing model updates and retraining. Before implementation, organizations should establish baseline measurements of fraud losses, authentication delays, and customer friction to enable quantifiable before-and-after comparisons.
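
The cost categories above can be combined with the standard annualized loss expectancy formula (ALE = single loss expectancy × annualized rate of occurrence) into a rough first-year ROI estimate. The following is a minimal sketch. The detection catch rate, attack frequency, and personnel cost are assumptions that each organization must estimate from its own baseline data. The example figures are hypothetical and, where the text gives a range, fall within it.

```python
def annual_loss_expectancy(single_loss: float, annual_rate: float) -> float:
    """ALE = SLE x ARO: expected yearly loss from one attack type."""
    return single_loss * annual_rate


def first_year_roi(single_loss: float, annual_rate: float, catch_rate: float,
                   licensing: float, implementation: float,
                   monthly_infra: float, personnel: float) -> float:
    """(avoided loss - total cost) / total cost for the first year of operation."""
    avoided = annual_loss_expectancy(single_loss, annual_rate) * catch_rate
    total_cost = licensing + implementation + 12 * monthly_infra + personnel
    return (avoided - total_cost) / total_cost


# Hypothetical inputs: two $500,000 attempts per year, 80% caught,
# $200k licensing, implementation at 50% of licensing,
# $10k/month infrastructure, $150k personnel.
roi = first_year_roi(500_000, 2, 0.8, 200_000, 100_000, 10_000, 150_000)
print(f"First-year ROI: {roi:.0%}")
```

Under these assumptions, the estimate comes to roughly 40%. Halving either the catch rate or the attack frequency makes it negative, which is why the baseline measurements matter.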

The strategic value proposition transcends pure financial ROI. Market research forecasts predict that deepfake detection will address 9.9 billion attacks by 2027, generating $5 billion in industry revenue. Organizations that implement detection now position themselves ahead of competitors, building expertise and infrastructure before deepfake threats reach crisis proportions. Early movers establish a reputation for security leadership, while late adopters scramble to catch up after suffering preventable losses.

6. How do I spot a deepfake video?

Spotting deepfake videos requires systematic examination of multiple indicators, though sophisticated fakes may exhibit minimal detectable artifacts. Start by scrutinizing facial features and movements. Unnatural blinking patterns represent a common tell, as many deepfakes blink too infrequently due to training data biases. Research from MIT identifies several key observations: examine whether eye movements appear natural, check if eyebrow expressions match emotional context, and look for inconsistencies in aging markers where skin texture doesn’t match hair graying or eye characteristics.

Pay attention to face boundaries and edges where manipulated regions meet original backgrounds. Artifacts often appear at hairlines, jawlines, and neck transitions, where blending algorithms struggle. Lighting inconsistencies provide another signal, particularly when facial lighting doesn’t match environmental shadows or when multiple inconsistent light sources appear. Audio-visual synchronization also matters: lip movements should precisely match speech timing, and even slight delays or mismatches suggest manipulation.
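
Audio-visual offset can be estimated by cross-correlating a per-frame mouth-opening signal with the audio energy envelope, resampled to the video frame rate. The following is a minimal sketch. It assumes both signals have already been extracted, for example lip distance from facial landmarks and RMS energy per frame. The function names are hypothetical, and many research systems instead use learned audio-visual embeddings rather than this raw correlation.

```python
from statistics import mean, pstdev
from typing import List, Sequence, Tuple


def _standardize(xs: Sequence[float]) -> List[float]:
    m, s = mean(xs), pstdev(xs)
    return [(x - m) / s if s else 0.0 for x in xs]


def best_av_lag(mouth_open: Sequence[float], audio_energy: Sequence[float],
                max_lag: int) -> Tuple[int, float]:
    """Search lags in [-max_lag, max_lag] video frames for the best alignment.

    Returns (lag, score). Positive lag means the audio trails the video;
    score is the mean product of standardized values over the overlap."""
    a, b = _standardize(mouth_open), _standardize(audio_energy)
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        pairs = [(a[i], b[i + lag]) for i in range(len(a)) if 0 <= i + lag < len(b)]
        if len(pairs) < 2:
            continue
        score = sum(x * y for x, y in pairs) / len(pairs)
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag, best_score
```

At 25 fps, a best lag of 2 frames corresponds to audio trailing video by 80 ms. A consistent nonzero lag, or a weak best score across all lags, is a reason for closer inspection rather than a verdict.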

Contextual analysis complements technical examination. Question whether the content aligns with what you know about the purported speaker. Would this person realistically say or do what the video depicts? Verify through alternative sources, checking whether other credible outlets report the same content. When stakes are high, request video calls with spontaneous challenges like “hold up three fingers” or “read this random number” that pre-generated deepfakes cannot accommodate. However, recognize that detection grows increasingly difficult as technology advances. Studies show humans identify sophisticated deepfakes correctly only 24.5% of the time, emphasizing the need for automated detection tools rather than relying exclusively on visual inspection.
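
The spontaneous-challenge tactic can be made systematic by generating unpredictable prompts with a short response window. The following is a minimal sketch using Python's `secrets` module. The prompt templates and the 10-second time-to-live are assumptions. The code only checks that the response arrives in time, so a human still judges whether it was performed naturally. Real-time face-swap tools can respond to some prompts, so this raises the bar rather than guaranteeing authenticity.

```python
import secrets
import time
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical prompt templates; placeholders are filled with fresh random values.
TEMPLATES = [
    "hold up this many fingers: {fingers}",
    "turn your head to the {side}, then back",
    "read this number aloud: {number}",
]


@dataclass
class Challenge:
    prompt: str
    ttl_seconds: float = 10.0
    issued_at: float = field(default_factory=time.monotonic)

    def expired(self, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        return now - self.issued_at > self.ttl_seconds


def new_challenge(ttl_seconds: float = 10.0) -> Challenge:
    """Pick an unpredictable prompt so pre-generated media cannot anticipate it."""
    template = secrets.choice(TEMPLATES)
    prompt = template.format(
        fingers=secrets.randbelow(5) + 1,          # 1 to 5
        side=secrets.choice(["left", "right"]),
        number=f"{secrets.randbelow(10**6):06d}",  # six random digits
    )
    return Challenge(prompt=prompt, ttl_seconds=ttl_seconds)
```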

7. What are the telltale signs of audio deepfakes?

Audio deepfake detection requires attentive listening for subtle artifacts that betray synthetic generation. Unnatural variations in voice tone are a primary indicator: pitch, timbre, or resonance shifts inconsistently in ways human vocal cords wouldn’t produce. Listen for glitches or discontinuities, particularly during transitions between words or sentences, where generative models sometimes create audible seams. Background noise inconsistencies provide another signal when environmental sound doesn’t match expected recording conditions or changes unnaturally mid-sentence.

Breathing patterns offer detection clues, since natural human speech includes periodic breaths aligned with linguistic phrasing. Deepfakes often omit these breathing sounds or insert them at unnatural intervals. Emotional authenticity matters too: check whether vocal affect genuinely matches the content rather than sounding uncannily flat or exaggerated. Listen also for compression artifacts that might suggest multiple generation and encoding cycles rather than a direct recording.
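
Missing breaths can be approximated by searching for long stretches of speech with no low-energy pause. This is a crude heuristic sketch. It assumes frame-level RMS energy has already been computed, here in 20 ms frames. The silence threshold, minimum pause length, and 12-second limit are assumptions to calibrate on known-authentic recordings from the same channel. Breath sounds are not always quieter than speech in noisy or compressed audio.

```python
from typing import List, Sequence, Tuple


def pause_segments(frame_energy: Sequence[float], silence_thresh: float,
                   min_pause_frames: int) -> List[Tuple[int, int]]:
    """(start, end) frame ranges where energy stays below silence_thresh long enough."""
    segments: List[Tuple[int, int]] = []
    start = None
    for i, energy in enumerate(frame_energy):
        if energy < silence_thresh:
            if start is None:
                start = i
        else:
            if start is not None and i - start >= min_pause_frames:
                segments.append((start, i))
            start = None
    if start is not None and len(frame_energy) - start >= min_pause_frames:
        segments.append((start, len(frame_energy)))
    return segments


def longest_unbroken_speech(frame_energy: Sequence[float], frame_sec: float = 0.02,
                            silence_thresh: float = 0.01,
                            min_pause_frames: int = 10) -> float:
    """Seconds of the longest stretch between qualifying pauses."""
    longest, cursor = 0, 0
    for start, end in pause_segments(frame_energy, silence_thresh, min_pause_frames):
        longest = max(longest, start - cursor)
        cursor = end
    longest = max(longest, len(frame_energy) - cursor)
    return longest * frame_sec


def suspiciously_breathless(frame_energy: Sequence[float],
                            max_speech_sec: float = 12.0) -> bool:
    """Flag audio whose longest pause-free stretch exceeds an assumed limit."""
    return longest_unbroken_speech(frame_energy) > max_speech_sec
```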

Context verification complements technical listening. Does the speaker use characteristic phrases, pronunciation patterns, or linguistic quirks consistent with their known communication style? Would they realistically make the statements attributed to them? Pindrop Security research demonstrates that analysis of unique speech characteristics including intonation patterns, rhythm, and cadence enables distinguishing authentic voices from synthetic clones with 99% accuracy under controlled conditions, though real-world performance varies.

For high-stakes scenarios like financial authorizations or identity verification, implement callback verification: independently call known legitimate numbers rather than trusting caller-provided contacts. Request spontaneous responses to unexpected questions that pre-recorded synthetic audio cannot accommodate. However, recognize detection limitations: a meta-analysis reveals that audio deepfake detection methods experience sharp performance drops on out-of-domain datasets. Professional detection tools provide more reliable analysis than unaided human listening.
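
Out-of-domain degradation is usually reported as a rise in equal error rate (EER). The EER is the operating point at which the rate of authentic audio wrongly flagged equals the rate of synthetic audio missed. It is widely reported in anti-spoofing benchmarks such as the ASVspoof challenges. The following is a minimal sketch, assuming higher scores mean "more likely synthetic". Running it on an in-house test set and then on audio from a different source or synthesis method shows how far performance falls.

```python
from typing import Sequence


def equal_error_rate(bonafide_scores: Sequence[float],
                     spoof_scores: Sequence[float]) -> float:
    """Approximate EER by sweeping thresholds over all observed scores.

    Higher score = more likely synthetic; score >= threshold is flagged."""
    best_gap, eer = float("inf"), 1.0
    for t in sorted(set(bonafide_scores) | set(spoof_scores)):
        false_alarm = sum(s >= t for s in bonafide_scores) / len(bonafide_scores)
        miss = sum(s < t for s in spoof_scores) / len(spoof_scores)
        if abs(false_alarm - miss) < best_gap:
            best_gap, eer = abs(false_alarm - miss), (false_alarm + miss) / 2
    return eer
```

For instance, a detector with a low EER on its own test set but a much higher EER on an unseen synthesis method would be showing the generalization gap described above.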

8. Can I detect deepfakes on my phone?

Mobile deepfake detection exists through several approaches, though capabilities remain limited compared to enterprise systems. Consumer apps like AI or Not, Deepware Scanner, and similar tools provide basic detection functionality for uploaded images and videos. These apps typically employ lightweight convolutional neural networks optimized for mobile processors, analyzing content for common manipulation artifacts. Users upload suspicious media, wait for processing (usually 10 to 60 seconds), and receive probability scores indicating whether content appears synthetic.
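
One common way to make CNNs lightweight enough for phones is to replace standard convolutions with depthwise separable ones, as in the MobileNet family. The apps named above do not necessarily use this design. The arithmetic below only illustrates why such layers suit mobile processors, using a hypothetical 3×3 layer with 128 input and 256 output channels.

```python
def standard_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights in a k x k convolution mapping c_in to c_out channels (bias omitted)."""
    return k * k * c_in * c_out


def depthwise_separable_params(k: int, c_in: int, c_out: int) -> int:
    """k x k depthwise conv (one filter per input channel) followed by a 1 x 1 pointwise conv."""
    return k * k * c_in + c_in * c_out


standard = standard_conv_params(3, 128, 256)
separable = depthwise_separable_params(3, 128, 256)
print(standard, separable, f"{separable / standard:.1%}")
```

The separable layer needs 33,920 weights instead of 294,912, about 11.5% as many. The ratio is exactly 1/c_out + 1/k², which is where most of the savings in compute and memory on a phone comes from.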