IBM Quantum Nighthawk 120 Qubit 2026

TL;DR: IBM unveiled Quantum Nighthawk on November 12, 2025, its most advanced quantum processor featuring 120 superconducting qubits connected by 218 next-generation tunable couplers in a dense square lattice architecture. This represents a fundamental shift from IBM’s previous heavy-hex design, enabling circuits with 30% greater complexity than the Heron processor while maintaining sub-1% error rates. Nighthawk targets 5,000 two-qubit gates at launch (end of 2025), scaling to 15,000 gates and 1,000+ connected qubits by 2028. IBM projects achieving verified quantum advantage by December 2026, positioning Nighthawk as the hardware foundation for solving real-world problems that would take classical supercomputers millennia. With production shifted to 300mm wafer fabrication at Albany NanoTech Complex, IBM has doubled R&D speed while increasing chip complexity tenfold. The announcement comes amid intensifying quantum competition from Google’s Willow chip (105 qubits, exponential error reduction) and establishes IBM’s path toward fault-tolerant quantum computing via the Starling system by 2029.

The Quantum Race Just Accelerated

In a dimly lit conference hall in Atlanta, Jay Gambetta, IBM Fellow and Director of IBM Research, held up a small chip no larger than a postage stamp. This wasn’t just another processor announcement. This was IBM staking its claim as the leader in the most consequential computing race since the Manhattan Project.

“We believe that IBM is the only company that is positioned to rapidly invent and scale quantum software, hardware, fabrication, and error correction to unlock transformative applications,” Gambetta declared at the Quantum Developer Conference 2025. The chip in his hand, Quantum Nighthawk, represents IBM’s most aggressive bet yet that quantum advantage—the point where quantum computers definitively outperform all classical methods—will arrive by the end of 2026.

The timing is extraordinary. Less than a year earlier, Google had dominated headlines with its Willow quantum chip, claiming exponential error reduction and a benchmark computation that would take classical supercomputers 10 septillion years. Microsoft introduced its Majorana 1 chip in February 2025, leveraging exotic topological qubits. Amazon Web Services, Quantinuum, IonQ, and a dozen well-funded startups are all racing toward the same goal.

But IBM isn’t just competing. It’s systematically executing a roadmap first published years ago, hitting milestones with metronomic precision. Nighthawk is the latest proof point in a multi-year plan that treats quantum computing not as a moonshot but as an engineering problem with defined solutions.

According to Tom’s Hardware’s analysis, “Nighthawk represents IBM’s best shot at a near-term quantum advantage, with the architecture specifically designed to complement high-performing quantum software and deliver results that classical computers simply cannot match.”

The stakes extend far beyond corporate competition. McKinsey projects quantum computing could generate $1.3 trillion in value across industries by 2035. Applications span drug discovery, financial modeling, cryptography, materials science (BMW and Airbus are already exploring fuel cell development with Quantinuum), and climate simulation. Whoever reaches quantum advantage first doesn’t just win a technical achievement. They unlock an entirely new computational paradigm with profound economic and geopolitical implications.

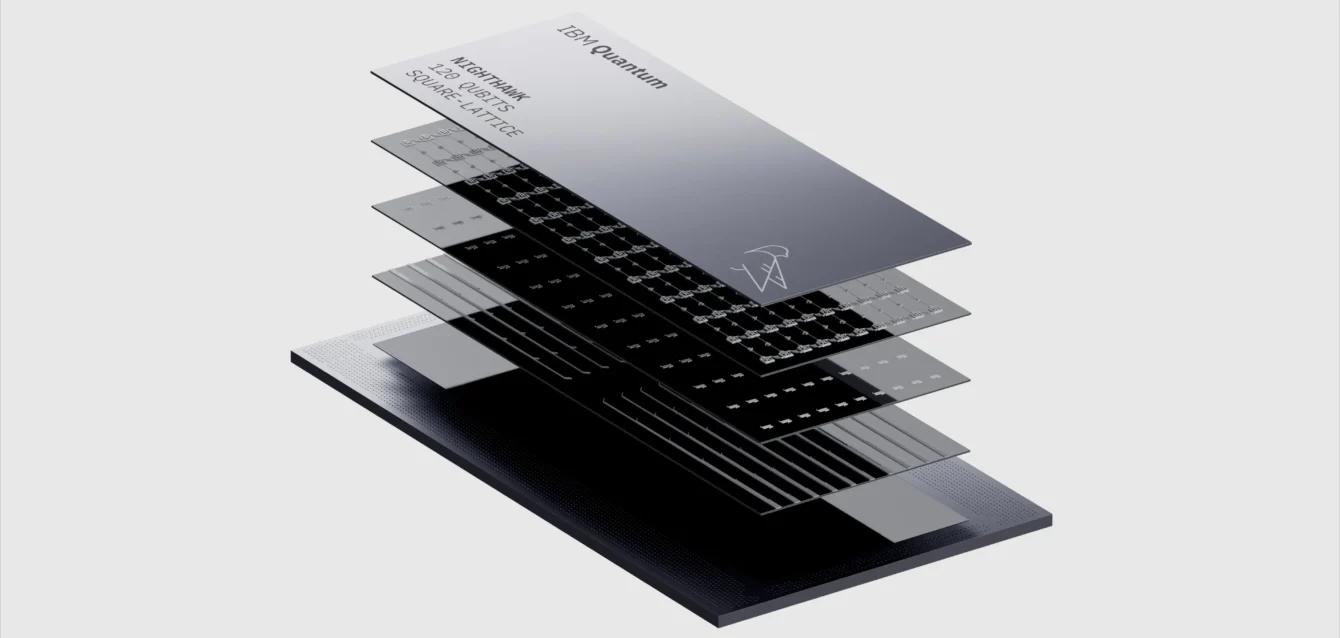

Nighthawk is IBM’s weapon in this race. With 120 qubits, 218 tunable couplers, and a revolutionary square lattice topology, it promises performance levels that seemed impossible just years ago. But raw qubit count tells only part of the story. The real innovation lies in how those qubits connect, how errors are suppressed, and how the entire system integrates with classical computing infrastructure to solve problems humans actually care about.

Decoding Nighthawk: Architecture That Changes Everything

Quantum processors aren’t simply faster computers. They operate on fundamentally different principles rooted in quantum mechanics. Understanding Nighthawk requires grasping these principles and how IBM’s engineering choices amplify quantum advantages while mitigating inherent weaknesses.

The Qubit Foundation

At Nighthawk’s heart lie 120 superconducting qubits: tiny circuits cooled to temperatures colder than outer space (approximately 15 millikelvin, a few hundredths of a degree above absolute zero, or about -459.64°F). At these extreme temperatures, the circuits behave quantum mechanically and can exist in superposition: simultaneously representing both 0 and 1 until measured.

This superposition is quantum computing’s core advantage. A classical bit exists in one definite state. A qubit in superposition effectively explores multiple computational paths simultaneously. Two qubits can represent four states (00, 01, 10, 11) at once. Three qubits represent eight states. The computational space grows exponentially: 120 qubits can represent 2^120 states, a number with 37 digits.
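The growth is easy to check directly. The short Python sketch below counts basis states for a given qubit count and confirms the digit count quoted above; the helper name `state_space_size` is ours, chosen purely for illustration.

```python
def state_space_size(num_qubits: int) -> int:
    """Number of basis states an n-qubit register spans (2**n)."""
    return 2 ** num_qubits


for n in (2, 3, 120):
    size = state_space_size(n)
    # len(str(size)) counts the decimal digits of the exact integer.
    print(f"{n} qubits -> {size} basis states ({len(str(size))} digits)")
```

Python integers are arbitrary precision, so the 120-qubit figure is exact rather than a floating-point approximation.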

But superposition alone isn’t enough. Qubits must also exhibit entanglement, where the state of one qubit becomes fundamentally linked to another regardless of distance. Measuring one entangled qubit instantly affects its partner. This “spooky action at a distance,” as Einstein skeptically called it, enables quantum algorithms to process information in ways classical algorithms cannot replicate.

IBM’s superconducting qubits use Josephson junctions—two superconducting materials separated by an insulating barrier. Quantum tunneling allows Cooper pairs (bound electron pairs) to move across the barrier, creating an anharmonic oscillator that can be manipulated with microwave pulses. Each qubit requires precise calibration, electromagnetic shielding, and continuous monitoring to maintain quantum coherence before decoherence (environmental interference) destroys the quantum state.

Square Lattice: The Topology Revolution

Nighthawk’s most significant architectural departure is its square lattice qubit arrangement. IBM’s previous flagship, the Heron processor, used a “heavy-hex” lattice where qubits connected in hexagonal patterns. While this design offered certain stability advantages, it limited connectivity and required more SWAP gates—operations that physically move quantum information between qubits to enable interactions.

SWAP gates are computationally expensive. Each SWAP introduces additional error opportunities and consumes precious coherence time. Reducing SWAP requirements directly translates to more complex circuits and better results.

Nighthawk’s square lattice connects each qubit to up to four nearest neighbors (qubits on the edge of the grid have fewer), creating a dense grid. The 120 qubits link through 218 tunable couplers, controllable connections that can be turned on or off and adjusted in strength. That is roughly 24% more couplers than Heron’s 176, a substantial increase in native qubit connectivity.
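The coupler count follows from simple grid arithmetic. The sketch below assumes a 10 × 12 rectangular layout, which is our assumption (one rectangular arrangement of 120 qubits that yields 218 nearest-neighbor links), not a layout confirmed in this article. The function names are illustrative.

```python
def grid_couplers(rows: int, cols: int) -> int:
    """Count nearest-neighbor links in a rows x cols square lattice."""
    horizontal = rows * (cols - 1)  # links between side-by-side qubits
    vertical = cols * (rows - 1)    # links between stacked qubits
    return horizontal + vertical


def interior_qubits(rows: int, cols: int) -> int:
    """Qubits that have all four neighbors (not on the grid boundary)."""
    return max(rows - 2, 0) * max(cols - 2, 0)


ROWS, COLS = 10, 12    # assumed layout for 120 qubits
HERON_COUPLERS = 176   # figure quoted in the text

couplers = grid_couplers(ROWS, COLS)
increase = couplers / HERON_COUPLERS - 1

print(f"qubits: {ROWS * COLS}, couplers: {couplers}")
print(f"increase over Heron: {increase:.1%}")
print(f"qubits with four neighbors: {interior_qubits(ROWS, COLS)}")
```

Under this assumed layout, only the interior qubits have four couplers each; qubits on the boundary have two or three.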

The impact is immediate: IBM claims Nighthawk can execute circuits with 30% greater complexity than Heron while maintaining comparable error rates. For algorithm designers, this means tackling problems with deeper circuits, more entangling operations, and richer quantum states without sacrificing accuracy.

According to IBM’s official blog, the square lattice topology was specifically chosen to optimize for algorithms requiring high qubit connectivity, such as variational quantum eigensolvers (VQE) used in chemistry simulations and quantum approximate optimization algorithms (QAOA) for combinatorial optimization.

Tunable Couplers: The Secret Weapon

Not all couplers are created equal. Nighthawk’s “next-generation tunable couplers” represent years of research into controlling qubit interactions with unprecedented precision.

Traditional fixed couplers create permanent connections between qubits. This simplifies chip design but reduces flexibility. Qubits continuously interact with connected neighbors, creating unwanted cross-talk and leakage that degrades coherence.

Tunable couplers act as quantum switches. Engineers can dynamically adjust coupling strength via external control signals, effectively turning interactions on when needed for two-qubit gates and off during single-qubit operations or idle periods. This dramatically reduces noise from neighboring qubits and extends effective coherence times.

IBM’s implementation uses capacitively coupled transmon qubits with frequency-tunable couplers. By adjusting the coupler frequency (via applied magnetic flux), IBM can bring qubits into or out of resonance, enabling or disabling interaction. The technique allows precise control over gate times and amplitudes, optimizing for speed versus fidelity tradeoffs.

The 218 couplers give each local neighborhood of qubits something close to all-to-all connectivity. Not every qubit connects directly to every other (that would require thousands of couplers), but on the square lattice two qubits within a few grid steps of each other can interact with at most two intermediating SWAP gates; more distant pairs need proportionally more. For many algorithms whose interactions are mostly local, this approaches the efficiency of full connectivity.
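A rough way to reason about SWAP overhead on a square lattice: two qubits can interact directly once they sit next to each other, so the minimum number of SWAPs is the grid (Manhattan) distance minus one. The sketch below illustrates that rule of thumb. The (row, column) coordinates and helper names are illustrative assumptions, and a real transpiler also weighs gate errors and scheduling.

```python
def lattice_distance(a, b):
    """Grid (Manhattan) distance between two (row, col) positions."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def swaps_needed(a, b):
    """Minimum SWAPs to bring two qubits next to each other on the grid."""
    return max(lattice_distance(a, b) - 1, 0)


for pair in [((0, 0), (0, 1)), ((0, 0), (1, 1)), ((0, 0), (1, 2))]:
    print(pair, "->", swaps_needed(*pair), "SWAP(s)")
```

Pairs up to three lattice steps apart need at most two SWAPs, and the count grows by one for each additional step.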

The Fabrication Revolution: 300mm Wafers

Quantum processors aren’t mass-produced the way classical chips are. The superconducting circuits, cryogenic requirements, and extreme precision needed make fabrication extraordinarily challenging. Until recently, IBM produced quantum chips on smaller wafers in specialized facilities optimized for experimental one-offs rather than scalable manufacturing.

Nighthawk marks IBM’s transition to 300mm wafer fabrication at the Albany NanoTech Complex in New York. This state-of-the-art semiconductor facility, typically used for cutting-edge classical chip development, has been adapted for quantum processor production.

The move yields massive benefits:

Development Speed: IBM has doubled research and development velocity, cutting the time to build each new processor by at least half. Rapid iteration is critical when optimizing qubit designs, testing coupler configurations, and exploring novel error correction techniques.

Physical Complexity: The 300mm facility enables a tenfold increase in chip complexity. More routing layers, denser component placement, and better thermal management become possible. This directly supports IBM’s long-term roadmap toward fault-tolerant systems requiring millions of physical qubits.

Parallel Exploration: With faster fabrication cycles, IBM can now research multiple design variants simultaneously. Rather than building one experimental chip and waiting months for results, teams can test different coupler architectures, qubit arrangements, and control mechanisms in parallel, dramatically accelerating innovation.

Cost Reduction: While quantum chips will never be cheap (each requires custom fabrication, individual tuning, and sophisticated cryogenic packaging), scalable manufacturing reduces marginal costs. As IBM moves toward commercialization, efficient production becomes increasingly critical.

The Albany facility represents more than a manufacturing upgrade. It signals IBM’s commitment to quantum computing as an industrial technology, not merely a research curiosity. You don’t invest in 300mm wafer capacity unless you’re planning to build thousands of chips.

Performance That Matters: Gates, Fidelity, and CLOPS

Qubit count makes for compelling headlines, but quantum computing performance depends on more subtle metrics. Nighthawk’s true capabilities emerge from examining how those 120 qubits actually operate.

Two-Qubit Gate Fidelity: The Critical Metric

Every quantum computation breaks down into gates—fundamental operations that manipulate qubit states. Single-qubit gates (rotations, phase shifts) are relatively easy, achieving fidelities above 99.99%. The challenge lies in two-qubit gates, where entanglement is created and quantum advantage emerges.

Two-qubit gates require precisely calibrated interactions between qubits. The slightest miscalibration, electromagnetic noise, or thermal fluctuation introduces errors. As circuit depth increases (more gates applied sequentially), errors compound. At some point, accumulated errors overwhelm the computation, producing meaningless results.

IBM’s Heron processor recently achieved two-qubit gate fidelities above 99.9% for over 50% of tested qubit pairs—meaning fewer than one error per thousand operations. This milestone took years of engineering refinement: better qubit designs, improved control electronics, sophisticated calibration routines, and real-time error monitoring.

Nighthawk is expected to match or exceed Heron’s fidelity while supporting 30% more complex circuits. How? The tunable couplers reduce cross-talk and leakage, primary error sources in dense qubit arrays. The square lattice minimizes SWAP operations, which are themselves imperfect gates that introduce errors. Better connectivity means achieving the same algorithmic result with fewer total gates, preserving fidelity.

According to IBM’s performance data, maintaining sub-1% error rates while increasing circuit complexity represents the sweet spot for near-term quantum advantage. Algorithms can solve meaningful problems before error accumulation destroys results.
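To see how quickly errors compound, here is a deliberately crude back-of-the-envelope model: if every two-qubit gate succeeds independently with the same fidelity, the chance that a whole circuit runs without a single gate error is the fidelity raised to the number of gates. The independence assumption and the function names are ours; real devices have correlated errors, and error mitigation recovers useful signal well beyond this naive limit.

```python
import math


def circuit_success_estimate(gate_fidelity: float, num_gates: int) -> float:
    """Probability that no gate fails, assuming independent, identical errors."""
    return gate_fidelity ** num_gates


def gates_until(gate_fidelity: float, target_success: float) -> int:
    """Largest gate count that keeps the estimate at or above target_success."""
    return math.floor(math.log(target_success) / math.log(gate_fidelity))


FIDELITY = 0.999  # the 99.9% two-qubit fidelity figure quoted for Heron

for gates in (100, 1_000, 5_000):
    estimate = circuit_success_estimate(FIDELITY, gates)
    print(f"{gates:>5} gates -> error-free probability ~ {estimate:.4f}")

print("gates before the estimate drops below 50%:", gates_until(FIDELITY, 0.5))
```

Under this simple model, a circuit with thousands of gates almost never runs error-free at 99.9% fidelity, which is why deep circuits lean so heavily on error mitigation and repeated sampling.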

Circuit Layer Operations Per Second (CLOPS)

CLOPS measures how quickly a quantum processor executes complete circuits. Higher CLOPS means faster iteration, more sampling, and better statistical averaging—critical for overcoming quantum noise through brute-force repetition.

IBM’s Heron fleet recently set a new CLOPS record: 330,000, a 65% improvement over 2024’s 200,000. This acceleration came from faster readout electronics, optimized control pulse sequences, and better classical-quantum integration.

For context, the quantum utility experiment (a benchmark in which quantum hardware produces reliable results at a scale beyond brute-force classical simulation) ran in under 60 minutes on the latest Herons. In 2023, the same experiment took days. Nighthawk aims for similar or better CLOPS performance, further reducing time-to-solution for complex algorithms.

Why does speed matter? Quantum computations require statistical sampling. A single circuit execution produces probabilistic results reflecting quantum superposition collapse. Running the circuit thousands or millions of times builds statistical confidence in the answer. Higher CLOPS means faster convergence to accurate results, making quantum computers practical for time-sensitive applications like financial modeling or drug screening.
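The sampling cost can be made concrete with the standard formula for the standard error of an estimated probability. The sketch below assumes independent shots and a single measured probability `p`; the function names are ours. It is only a statistical illustration and does not model CLOPS itself.

```python
import math


def standard_error(p: float, shots: int) -> float:
    """Standard error of an estimated outcome probability after `shots` runs."""
    return math.sqrt(p * (1 - p) / shots)


def shots_for_precision(p: float, target_error: float) -> int:
    """Shots needed so the standard error is at most `target_error`."""
    return math.ceil(p * (1 - p) / target_error ** 2)


for shots in (1_000, 10_000, 100_000, 1_000_000):
    print(f"{shots:>9} shots -> standard error {standard_error(0.5, shots):.5f}")

print("shots for 1% precision at p = 0.5:", shots_for_precision(0.5, 0.01))
```

Because the error shrinks only with the square root of the shot count, halving the uncertainty takes four times as many circuit executions, so execution speed translates directly into achievable precision.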

Scalability: 5,000 Gates Today, 15,000 Tomorrow

IBM quantifies Nighthawk’s capabilities in two-qubit gates—the fundamental entangling operations that define circuit complexity. At launch (end of 2025), Nighthawk targets 5,000 gates. This is already remarkable; most early quantum computers struggled with dozens of gates before errors overwhelmed computation.

But 5,000 gates is just the beginning. IBM’s roadmap projects:

- End of 2026: 7,500 gates (50% increase)

- 2027: 10,000 gates (doubling launch capacity)

- 2028: 15,000 gates with 1,000+ connected qubits via long-range couplers

These aren’t vague aspirations. IBM has a track record of hitting or exceeding roadmap targets. The progression reflects continuous improvements in qubit quality, error mitigation techniques, and system integration rather than revolutionary breakthroughs.

Long-range couplers, first demonstrated on IBM experimental processors in 2024, enable physical connections between distant qubits on the same chip. This sidesteps the SWAP bottleneck entirely for certain qubit pairs, dramatically expanding effective connectivity without increasing error rates.

By 2028, Nighthawk-based systems could approach what IBM calls “modular quantum computing”—multiple Nighthawk chips linked together, scaling beyond single-chip qubit limits while maintaining coherent quantum states across the system. This modular approach forms the foundation for IBM’s ultimate goal: fault-tolerant quantum computers with millions of qubits.

The Software Stack: Where Hardware Meets Algorithms

Nighthawk doesn’t operate in isolation. Its performance depends critically on software infrastructure that translates human-designed algorithms into precise microwave pulses controlling individual qubits.

Qiskit: The Quantum Development Kit

IBM’s open-source Qiskit SDK is the most widely used quantum programming framework, with over 500,000 users worldwide executing billions of circuits. The latest version, Qiskit 2.2, shows 83x faster transpilation (circuit optimization) compared to competing frameworks like Tket 2.6.0.

Transpilation is crucial. Algorithms designed for abstract “ideal” qubits must be mapped onto real hardware with specific connectivity constraints, gate sets, and error profiles. Qiskit’s transpiler finds optimal mappings, inserting necessary SWAP gates, scheduling gates to minimize cross-talk, and selecting the best qubit subset for a given problem.

For Nighthawk, Qiskit 2.2 introduces several key features:

Dynamic Circuits: Previously, quantum circuits were static—all operations predetermined before execution. Dynamic circuits allow mid-circuit measurements with classical conditional logic. Measure a qubit, perform classical processing on the result, and conditionally apply subsequent gates based on the outcome. This enables more sophisticated algorithms like quantum error correction, iterative phase estimation, and adaptive variational methods.

IBM demonstrated utility-scale dynamic circuits at Quantum Developer Conference 2025, showing 25% accuracy improvements and 58% two-qubit gate reductions for a 46-site Ising model simulation with 8 Trotter steps. The demo proved dynamic circuits work at 100+ qubit scales, not just small proof-of-concepts.
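The measure-then-branch pattern can be illustrated with a toy single-qubit simulation in plain Python. This is not Qiskit code and does not use any IBM API; the state representation (a pair of amplitudes) and all function names are our own assumptions. It shows the simplest dynamic-circuit idiom, an active reset: measure a qubit, and apply an X gate only if the outcome was 1.

```python
import math
import random


def measure(state, rng):
    """Projective measurement of one qubit given amplitudes (a0, a1)."""
    _, a1 = state
    p1 = abs(a1) ** 2                       # probability of reading 1
    outcome = 1 if rng.random() < p1 else 0
    collapsed = (0.0, 1.0) if outcome else (1.0, 0.0)
    return outcome, collapsed


def apply_x(state):
    """X gate: swap the |0> and |1> amplitudes."""
    a0, a1 = state
    return (a1, a0)


def measure_and_reset(state, rng):
    """Mid-circuit measurement followed by a classically conditioned X."""
    outcome, state = measure(state, rng)
    if outcome == 1:                        # classical feed-forward step
        state = apply_x(state)
    return outcome, state


rng = random.Random(7)
plus = (1 / math.sqrt(2), 1 / math.sqrt(2))  # equal superposition

for _ in range(5):
    outcome, final = measure_and_reset(plus, rng)
    print(f"measured {outcome}, state after conditional X: {final}")
```

Whatever the random measurement outcome, the conditional gate leaves the qubit in |0⟩, which a static circuit with predetermined gates could not guarantee.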

Samplomatic Engine: Traditional quantum error mitigation requires running circuits hundreds of thousands of times to build statistical confidence. Probabilistic Error Cancellation (PEC) removes noise bias but compounds sampling requirements, sometimes needing millions of shots.

Samplomatic provides fine-grained control over advanced error mitigation, reducing PEC sampling overhead by 100x. It leverages classical high-performance computing to intelligently sample the probability space, extracting accurate expectation values with far fewer quantum circuit executions.

C++ API for HPC Integration: Qiskit now exposes a C++ interface, allowing seamless integration with high-performance classical computing environments. Hybrid algorithms—combining quantum subroutines with classical optimization loops—can execute at full classical computing speed without Python overhead. This is critical for variational algorithms (VQE, QAOA) that iterate thousands of times.

According to IBM’s documentation, the combination of Nighthawk hardware and Qiskit 2.2 software enables a 24% increase in circuit accuracy at 100+ qubit scales while decreasing the cost of extracting accurate results by over 100 times.

The Quantum Advantage Tracker

IBM, alongside Algorithmiq, researchers from the Flatiron Institute, and BlueQubit, launched an open, community-led quantum advantage tracker at QDC 2025. This platform systematically monitors and verifies emerging demonstrations of quantum advantage, encouraging rigorous validation and healthy competition between quantum and classical approaches.

The tracker currently supports three experiments:

Observable Estimation: Computing expectation values of quantum observables (properties of quantum systems) faster than classical shadow tomography or other classical estimation methods.

Variational Problems: Using hybrid quantum-classical algorithms (VQE, QAOA) to solve optimization problems where classical heuristics struggle, such as finding ground states of complex molecular Hamiltonians.

Classically Verifiable Challenges: Problems where quantum solutions can be efficiently checked by classical computers, providing verifiable proof of advantage without requiring trust in the quantum computation itself.

Sabrina Maniscalco, CEO of Algorithmiq, noted: “The model we designed explores regimes so complex that it challenges all state-of-the-art classical methods tested so far.” This reflects the tracker’s purpose: establishing clear, falsifiable benchmarks that prevent premature claims of advantage while accelerating genuine progress.

IBM encourages the broader research community to contribute experiments, propose classical baselines, and rigorously validate results. The tracker represents a philosophical shift: quantum advantage isn’t a single finish line but an ongoing competition pushing both quantum and classical methods to improve.

Quantum Loon: Fault Tolerance on the Horizon

While Nighthawk targets near-term quantum advantage, IBM simultaneously demonstrated IBM Quantum Loon, an experimental processor showcasing all hardware components needed for fault-tolerant quantum computing.

Understanding Fault Tolerance

Quantum computers face an existential challenge: qubits are fragile. Environmental noise, thermal fluctuations, and imperfect controls constantly introduce errors. As computations scale, errors compound, eventually overwhelming any algorithmic advantage.

Fault tolerance solves this through quantum error correction (QEC)—encoding logical qubits across multiple physical qubits such that errors can be detected and corrected without measuring (and thereby destroying) the quantum state. This sounds paradoxical: how can you detect errors without measuring? The answer lies in clever redundancy schemes that measure error syndromes (indicators of error presence and type) while preserving the encoded quantum information.
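The syndrome idea has a simple classical analogue in the three-bit repetition code, sketched below. This is only the bit-flip intuition: real quantum codes extract syndromes through auxiliary qubits and must also handle phase errors, and the names used here are ours.

```python
def encode(bit):
    """Store one logical bit redundantly in three physical bits."""
    return [bit, bit, bit]


def syndrome(codeword):
    """Two parity checks; neither one reveals the encoded bit itself."""
    return (codeword[0] ^ codeword[1], codeword[1] ^ codeword[2])


# Syndrome -> index of the single flipped bit (None means no error detected).
CORRECTION = {(0, 0): None, (1, 0): 0, (1, 1): 1, (0, 1): 2}


def correct(codeword):
    """Undo at most one bit flip using only the syndrome."""
    fixed = list(codeword)
    flipped = CORRECTION[syndrome(fixed)]
    if flipped is not None:
        fixed[flipped] ^= 1
    return fixed


for logical in (0, 1):
    for position in range(3):
        noisy = encode(logical)
        noisy[position] ^= 1                # inject a single bit flip
        print(logical, noisy, syndrome(noisy), "->", correct(noisy))
```

The same error position produces the same syndrome whether the logical bit is 0 or 1, which is the property that lets a code locate errors without learning the stored information.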

The holy grail of QEC is “below threshold” operation: error rates low enough that adding more physical qubits to the encoding actually reduces logical qubit error rates exponentially. Peter Shor showed in 1995 that quantum error correction is possible in principle, and the threshold theorems that followed established that below-threshold operation can work. Google’s Willow chip recently demonstrated it experimentally, achieving exponential error reduction as qubit arrays scaled from 3×3 to 5×5 to 7×7 grids.
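A commonly used rule of thumb captures why the threshold matters: the logical error rate scales roughly as (p / p_th) raised to the power (d + 1) / 2, where p is the physical error rate, p_th the threshold, and d the code distance (3, 5, 7 for the grids above). The sketch below uses that heuristic with made-up numbers; the threshold, prefactor, and error rates are hypothetical and are not Willow’s measured values.

```python
def logical_error_rate(physical_error, threshold, distance, prefactor=0.1):
    """Heuristic scaling: prefactor * (p / p_th) ** ((d + 1) / 2)."""
    return prefactor * (physical_error / threshold) ** ((distance + 1) / 2)


THRESHOLD = 0.01  # hypothetical threshold, for illustration only

for label, p in (("below threshold", 0.005), ("above threshold", 0.012)):
    rates = [logical_error_rate(p, THRESHOLD, d) for d in (3, 5, 7)]
    formatted = ", ".join(f"d={d}: {r:.4f}" for d, r in zip((3, 5, 7), rates))
    print(f"{label} (p={p}): {formatted}")
```

Below the threshold, each step up in code distance multiplies the logical error rate by a factor smaller than one; above it, adding qubits makes things worse.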

Loon’s Architecture

Loon isn’t designed for public quantum advantage demonstrations. It’s a testbed validating technologies needed for IBM’s Starling fault-tolerant system, targeted for 2029.

Key features include:

Long-Range C-Couplers: The most qubit-efficient QEC codes require connecting distant qubits on the same chip. Surface codes, the dominant QEC approach, arrange qubits in two-dimensional grids with only nearest-neighbor interactions. But quantum low-density parity-check (qLDPC) codes, which IBM is pursuing, require long-range connections.

Loon incorporates six-way qubit connectivity via extended couplers that physically link qubits separated by multiple grid spacings. This enables efficient qLDPC implementations that require 90% fewer physical qubits per logical qubit compared to surface codes—a dramatic efficiency gain critical for scaling to millions of qubits.

Multiple Routing Layers: Classical chips use multiple metal layers for signal routing. Quantum chips historically limited layers due to fabrication challenges. Loon features multiple high-quality, low-loss routing layers, enabling the dense wiring needed for high-connectivity QEC codes without excessive cross-talk or signal degradation.

Qubit Reset Mechanisms: Fault-tolerant protocols require resetting auxiliary qubits to ground states between error correction cycles. Loon includes specialized reset gadgets that rapidly return qubits to |0⟩ without disturbing neighboring qubits. Fast, high-fidelity reset is essential for maintaining QEC cycle rates.

Real-Time Error Decoding: IBM announced a 10x speedup in error correction decoding, achieving real-time correction under 480 nanoseconds using qLDPC codes—a full year ahead of schedule. This was accomplished through collaboration with AMD, running QEC decoding algorithms on FPGAs (field-programmable gate arrays) integrated with the quantum system.

Decoding must happen faster than the QEC cycle time; otherwise, errors accumulate faster than correction can fix them. IBM’s <480ns decoding meets this threshold, demonstrating that classical computing hardware can support fault-tolerant quantum operations in real-time.

The Starling Roadmap

IBM’s path to Starling runs through four releases, starting with Loon:

- IBM Quantum Loon (2025): Demonstrates core fault-tolerance components

- IBM Quantum Kookaburra (2026): Adds quantum memory and processing unit attachment

- IBM Quantum Cockatoo (2027): Enables inter-module entanglement for modular scaling

- IBM Quantum Starling (2028-2029): Fault-tolerant system with 200 logical qubits and 100 million quantum gates

Starling will mark a phase transition in quantum computing. Current systems, including Nighthawk, operate in the Noisy Intermediate-Scale Quantum (NISQ) era—useful for near-term advantage on specific problems but limited by error accumulation. Starling enters the fault-tolerant era, where computations scale arbitrarily with error rates kept below acceptable thresholds through active correction.

IBM’s modular approach envisions linking multiple fault-tolerant modules, each containing hundreds of logical qubits, into larger systems. This architecture parallels classical computing’s evolution from single processors to multi-core chips to distributed clusters. Quantum computing will follow a similar trajectory, with modular fault-tolerant systems forming the building blocks of utility-scale quantum computers.

The Competition: How IBM Stacks Up

Nighthawk doesn’t exist in a vacuum. Multiple companies and research institutions are racing toward quantum advantage, each with distinct technological approaches.

Google Willow: The Error Correction Pioneer

Google’s Willow chip, announced December 2024, achieved exponential error reduction—a landmark accomplishment. Willow demonstrated “below threshold” QEC performance, the first time any organization has shown error rates decreasing as physical qubit arrays scale.

Willow’s 105 qubits (fewer than Nighthawk) connect in surface code configurations optimized for nearest-neighbor QEC. Google claims Willow performed a random circuit sampling benchmark in under five minutes that would take a classical supercomputer 10 septillion years.

However, random circuit sampling, while computationally impressive, isn’t a practically useful problem. Google’s subsequent “Quantum Echoes” algorithm for the out-of-time-ordered correlator (OTOC) represents more meaningful progress, demonstrating 13,000x speedup over classical methods on physics simulations.

IBM’s Differentiation: Nighthawk focuses on gate capacity and connectivity for near-term algorithms rather than maximizing QEC demonstrations. IBM’s square lattice and tunable couplers optimize for hybrid variational algorithms (VQE, QAOA) expected to deliver early quantum advantage in chemistry and optimization. Where Google emphasizes fundamental QEC breakthroughs, IBM prioritizes commercially relevant applications.

Microsoft Majorana 1: The Topological Bet

Microsoft’s Majorana 1 chip, announced February 2025, uses topological qubits based on exotic Majorana zero modes. These quasi-particles, predicted theoretically and only recently reported in experiments, potentially offer intrinsic noise protection.

The theory is compelling: topological qubits store quantum information in global properties of the system rather than local states, making them naturally resilient to local perturbations. If scalable, topological quantum computing could achieve fault tolerance with far fewer physical qubits.

The catch: it’s early. Microsoft has demonstrated individual topological qubits but hasn’t yet built multi-qubit systems or shown two-qubit gates. Scaling to dozens or hundreds of topological qubits faces unknown engineering challenges.

IBM’s Differentiation: Superconducting qubits are mature. IBM has built and operated 100+ qubit systems, understands error sources, and has clear scaling pathways. Topological qubits might eventually surpass superconducting technology, but IBM is solving problems today with proven approaches rather than betting on speculative physics.

Amazon, Quantinuum, IonQ: The Emerging Players

Amazon Web Services offers quantum computing through Braket, integrating multiple hardware backends including D-Wave annealers, IonQ trapped-ion systems, and Rigetti superconducting processors. Amazon is also developing its own quantum chip but hasn’t revealed detailed specifications.

Quantinuum (formed by merging Honeywell Quantum Solutions and Cambridge Quantum) recently launched Helios, a trapped-ion quantum computer claiming industry-leading fidelities. Trapped-ion systems use electromagnetic fields to trap individual ions (charged atoms), with quantum information stored in ion internal states. Trapped ions naturally offer high fidelities and long coherence times but face different scaling challenges than superconducting systems.

IonQ similarly pursues trapped-ion technology, recently demonstrating 12% speedups in medical simulations via collaboration with Ansys. IonQ targets cloud-based quantum-as-a-service, partnering with Microsoft Azure and AWS for distribution.

IBM’s Differentiation: Integration and ecosystem. IBM doesn’t just build quantum processors; it provides end-to-end quantum development platforms, open-source software (Qiskit), extensive documentation, educational programs, and a global network of quantum researchers. The company has operated quantum computers in the cloud since 2016, accumulating years of user feedback, algorithm development, and system optimization.

Trapped-ion systems offer advantages (high fidelity, long coherence) but currently scale more slowly than superconducting approaches. IBM’s ability to manufacture 120+ qubit processors on 300mm wafers positions it well for reaching 1,000+ qubit systems by decade’s end.

Real-World Applications: When Quantum Advantage Actually Matters

Quantum computing has suffered from a perception problem: lots of hype, few applications. Nighthawk and the broader push toward 2026 quantum advantage aim to change that.

Drug Discovery and Molecular Modeling

Pharmaceutical development involves simulating molecular interactions to predict drug efficacy and side effects. Classical computers struggle with quantum chemistry—the physics governing electron behavior in molecules. For simple molecules, approximations work. For complex proteins or novel compounds, classical simulation becomes intractable.

Quantum computers naturally simulate quantum systems. Representing a molecule’s quantum state on qubits allows direct computation of properties like ground state energy, reaction pathways, and binding affinities.

Case Study: Accenture Labs, Biogen, and 1QBit are collaborating on quantum-accelerated drug discovery. Early results suggest quantum algorithms can analyze molecular candidates orders of magnitude larger than classical methods handle, potentially accelerating drug development timelines from years to months.

With Nighthawk’s 5,000-7,500 gate capacity, IBM believes variational quantum eigensolvers (VQE) can solve industrially relevant molecular problems by 2026-2027. This isn’t replacing all of drug discovery—screening, clinical trials, and regulatory approval remain unchanged—but quantum computing could dramatically improve the molecular design phase, identifying promising candidates faster and more accurately.

Financial Modeling and Optimization

Banks, hedge funds, and insurance companies constantly solve optimization problems: portfolio optimization, risk assessment, derivative pricing, fraud detection. Many of these problems are NP-hard: the best known exact classical algorithms take time exponential in problem size.

Quantum approximate optimization algorithms (QAOA) may offer speedups for certain combinatorial optimization problems. Quantum computers are not expected to solve NP-hard problems in polynomial time, but quantum algorithms may find better solutions faster than classical heuristics for practical problem sizes.

Case Study: IBM is working with financial institutions to develop quantum algorithms for option pricing, credit risk analysis, and portfolio optimization. Early benchmarks suggest 10-100x speedups are achievable for specific formulations, though results remain preliminary.

McKinsey estimates quantum computing could generate $300-500 billion in value for the financial sector by 2035, primarily through better risk modeling and algorithmic trading strategies enabled by quantum optimization.

Materials Science and Battery Development

Modern batteries, semiconductors, and advanced materials involve complex quantum phenomena: superconductivity, topological insulators, quantum dots, and photovoltaic effects. Designing new materials requires understanding electron interactions across thousands of atoms—a quintessentially quantum problem.

Case Study: BMW and Airbus are collaborating with Quantinuum on quantum simulations for fuel cell development. Hydrogen fuel cells could decarbonize transportation, but current catalyst materials are expensive and inefficient. Quantum simulations might identify novel catalyst designs with superior performance.

IBM’s quantum systems could similarly accelerate battery research, identifying solid-state electrolytes or next-generation cathode materials that classical simulations miss. The economic impact is substantial: better batteries enable longer-range electric vehicles, grid-scale energy storage, and consumer electronics advances.

Cryptography and Security (The Quantum Threat)

Quantum computing’s most famous application is also its most ominous: breaking current encryption. Shor’s algorithm, run on a fault-tolerant quantum computer with thousands of logical qubits, can factor large numbers exponentially faster than the best-known classical algorithms. This would break RSA encryption, the foundation of internet security.
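The reason Shor’s algorithm threatens RSA is that factoring reduces to finding the multiplicative order of a number modulo N. The sketch below shows that reduction classically for tiny inputs. The function names are hypothetical, and the brute-force order search here is exactly the step that takes exponential time classically; it is the part a fault-tolerant quantum computer would replace with quantum period finding.

```python
from math import gcd

def multiplicative_order(a, n):
    """Smallest r > 0 with a**r % n == 1 (brute force; requires gcd(a, n) == 1)."""
    r, x = 1, a % n
    while x != 1:
        x = (x * a) % n
        r += 1
    return r

def factor_via_order(n, a):
    """Try to split n using the order of a mod n; returns (p, q) or None."""
    g = gcd(a, n)
    if g != 1:
        return tuple(sorted((g, n // g)))  # lucky guess: a shares a factor with n
    r = multiplicative_order(a, n)
    if r % 2 == 1:
        return None                        # odd order: pick a different a
    y = pow(a, r // 2, n)
    if y == n - 1:
        return None                        # trivial square root: pick a different a
    p = gcd(y - 1, n)                      # y*y = 1 mod n with y != +-1, so p splits n
    return tuple(sorted((p, n // p)))

print(factor_via_order(15, 7))   # order of 7 mod 15 is 4
print(factor_via_order(21, 2))   # order of 2 mod 21 is 6
```

For a 2048-bit RSA modulus the loop in `multiplicative_order` would never finish on classical hardware, which is why current encryption remains safe until large fault-tolerant machines exist.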

Bitcoin and other cryptocurrencies using elliptic curve cryptography face similar threats. Breaking the elliptic-curve signatures that secure Bitcoin requires an estimated 2,000 logical qubits (equivalent to tens of millions of physical qubits with error correction). While Nighthawk’s 120 qubits pose no immediate risk, the long-term trajectory is clear.

“Q-Day” (the day quantum computers can break current encryption) might arrive in the 2030s. Organizations are already transitioning to post-quantum cryptography: algorithms believed secure against quantum attacks. The National Institute of Standards and Technology (NIST) finalized its first three post-quantum standards (FIPS 203, 204, and 205) in August 2024, and migration efforts are underway.

IBM’s quantum advantage timeline (2026) precedes fault-tolerant systems capable of breaking encryption (2029+). This provides a critical window for implementing quantum-resistant security before Q-Day arrives.

The Path to 2026: What Quantum Advantage Really Means

IBM’s bold prediction—verified quantum advantage by end of 2026—requires unpacking. What constitutes “advantage”? How will it be verified? And most importantly, why should non-experts care?

Defining Quantum Advantage

Quantum advantage (sometimes called “quantum supremacy,” though that term has fallen out of favor due to problematic connotations) occurs when a quantum computer provably outperforms all classical computers on a specific task. Three criteria must be met:

Correctness: The quantum algorithm produces valid results that can be verified.

Speedup: The quantum approach is significantly faster than the best-known classical method (typically requiring orders-of-magnitude improvement, not marginal gains).

Utility: The problem solved has at least potential practical relevance, not just a contrived benchmark designed to favor quantum computers.

Google’s 2019 quantum supremacy claim met the first two criteria but not clearly the third—random circuit sampling has limited practical applications. The Willow-based Quantum Echoes algorithm represents progress toward useful advantage, demonstrating speedups on physics simulations with broader applicability.

IBM’s 2026 target focuses on utility. Rather than single benchmark demonstrations, IBM expects multiple verified advantage cases across observable estimation, variational problems, and classically verifiable tasks—the three categories in the quantum advantage tracker.

The Verification Challenge

Proving quantum advantage is surprisingly difficult. If a classical computer can’t solve the problem, how do you know the quantum solution is correct? If the quantum speedup is marginal, how do you prove the classical algorithm wasn’t simply poorly optimized?

IBM’s community-led tracker addresses this through:

Open Benchmarks: Publicly available problem specifications allowing anyone to propose classical approaches or challenge results.

Classical Baselines: Leading classical algorithms implemented by expert classical computing researchers, ensuring fair comparisons.

Independent Verification: Multiple research groups validate claims, reducing risk of errors or oversights.

Continuous Competition: As classical algorithms improve, quantum systems must maintain advantage, preventing premature declarations of victory.

Jay Gambetta emphasized this philosophy: “We want to encourage a back-and-forth between the best quantum and classical approaches. Quantum advantage isn’t a one-time achievement but an ongoing dialogue pushing both fields forward.”

Why 2026?

IBM’s confidence in the 2026 timeline stems from several factors:

Hardware Readiness: Nighthawk’s architecture, coupled with Heron’s demonstrated performance (330,000 CLOPS, sub-1% error rates), provides the hardware foundation.

Software Maturity: Qiskit 2.2’s dynamic circuits, improved transpilation, and error mitigation tools unlock algorithmic capabilities previously impossible.

Algorithm Development: Years of research have identified specific problems—molecular electronic structure, certain optimization landscapes, quantum simulation protocols—where quantum advantage seems achievable at 100-200 qubit scales with moderate circuit depths.

Community Momentum: Hundreds of research groups globally are developing and testing quantum algorithms. The probability that someone demonstrates verifiable advantage within the next 12-18 months is high, even if individual attempts fail.

Competitive Pressure: Google, Microsoft, Quantinuum, IonQ, and others are pushing toward the same goal. IBM isn’t declaring advantage alone but predicting the broader quantum community will collectively reach this milestone.

Of course, timelines slip. Google’s Sycamore processor was supposed to deliver advantage in 2018; it arrived in 2019. IBM’s own roadmap has seen adjustments. But the trend is unmistakable: quantum computers are improving faster than classical algorithms can compensate. Advantage isn’t a question of if, but when.

Business Implications: Why Investors Are Paying Attention

Nighthawk’s unveiling didn’t just excite physicists. Financial markets reacted immediately. IBM’s stock gained 3% in the week following the announcement, adding billions to market capitalization. Quantum computing pure-plays like IonQ, Rigetti, and D-Wave saw similar bumps.

The $1.3 Trillion Opportunity

McKinsey’s analysis projects quantum computing could generate $1.3 trillion in value across industries by 2035, with:

- Pharmaceuticals: $300-400 billion from accelerated drug discovery

- Chemicals: $200-300 billion from materials design and process optimization

- Finance: $300-500 billion from improved risk modeling and trading strategies

- Logistics: $100-200 billion from supply chain optimization

- Cybersecurity: $50-100 billion from quantum-safe encryption services

The market for quantum hardware and software could reach $170 billion by 2040, with quantum computing as a service (QCaaS) driving recurring revenue streams.

IBM’s Quantum Strategy

IBM approaches quantum computing as a long-term strategic investment, not a near-term profit center. The company has invested billions over two decades building quantum expertise, fabrication capabilities, and developer ecosystems.

Revenue models include:

Quantum-as-a-Service: Cloud access to quantum processors via IBM Quantum Platform. Enterprises pay for circuit execution time, accessing systems like Nighthawk without building their own quantum labs.

Enterprise Partnerships: IBM collaborates with companies like BMW, Airbus, Goldman Sachs, and JPMorgan Chase on quantum research, providing both hardware access and consulting expertise.

Quantum Software and Tools: While Qiskit is open-source, IBM offers premium services, support contracts, and enterprise integrations generating revenue.

Future Hardware Sales: As quantum computers mature, IBM could sell dedicated systems to government agencies, research institutions, and large corporations requiring on-premise quantum capabilities.

IBM’s quantum business remains small relative to its $60 billion annual revenue, but analysts view it as a long-term growth driver. Simply Wall St analysis suggests quantum-as-a-service could become a major revenue stream by the 2030s, potentially rivaling IBM’s hybrid cloud and AI offerings.

Competitive Dynamics

IBM isn’t alone chasing quantum revenue. Tech giants (Google, Microsoft, Amazon, Alibaba) and quantum specialists (IonQ, Rigetti, Quantinuum) all compete for quantum computing dominance.

IBM’s Advantages:

- Track Record: 25+ years of quantum computing research, operational systems since 2016

- Ecosystem: 500,000+ Qiskit users, extensive educational resources, global partnerships

- Integration: Quantum systems integrate with IBM’s classical supercomputers, cloud infrastructure, and enterprise software

- Roadmap Execution: Consistent delivery on promised milestones builds trust with enterprise customers

Challenges:

- Smaller R&D Budgets: Alphabet and Microsoft can outspend IBM on quantum research

- Agile Startups: IonQ and Rigetti move faster than IBM’s corporate bureaucracy

- Technology Risk: Superconducting qubits might not be the winning long-term approach

Despite challenges, IBM’s comprehensive strategy—hardware, software, fabrication, partnerships, and clear roadmaps—positions it well. Nighthawk demonstrates IBM isn’t just keeping pace but setting it.

FAQ: Everything You Need to Know

What is IBM Quantum Nighthawk?

IBM Quantum Nighthawk is a 120-qubit superconducting quantum processor featuring 218 next-generation tunable couplers arranged in a square lattice topology. It represents IBM’s most advanced quantum chip, designed to achieve quantum advantage by executing circuits with 5,000 two-qubit gates at launch (end of 2025), scaling to 15,000 gates by 2028.

How does Nighthawk differ from previous IBM quantum processors?

Nighthawk introduces a square lattice qubit arrangement replacing IBM’s previous heavy-hex topology. This provides over 20% more couplers (218 vs. 176 in Heron), enabling 30% greater circuit complexity while maintaining low error rates. The architecture specifically optimizes for high-connectivity algorithms like variational quantum eigensolvers and quantum approximate optimization algorithms.
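The coupler figures can be sanity-checked with a few lines of arithmetic. The sketch below counts nearest-neighbor links in a rectangular grid and compares the result with Heron’s 176 couplers. The 10-by-12 grid shape is an assumption made here because it reproduces the stated 120 qubits and 218 couplers; the article itself does not give the lattice dimensions.

```python
def square_lattice_couplers(rows, cols):
    """Nearest-neighbor links in a rows x cols grid (horizontal plus vertical)."""
    horizontal = rows * (cols - 1)
    vertical = (rows - 1) * cols
    return horizontal + vertical

ROWS, COLS = 10, 12          # assumed grid shape, not stated in the article
HERON_COUPLERS = 176         # figure quoted in the article

qubits = ROWS * COLS
couplers = square_lattice_couplers(ROWS, COLS)
increase = couplers / HERON_COUPLERS - 1

print(f"{qubits} qubits, {couplers} couplers, {increase:.1%} more than Heron")
```

Under that assumption the grid yields 110 horizontal and 108 vertical links, and the increase over Heron comes to just under 24%, consistent with the "over 20%" figure.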

What is quantum advantage and when will IBM achieve it?

Quantum advantage occurs when a quantum computer provably outperforms all classical computers on a specific task. IBM projects the broader quantum community will achieve verified quantum advantage by end of 2026, with Nighthawk serving as the hardware foundation. The company launched a community-led quantum advantage tracker with three experimental categories to systematically validate emerging claims.

How does Nighthawk compare to Google’s Willow chip?

Google’s Willow (105 qubits) emphasizes quantum error correction demonstrations, achieving exponential error reduction as qubit arrays scale. Nighthawk (120 qubits) focuses on gate capacity and connectivity for near-term practical algorithms. Both represent cutting-edge achievements with different optimization targets—Google prioritizes fundamental QEC breakthroughs, IBM emphasizes commercially relevant applications.

What are tunable couplers and why do they matter?

Tunable couplers are controllable connections between qubits that can be dynamically adjusted in strength or turned on/off via external control signals. This reduces unwanted qubit cross-talk, extends coherence times, and enables precise control over two-qubit gate operations. Nighthawk’s 218 tunable couplers provide the connectivity needed for complex quantum algorithms while minimizing error sources.

When will Nighthawk be available to users?

IBM expects to deliver Nighthawk to users via the IBM Quantum Platform by the end of 2025. The system will be accessible through cloud-based quantum computing services, allowing researchers and enterprises to execute quantum algorithms without building their own quantum labs.

What is IBM Quantum Loon and how does it relate to Nighthawk?

IBM Quantum Loon is an experimental processor demonstrating all hardware components needed for fault-tolerant quantum computing, including long-range C-couplers, multiple routing layers, and qubit reset mechanisms. While Nighthawk targets near-term quantum advantage, Loon represents the first step toward IBM’s Starling fault-tolerant system planned for 2029.

What real-world problems can Nighthawk solve?

Nighthawk is designed for applications including molecular simulation for drug discovery, financial portfolio optimization, materials design for batteries and fuel cells, quantum chemistry calculations, and machine learning optimization. At launch, it will handle problems requiring up to 5,000 two-qubit gates, expanding to 15,000 gates by 2028 as the technology matures.

How do two-qubit gate fidelity and CLOPS measure quantum performance?

Two-qubit gate fidelity measures error rates in entangling operations, with sub-1% errors (99%+ fidelity) considered high-quality. CLOPS (Circuit Layer Operations Per Second) measures circuit execution speed—higher CLOPS means faster iteration and better statistical sampling. IBM’s Heron processors recently achieved 330,000 CLOPS with 99.9% two-qubit fidelities, benchmarks Nighthawk aims to match or exceed.
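A back-of-the-envelope model shows why fidelity matters so much at Nighthawk’s gate counts. The sketch below assumes every two-qubit gate fails independently with the same probability and ignores all other error sources; that is a simplification made for this example, not IBM’s performance model, and the function name is hypothetical.

```python
def error_free_probability(gate_error, num_gates):
    """Chance that no gate fails, assuming independent, identical gate errors."""
    return (1.0 - gate_error) ** num_gates

for fidelity in (0.99, 0.999):
    p = error_free_probability(1.0 - fidelity, 5000)
    print(f"fidelity {fidelity}: P(no error in 5,000 gates) = {p:.2e}")
```

Even at 99.9% fidelity, fewer than 1 in 100 runs of a 5,000-gate circuit would be error-free under this model, which is why error mitigation and fast repeated sampling (high CLOPS) are needed to extract useful results.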

What is the significance of 300mm wafer fabrication?

IBM’s transition to 300mm wafer fabrication at Albany NanoTech Complex enables scalable quantum chip manufacturing, doubling R&D speed, increasing chip complexity tenfold, and allowing parallel exploration of multiple designs. This represents a shift from experimental one-offs to industrial-scale production, critical for commercializing quantum computing.

Is quantum computing a threat to cryptocurrency and encryption?

Fault-tolerant quantum computers with thousands of logical qubits (equivalent to tens of millions of physical qubits) could eventually break current encryption, including Bitcoin’s elliptic curve cryptography. However, Nighthawk’s 120 qubits pose no immediate risk. “Q-Day” (when quantum computers threaten encryption) likely arrives in the 2030s, providing time to transition to post-quantum cryptography already being standardized by NIST.

What is IBM’s roadmap beyond Nighthawk?

IBM’s roadmap includes iterative Nighthawk improvements (7,500 gates by 2026, 10,000 by 2027, 15,000 by 2028) alongside the fault-tolerance pathway: Quantum Loon (2025), Quantum Kookaburra (2026), Quantum Cockatoo (2027), culminating in the Starling fault-tolerant system with 200 logical qubits and 100 million quantum gates by 2029.

The Verdict: Why Nighthawk Matters

Quantum computing has long suffered from a credibility gap: breathless headlines about “computers that could change everything,” followed by years of minimal practical progress. Nighthawk represents something different: methodical engineering executing a multi-year roadmap with measurable milestones.

This isn’t a research curiosity or speculative moonshot. IBM is building quantum computers the way Boeing builds aircraft—with defined specifications, rigorous testing, predictable scaling, and clear applications. Nighthawk’s 120 qubits, 218 tunable couplers, and 5,000-gate capacity represent years of accumulated expertise translated into functional hardware.

The 2026 quantum advantage timeline is ambitious but achievable. Nighthawk provides the hardware foundation. Qiskit 2.2 provides the software infrastructure. The quantum advantage tracker provides rigorous validation mechanisms. Hundreds of researchers globally are developing algorithms. The pieces are in place.

Whether quantum advantage arrives in December 2026, mid-2027, or later matters less than the trajectory. IBM’s roadmap has Nighthawk’s gate capacity tripling from 5,000 to 15,000 two-qubit gates between 2025 and 2028, while classical algorithms improve incrementally. The gap is closing. Advantage is inevitable.

For businesses, the question isn’t whether quantum computing will matter but when to invest in quantum literacy. Companies that understand quantum algorithms, identify relevant applications, and develop quantum expertise today will dominate quantum-enabled industries tomorrow. Those that ignore quantum computing risk being disrupted by competitors leveraging capabilities they don’t understand.

For researchers, Nighthawk opens new computational frontiers. Problems considered intractable become solvable. Chemistry simulations, optimization landscapes, and physics phenomena beyond classical reach become accessible. The scientific discoveries enabled by quantum advantage could rival those enabled by early supercomputers in the 1960s.

For society, quantum computing promises transformative impacts: drugs for previously untreatable diseases, materials enabling sustainable energy, financial systems managing risk with unprecedented precision, and cryptography securing communications in the quantum age.

Nighthawk is one step on a long journey. It isn’t the destination; that remains IBM’s Starling fault-tolerant system and beyond. But it’s a critical waypoint proving that quantum advantage isn’t science fiction but an engineering challenge being systematically solved.

The quantum revolution is coming. IBM just accelerated its arrival.

Last updated: November 21, 2025. IBM Quantum Nighthawk is expected to be available via IBM Quantum Platform by end of 2025. For the latest updates, visit IBM Quantum.