Best AI Voice Generators 2026

Quick Answer: The AI voice generator market in 2026 encompasses over 40 commercially available platforms spanning dedicated text-to-speech tools, cloud-based speech APIs, and integrated audio production suites. This analysis evaluates 15 solutions across voice quality, language coverage, customization depth, pricing accessibility, and deployment flexibility. The market is undergoing a structural shift from basic text-to-speech conversion toward emotionally expressive, real-time conversational synthesis, driven by neural TTS architectures and enterprise demand for voice automation at scale.

What This Analysis Covers:

- 15 AI voice generation platforms evaluated across six primary criteria

- Pricing models ranging from free tiers to enterprise-grade subscriptions

- Deployment options including cloud SaaS, API-first services, and platform-integrated tools

- Use cases for content creators, developers, educators, marketers, and enterprise teams

Key Finding: According to MarketsandMarkets, the AI voice generator market is projected to grow from USD 4.16 billion in 2025 to USD 20.71 billion by 2031, at a CAGR of 30.7%. This growth is concentrating investment around two poles: high-fidelity creative synthesis for media production and low-latency conversational engines for enterprise customer interactions. The gap between these two segments is widening, with most platforms optimizing for one use case at the expense of the other.

How We Evaluated These AI Voice Generation Solutions

Scope

This analysis covers AI voice generation platforms that convert text input into synthetic speech output, including standalone text-to-speech (TTS) tools, API-based speech synthesis services, and integrated audio production platforms. Voice-only modification tools (real-time voice changers without TTS capability), music generation platforms, and speech-to-text transcription services are excluded unless they are part of a broader voice generation suite.

The evaluation period covers platform capabilities as of February 2026, based on publicly available documentation, pricing pages, and independent assessments.

Target Audience

This guide serves a broad audience that includes content creators producing voiceovers for video, podcasts, and audiobooks; developers integrating speech synthesis via API; educators and instructional designers building e-learning modules; marketing teams scaling audio content production; and enterprise operations teams deploying voice agents or IVR systems. Recommendations are intentionally omitted — the analysis provides factual capabilities and documented trade-offs to support individual decision-making across these diverse use cases.

Evaluation Framework

Each platform was assessed against six criteria:

- Voice Quality and Naturalness — Prosody, emotional range, consistency across long-form content, and absence of audible artifacts

- Language and Voice Coverage — Number of supported languages, regional accents, and total voice library size

- Customization and Control — Ability to adjust pitch, speed, emphasis, pauses, emotional tone, and availability of voice cloning

- Integration and Deployment — API availability, SDK support, third-party integrations (video editors, LMS, CMS), and deployment model (cloud, on-premise, hybrid)

- Pricing Transparency — Clarity of pricing structure, free tier availability, character/minute limits, and commercial licensing terms

- Compliance and Ethics — Data handling practices, consent frameworks for voice cloning, watermarking capabilities, and regulatory compliance (SOC 2, GDPR)

Data Sources

Evaluations draw on official vendor documentation and published feature specifications, independent benchmark data from Artificial Analysis and community-driven TTS leaderboards, analyst reports from MarketsandMarkets, Grand View Research, and Gartner, publicly reported adoption metrics and funding disclosures, and user feedback aggregated from developer forums and professional review platforms such as G2 and Capterra.

What This Analysis Does Not Cover

This analysis does not include real-time voice changers designed for gaming or live streaming (e.g., Voice.ai, Voicemod), AI music generation platforms, speech-to-text or transcription-only services, or tools that exclusively target the telephony/call center market without a general-purpose TTS offering. Custom enterprise deployments requiring vendor consultation for pricing are noted but not evaluated on cost-effectiveness.

Independence Statement

This analysis was conducted independently. Axis Intelligence maintains no commercial relationship with any vendor mentioned. No compensation was received for inclusion or placement. All evaluations are based on publicly available information, vendor documentation, and independent technical assessments.

The State of AI Voice Generation in 2026

Market Overview

AI voice generation is a text-to-speech technology category that uses neural network models to convert written text into synthetic spoken audio. Modern implementations rely primarily on neural TTS (NTTS) architectures. These typically pair an acoustic model such as Tacotron or FastSpeech, which predicts intermediate acoustic features from text, with a WaveNet-derived vocoder that renders those features as a speech waveform, producing output with natural prosody, intonation, and emotional variation.

The global AI voice generator market was valued at approximately USD 4.16 billion in 2025 and is projected to reach USD 20.71 billion by 2031, according to a December 2025 report from MarketsandMarkets. Grand View Research provides a comparable estimate, projecting the market at USD 21.75 billion by 2030 from a 2023 base of USD 3.56 billion, at a CAGR of approximately 29.6%. Neural text-to-speech engines and speech synthesis systems held the largest technology segment share at 49.6% in 2025, according to MarketsandMarkets data.
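The growth rates quoted above can be sanity-checked with the standard compound annual growth rate formula. The sketch below is illustrative only: the function name is ours, and the year spans (2025 to 2031, and 2023 to 2030) are read directly from the figures as quoted, which may not match the exact forecast windows the analyst firms used.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.30 means 30% per year)."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Figures as quoted in this section (USD billions).
marketsandmarkets = cagr(4.16, 20.71, 2031 - 2025)
grand_view = cagr(3.56, 21.75, 2030 - 2023)

print(f"MarketsandMarkets implied CAGR: {marketsandmarkets:.1%}")
print(f"Grand View Research implied CAGR: {grand_view:.1%}")
```

Both implied rates land within a fraction of a percentage point of the published figures, which suggests the two forecasts are internally consistent even though they use different base years.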

The media and entertainment sector currently represents the largest end-use segment, driven by demand for multilingual dubbing, voiceover production, and dynamic audio content for streaming platforms and podcasting. North America accounts for approximately 40% of global market share, supported by the concentration of major platform providers and a robust startup ecosystem.

Key Challenges Users Face

Despite rapid improvements in voice quality, several persistent challenges affect adoption across user segments. Voice consistency over long-form content remains an issue — many platforms produce convincing output in short clips but exhibit drift in tone, pacing, or pronunciation across 10+ minute narrations. Language quality varies significantly beyond English; many platforms advertise support for 20-100+ languages, but output quality drops substantially for lower-resource languages and regional dialects.

Pricing complexity creates evaluation friction, particularly for users comparing per-character, per-minute, and per-seat models across different providers. Commercial licensing terms vary widely, with some free tiers restricting commercial use or requiring attribution. For enterprise adopters, compliance requirements around voice cloning consent, synthetic media watermarking, and data residency add evaluation complexity that smaller teams or individual creators may not encounter.
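One way to reduce this friction is to normalize every pricing model to a common unit, such as cost per hour of finished audio. The sketch below is a rough illustration rather than a definitive method. It uses two price points quoted in the platform profiles of this analysis (Amazon Polly Neural at $16.00 per 1M characters and Murf AI Pro at $26/user/month with 48 hours per year). The speaking rate of 900 characters per minute is our assumption, and the subscription figure assumes the full yearly allowance is actually used.

```python
CHARS_PER_MINUTE = 900  # assumption: ~150 spoken words/min at ~6 characters per word


def per_character_cost_per_hour(usd_per_million_chars: float,
                                chars_per_minute: int = CHARS_PER_MINUTE) -> float:
    """Convert a per-character rate into USD per hour of generated audio."""
    chars_per_hour = chars_per_minute * 60
    return usd_per_million_chars * chars_per_hour / 1_000_000


def subscription_cost_per_hour(usd_per_month: float,
                               included_hours_per_year: float) -> float:
    """Convert a monthly subscription with a yearly audio allowance into USD per hour."""
    return usd_per_month * 12 / included_hours_per_year


polly_neural = per_character_cost_per_hour(16.00)
murf_pro = subscription_cost_per_hour(26.00, 48)

print(f"Per-character example: ${polly_neural:.2f} per audio hour")  # $0.86
print(f"Subscription example:  ${murf_pro:.2f} per audio hour")      # $6.50
```

The comparison is deliberately narrow: it ignores editing tools, licensing terms, and unused allowance, all of which can outweigh the raw per-hour figure.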

What’s Changing in 2026

Three developments are reshaping the AI voice generation landscape in 2026.

First, conversational voice AI is emerging as a distinct product category. ElevenLabs, which closed 2025 with over USD 330 million in annual recurring revenue and raised USD 500 million in February 2026 at a USD 11 billion valuation, is investing heavily in its ElevenAgents platform for real-time conversational voice agents. This reflects a broader industry shift from one-way narration toward interactive, low-latency speech generation for customer service, sales, and operational workflows.

Second, regulatory frameworks are advancing. The EU AI Act’s transparency obligations under Article 50, scheduled to become enforceable in August 2026, will require providers of generative AI systems to mark AI-generated content, including synthetic audio, in machine-readable formats. The European Commission published a first draft Code of Practice on marking and labeling AI-generated content in December 2025, proposing multilayered approaches including watermarking, metadata embedding, and content provenance standards. In the United States, over 45 states have enacted some form of deepfake-related legislation as of mid-2025, creating a patchwork of compliance requirements for voice synthesis providers and their users.

Third, consolidation is accelerating. Meta’s acquisition of PlayAI (formerly PlayHT) signals that major technology companies view voice synthesis as strategic infrastructure. Google hired key talent from emotion-focused voice AI company Hume AI in early 2026, and competitor Deepgram raised USD 130 million in January 2026 at a USD 1.3 billion valuation. These moves indicate that standalone voice generation may increasingly become a feature within larger AI platforms rather than an independent product category.

How AI Voice Generation Solutions Are Organized

AI voice generators in 2026 can be categorized into four primary segments based on their architecture, target user, and deployment model. Understanding these categories helps contextualize the differences in pricing, capability, and integration depth across the 15 platforms evaluated in this analysis.

Dedicated Creative TTS Platforms

Dedicated creative TTS platforms are standalone software-as-a-service products designed primarily for content creation workflows — voiceovers, narration, podcasting, and audiobook production. These platforms typically offer browser-based studios with visual editing interfaces, pre-built voice libraries, and export-ready audio output. They prioritize voice expressiveness, ease of use, and production speed over API depth or infrastructure integration.

Typically used by: Content creators, marketing teams, instructional designers, podcasters, independent producers

Price range: Free tiers with limited characters; paid plans from approximately $5–$99/month

API-First Voice Synthesis Services

API-first platforms are designed for developers and engineering teams who need to integrate speech synthesis into applications, products, or automated workflows. These services expose TTS functionality through RESTful APIs and SDKs, with emphasis on latency, throughput, scalability, and programmable voice control (SSML support, streaming output). Some also offer web-based interfaces, but the primary product is the API endpoint.

Typically used by: Software developers, DevOps teams, product engineers building voice-enabled applications

Price range: Pay-as-you-go per character or per minute; free tiers typically 500K–5M characters/month
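To make "programmable voice control" concrete, the sketch below builds a small SSML document of the kind these APIs commonly accept. It uses only elements from the W3C SSML vocabulary (`speak`, `prosody`, `s`, `break`, `emphasis`). The helper name and its parameters are hypothetical, and individual vendors support different subsets of SSML, so treat this as an illustration rather than any one provider's required format.

```python
import xml.etree.ElementTree as ET


def build_ssml(sentences, rate="medium", pause_ms=400, emphasized=frozenset()):
    """Wrap sentences in SSML with a speaking rate, pauses, and optional emphasis.

    `emphasized` holds the indexes of sentences to mark with strong emphasis.
    """
    speak = ET.Element("speak")
    prosody = ET.SubElement(speak, "prosody", {"rate": rate})
    for index, sentence in enumerate(sentences):
        if index > 0:
            # Insert an explicit pause between consecutive sentences.
            ET.SubElement(prosody, "break", {"time": f"{pause_ms}ms"})
        s = ET.SubElement(prosody, "s")
        if index in emphasized:
            ET.SubElement(s, "emphasis", {"level": "strong"}).text = sentence
        else:
            s.text = sentence
    # ElementTree escapes characters such as & and < in the text for us.
    return ET.tostring(speak, encoding="unicode")


ssml = build_ssml(
    ["Your order has shipped.", "Delivery is expected on Friday."],
    rate="slow",
    emphasized={1},
)
print(ssml)
```

Building the markup with an XML library instead of string concatenation keeps user-supplied text from breaking the document when it contains reserved characters.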

Cloud Platform TTS Services

Major cloud infrastructure providers (AWS, Google Cloud, Microsoft Azure) offer TTS as one component within their broader cloud service portfolios. These services benefit from deep ecosystem integration — shared billing, monitoring, identity management — and are designed to operate within existing cloud architectures. Voice quality has improved substantially with neural voice options, though they generally trail dedicated TTS platforms in emotional expressiveness.

Typically used by: Enterprise engineering teams already deployed on a specific cloud platform; organizations requiring infrastructure-level SLAs

Price range: Per-character pricing with free tier allowances; costs scale with usage volume

Integrated Audio/Video Production Suites

Some voice generation capabilities are embedded within broader audio or video production platforms. These tools combine TTS with video editing, screen recording, transcription, or avatar generation, targeting users who need voice as one component of a larger media production workflow rather than as a standalone deliverable.

Typically used by: Video production teams, social media marketers, e-learning developers, multimedia content teams

Price range: Subscription-based, typically $20–$100+/month, bundled with broader production features

AI Voice Generator Comparison: Key Features at a Glance

| Solution | Category | Languages | Voice Library | Voice Cloning | Free Tier | Pricing Model | Notable Limitation |
|---|---|---|---|---|---|---|---|
| ElevenLabs | Creative TTS / API | 32 | 1,200+ | Yes (instant + professional) | 10,000 chars/month | Freemium + per-character | Costs escalate at high volume |
| Murf AI | Creative TTS | 20+ | 120+ | Yes | Limited trial | Per-user subscription ($26–$75/mo) | Smaller voice library vs. competitors |
| WellSaid Labs | Creative TTS (Enterprise) | English-focused | 120+ | Limited (voice avatars) | 7-day trial | Per-seat subscription ($49–$199/mo) | English-only as primary language |
| LOVO AI (Genny) | Creative TTS / Video | 100+ | 500+ | Yes | Limited free plan | Subscription ($24/mo+) | Focused on short-form content |
| Speechify | Accessibility / TTS | 30+ | 200+ (incl. celebrity) | Yes | Free basic tier | Freemium ($11.58/mo annual) | Primary focus is reading/accessibility |
| Resemble AI | API / Creative TTS | 24+ | Custom-focused | Yes (3-min samples) | Limited trial | Per-second pricing + subscription | Learning curve for fine-tuning |
| Listnr | Creative TTS / Podcasting | 142 | 1,000+ | Yes | Limited free plan | Subscription ($19–$99/mo) | Quality varies across languages |
| Descript | Integrated Production | English primary | Limited | Yes (Overdub) | Free tier with limits | Subscription ($24/mo+) | TTS secondary to editing features |
| Amazon Polly | Cloud Platform TTS | 29 | 60+ | No | 5M chars/mo (12 months) | Per-character (pay-as-you-go) | Limited emotional expressiveness |
| Google Cloud TTS | Cloud Platform TTS | 40+ | 400+ | No | 1M chars/mo (WaveNet) | Per-character (pay-as-you-go) | Requires GCP account and setup |
| Microsoft Azure TTS | Cloud Platform TTS | 140+ | 500+ | Yes (Custom Neural Voice) | 500K chars/mo | Per-character (pay-as-you-go) | Custom voice requires approval process |
| OpenAI TTS | API TTS | Multi-language | 6 preset voices | No | Via API credits | Per-character (pay-as-you-go) | Very limited voice selection |
| PlayAI (formerly PlayHT) | API / Creative TTS | 140+ | 600+ | Yes | Limited free plan | Subscription ($29/mo+) | Acquired by Meta; roadmap shifting |
| Fliki | Integrated Video/TTS | 80+ | 2,500+ | No | Free tier with limits | Subscription ($28/mo+) | Voice is bundled with video creation |
| Synthesia | Integrated Video/Avatar | 140+ | 130+ | Yes (avatar-focused) | Free trial | Subscription ($29/mo+) | Avatar-centric; TTS not standalone |

Individual Platform Profiles

ElevenLabs

Overview: ElevenLabs is a voice AI company founded in 2022 that develops neural text-to-speech, voice cloning, speech-to-text, and conversational AI technologies. The platform has expanded from its original TTS focus into a broader audio AI suite that includes dubbing, sound effects generation, music creation, and real-time voice agents.

Core Capabilities:

- Neural TTS with contextual awareness that adjusts intonation based on text content

- Instant voice cloning from short audio samples and professional-grade cloning with extended training

- AI dubbing across 32 languages with retention of original speaker tone and timing

- Conversational AI platform (ElevenAgents) for building interactive voice agents with LLM integration

- Sound effects and music generation from text prompts

- Iconic Voice Marketplace featuring licensed celebrity voices (launched November 2025)

Deployment: Cloud-based SaaS platform with comprehensive API and SDK support

Integration Ecosystem: RESTful API, Python and JavaScript SDKs, integrations with major LLM providers (GPT, Claude, Gemini), workflows compatible with video editors and content management systems

Pricing Approach: Freemium model. Free tier offers approximately 10,000 characters per month. Paid plans start at $5/month (Starter) scaling through Creator ($22/month) and custom Enterprise pricing. Character-based consumption model. (ElevenLabs Pricing) As of February 2026, ElevenLabs reported over USD 330 million in annual recurring revenue and a USD 11 billion valuation following a USD 500 million Series D round led by Sequoia Capital.

Documented Limitations:

- Per-character costs can escalate significantly for high-volume production workflows

- Voice Changer feature can struggle with cross-accent transformations

- Free tier includes attribution requirements and limited commercial usage rights

- Quality of some non-English languages lags behind English output

Typical Users: Audiobook producers, podcast creators, game studios, enterprise teams deploying voice agents, media companies requiring multilingual localization

Murf AI

Overview: Murf AI is a cloud-based text-to-speech platform founded in 2020 that focuses on voiceover production for business, marketing, and e-learning content. The platform combines a voice generation engine with a studio-style editing interface that allows users to sync voice output with video and visual assets.

Core Capabilities:

- Text-to-speech with granular controls for pitch, speed, pause, and word-level emphasis

- Voice cloning for custom brand voice development

- Built-in studio editor with video timeline synchronization

- Audio-to-text conversion and voice changer (recording to AI voice transformation)

- Dubbing and translation capabilities across 20+ languages

Deployment: Cloud-based SaaS platform with API access

Integration Ecosystem: API for programmatic access, integrations with Articulate 360, WordPress, Adobe Captivate, and Google Slides TTS extension

Pricing Approach: Per-user subscription model. Pro plan at $26/user/month includes 48 hours of voice generation per year. Enterprise plan at $75/user/month includes unlimited voice generation; the quoted $4,500 annual figure equals five seats at that rate ($75 × 12 months × 5 users), since a single seat comes to $900 per year. No permanent free tier; limited trial access available. (Murf AI Pricing)

Documented Limitations:

- Voice library of 120+ voices is smaller than several competitors offering 500+

- Language support limited to approximately 20 languages, narrower than platforms supporting 100+

- Some users report inconsistency in output quality across different voice avatars

- Higher-tier pricing may not be justified for users who do not need the studio editing features

Typical Users: Marketing teams producing video voiceovers, instructional designers building e-learning modules, corporate communications teams, small businesses creating explainer videos

WellSaid Labs

Overview: WellSaid Labs is a Seattle-based AI voice generation company founded in 2018 by former Allen Institute researchers. The platform focuses on enterprise-grade voice synthesis with an emphasis on ethical voice sourcing, data security, and production consistency for professional content.

Core Capabilities:

- Neural TTS with word-by-word precision controls for emphasis, pacing, and pronunciation

- Pre-built voice avatar library created through a paid Voice Actor Program with consented recordings

- SOC 2 and GDPR compliance certifications

- Native integrations with Adobe Premiere Pro and Adobe Express

- Team collaboration features with shared projects and asset management

Deployment: Cloud-based SaaS platform (WellSaid Studio) with API access

Integration Ecosystem: Adobe Premiere Pro, Adobe Express, API for custom integrations, enterprise deployment options

Pricing Approach: Per-seat subscription. Maker plan starts at approximately $49/month. Enterprise plan with unlimited projects, SSO, and dedicated support requires sales consultation. No permanent free tier; 7-day free trial available. (WellSaid Labs Pricing)

Documented Limitations:

- English-focused platform with limited multilingual capabilities compared to competitors

- No user-initiated voice cloning from personal audio samples; limited to pre-built voice avatars

- Clip length restricted to 5,000 characters on Maker plan, requiring splitting for long-form content

- Price point positions the platform primarily for enterprise and professional users
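The 5,000-character clip limit noted above means long scripts have to be divided before submission. The sketch below shows one possible approach: pack whole sentences into chunks that stay under the limit, and hard-split only when a single sentence exceeds it. The function name and the sentence-boundary regex are our own assumptions, not part of any WellSaid tooling, and the regex will not handle every abbreviation or language correctly.

```python
import re


def split_for_clip_limit(text: str, limit: int = 5000) -> list[str]:
    """Split text into chunks of at most `limit` characters, preferring sentence breaks."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    chunks: list[str] = []
    current = ""
    for sentence in sentences:
        if not sentence:
            continue
        # A single sentence longer than the limit is cut at the limit as a last resort.
        while len(sentence) > limit:
            if current:
                chunks.append(current)
                current = ""
            chunks.append(sentence[:limit])
            sentence = sentence[limit:]
        candidate = f"{current} {sentence}" if current else sentence
        if len(candidate) <= limit:
            current = candidate
        else:
            chunks.append(current)
            current = sentence
    if current:
        chunks.append(current)
    return chunks


script = "Welcome to the course. " * 400  # roughly 9,200 characters
clips = split_for_clip_limit(script)
print(len(clips), [len(clip) for clip in clips])
```

Splitting on sentence boundaries matters for audio quality as well as compliance with the limit, because a clip that ends mid-sentence tends to produce an audible seam when the segments are joined.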

Typical Users: Enterprise L&D teams, corporate training producers, professional video production teams using Adobe workflows, organizations in regulated industries requiring SOC 2 compliance

LOVO AI (Genny)

Overview: LOVO AI operates Genny, an AI voice generation and video creation platform that combines text-to-speech with video editing capabilities. The platform targets teams producing short-form content for marketing, social media, advertising, and branded video.

Core Capabilities:

- Text-to-speech with 500+ voices across 100+ languages

- Voice cloning from audio samples

- Integrated video editor for combining voiceover with visuals

- Emotion and style controls for adjusting delivery tone

- AI script generation assistance

Deployment: Cloud-based SaaS platform

Integration Ecosystem: Web-based studio; API available for developer integration

Pricing Approach: Freemium with limited free plan. Paid plans start at approximately $24/month (Basic). Higher tiers offer additional characters and features.

Documented Limitations:

- Platform is optimized for short-form content; long-form narration workflows are less developed

- Video editing features, while convenient, are less capable than dedicated video editors

- Voice quality across 100+ languages varies significantly

- Some voice styles may exhibit repetitive intonation patterns in extended use

Typical Users: Social media marketing teams, advertising creatives, small businesses producing short promotional videos, content creators targeting platforms like YouTube Shorts, TikTok, and Instagram Reels

Speechify

Overview: Speechify is a text-to-speech application originally designed as an accessibility tool for individuals with reading difficulties, founded by Cliff Weitzman. The platform has expanded into a broader audio content suite with Speechify Studio, offering voice generation, voice cloning, and content consumption features across mobile, desktop, and browser.

Core Capabilities:

- Text-to-speech from PDFs, web pages, documents, and emails with cross-device synchronization

- Voice library including AI voices and licensed celebrity voices (Snoop Dogg, Gwyneth Paltrow, MrBeast)

- Voice cloning for personal voice replication

- Adjustable reading speeds and offline access

- Browser extension (Chrome, Safari) for reading web content aloud

Deployment: Mobile apps (iOS, Android), desktop apps, browser extensions, cloud-based Speechify Studio

Integration Ecosystem: Chrome extension, Safari extension, mobile apps with OCR capability for physical text scanning

Pricing Approach: Freemium model. Free basic tier with limited features. Premium subscription at approximately $11.58/month billed annually. Speechify Studio for content creation is priced separately.

Documented Limitations:

- Primary design focus is reading/accessibility rather than production voiceover creation

- Studio features for professional voiceover production are newer and less mature than dedicated TTS platforms

- Celebrity voice licensing terms may restrict commercial usage in certain contexts

- Voice quality for production-grade narration trails platforms specifically built for that purpose

Typical Users: Students, professionals seeking reading productivity tools, individuals with dyslexia or reading difficulties, casual content consumers, creators exploring voice content with minimal setup

Resemble AI

Overview: Resemble AI is a voice AI company focused on voice cloning, real-time voice conversion, and speech synthesis with built-in safety features. The platform positions itself as a tool for professional-grade voice work requiring emotional control and ethical safeguards, including deepfake detection and audio watermarking.

Core Capabilities:

- Voice cloning from approximately 3 minutes of recorded audio

- Emotion control through text prompts (adjustable expressions for happiness, sadness, anger)

- Real-time voice conversion (speech-to-speech)

- Built-in deepfake detection (Resemble Detect) and audio watermarking

- Neural speech synthesis with SSML support for fine-grained control

Deployment: Cloud-based platform with API; on-premise deployment option available for enterprise

Integration Ecosystem: RESTful API, Python SDK, enterprise deployment options

Pricing Approach: Per-second pricing model with subscription tiers. Enterprise plans with custom pricing for on-premise deployment. Free trial available with limited features.

Documented Limitations:

- Voice changer feature can produce audio glitches and artifacts in some configurations

- Fine-tuning TTS output requires more technical effort than competitors with simpler interfaces

- Per-second pricing model can be less predictable for budgeting than flat subscription rates

- Smaller general voice library; strength is in custom cloned voices rather than pre-built options

Typical Users: Film and TV production teams, game studios requiring character voices, enterprise teams building branded voice identities, organizations prioritizing synthetic media safety and detection capabilities

Listnr

Overview: Listnr is a text-to-speech platform with integrated podcasting tools, offering voice generation across a broad language set combined with hosting, distribution, and monetization features for audio content.

Core Capabilities:

- Text-to-speech with 1,000+ voices across 142 languages

- Voice cloning capability

- Emotion and punctuation-based speech tuning

- Built-in podcast hosting with RSS feed generation

- Audio editor for voiceover refinement

- Embeddable audio widgets for websites

Deployment: Cloud-based SaaS platform

Integration Ecosystem: RSS feed distribution, website embedding widgets, API access

Pricing Approach: Subscription-based. Individual plan at $19/month, Solo at $39/month, Agency at $99/month. All plans billed annually. Limited free access available.

Documented Limitations:

- Voice quality is inconsistent across the 142 supported languages; high quality concentrated in major languages

- Podcasting features, while useful for distribution, do not match dedicated podcast hosting platforms in analytics depth

- User interface is functional but less polished than competitors focused purely on production quality

- Limited brand recognition compared to leading TTS platforms

Typical Users: Bloggers converting written content to audio, independent podcasters, multilingual content publishers, small publishers adding audio accessibility to websites

Descript

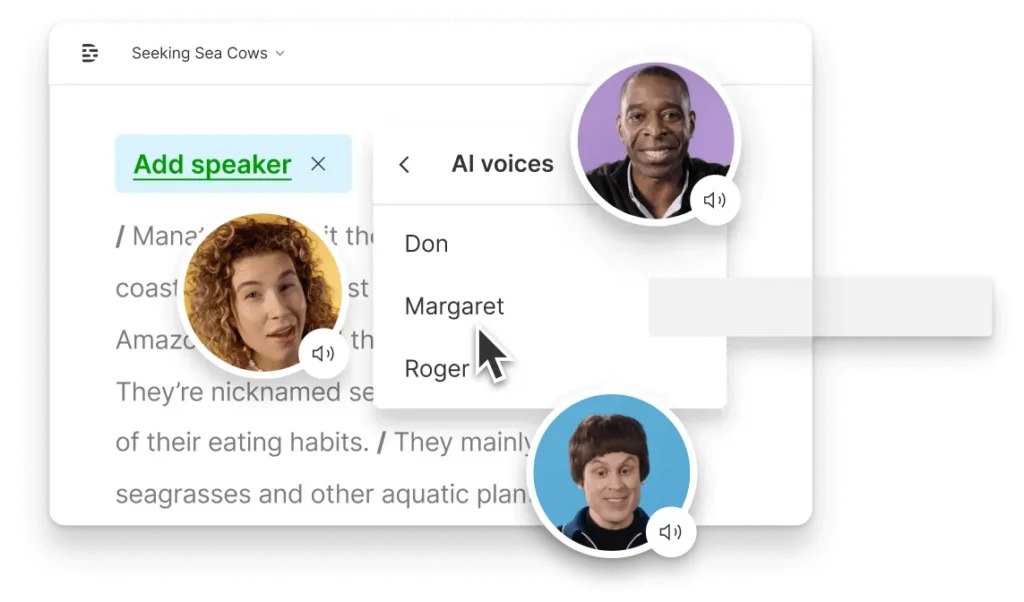

Overview: Descript is an audio and video editing platform that incorporates AI voice features within a broader editing workspace. The platform treats audio editing through a document-style text interface, allowing users to edit spoken content by modifying the transcript.

Core Capabilities:

- Text-based audio and video editing (edit recordings by editing the transcript)

- Overdub voice cloning for generating new speech in the user’s cloned voice

- Filler word removal, background noise reduction, and audio enhancement

- Screen recording and multi-track editing

- Collaboration features for team-based production workflows

Deployment: Desktop application (macOS, Windows) with cloud synchronization

Integration Ecosystem: Export to major audio/video formats, publishing integrations for podcast platforms

Pricing Approach: Freemium. Free tier with limited transcription hours and editing features. Paid plans starting at approximately $24/month.

Documented Limitations:

- TTS/voice generation is a secondary feature within a broader editing platform, not the primary product

- Overdub cloning requires consent verification and produces output best suited for corrections rather than full narration

- Voice generation capabilities are narrower than dedicated TTS platforms

- Primary language support focused on English

Typical Users: Podcast producers editing recorded content, video editors needing to correct or extend voiceover segments, content teams prioritizing editing workflow efficiency over standalone voice generation

Amazon Polly

Overview: Amazon Polly is a cloud-based text-to-speech service offered by Amazon Web Services (AWS) that converts text into lifelike speech using deep learning models. Originally launched in 2016, Polly is designed primarily for developers and engineering teams integrating speech synthesis into applications, devices, and automated workflows within the AWS ecosystem.

Core Capabilities:

- Multiple voice engine tiers: Standard (concatenative synthesis), Neural (NTTS), Long-Form (optimized for extended narration), and Generative (latest, most expressive engine)

- Support for 29 languages with dozens of male and female voices across regional variants

- SSML (Speech Synthesis Markup Language) support for controlling pronunciation, volume, pitch, speed, and emphasis

- Speech Marks output for lip-sync, word highlighting, and subtitle generation

- Real-time streaming and batch synthesis with output in MP3, OGG, and PCM formats

- Native integration with AWS services including Amazon S3, Amazon Connect, and AWS Lambda

Deployment: Fully managed cloud service accessible via API. No on-premise deployment option.

Integration Ecosystem: RESTful API, AWS SDKs (Python, Java, Node.js, .NET, Go, Ruby, PHP, C++), deep integration with AWS services (S3, Connect, Translate, Lex), third-party connectors via AWS Marketplace
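For readers evaluating the Python SDK route, the sketch below shows roughly what a synthesis call looks like with boto3's Polly client and its `synthesize_speech` operation. The helper names, the default voice (`Joanna`), and the output path are our own choices for illustration. Running `synthesize_to_file` requires boto3 plus configured AWS credentials and a region, and not every voice supports every engine, so check the voice list before relying on a particular combination.

```python
POLLY_ENGINES = {"standard", "neural", "long-form", "generative"}


def build_polly_request(text, voice_id="Joanna", engine="neural", ssml=False):
    """Assemble keyword arguments for the Polly SynthesizeSpeech operation."""
    if engine not in POLLY_ENGINES:
        raise ValueError(f"unknown engine: {engine!r}")
    return {
        "Text": text,
        "TextType": "ssml" if ssml else "text",
        "OutputFormat": "mp3",
        "VoiceId": voice_id,
        "Engine": engine,
    }


def synthesize_to_file(text, path, **options):
    """Call Polly and write the returned audio stream to `path`."""
    import boto3  # imported lazily so the request builder works without AWS set up

    client = boto3.client("polly")
    response = client.synthesize_speech(**build_polly_request(text, **options))
    with open(path, "wb") as audio_file:
        audio_file.write(response["AudioStream"].read())


request = build_polly_request("Thanks for calling. How can I help?", engine="neural")
print(request)
# To produce audio (needs AWS credentials):
# synthesize_to_file("Thanks for calling. How can I help?", "greeting.mp3")
```

Keeping request construction separate from the network call makes it easy to validate engine and voice settings in unit tests without incurring per-character charges.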

Pricing Approach: Pay-as-you-go per character. Standard voices at $4.00 per 1 million characters, Neural voices at $16.00 per 1M characters, Long-Form at $100.00 per 1M characters, Generative at higher tiers. Free tier includes 5M standard characters/month and 1M neural characters/month for the first 12 months. (Amazon Polly Pricing)
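The per-character rates and free-tier allowances above translate into a monthly bill as sketched below. The rates are copied from this section as quoted. The Generative engine is omitted because no specific rate is given here, and the function name and the simplification that free-tier characters are deducted first are our assumptions. Always confirm against the current pricing page before budgeting.

```python
# USD per 1 million characters, as quoted in this section.
POLLY_USD_PER_MILLION = {"standard": 4.00, "neural": 16.00, "long-form": 100.00}

# Monthly free allowance during the first 12 months, as quoted in this section.
POLLY_FREE_CHARS_PER_MONTH = {"standard": 5_000_000, "neural": 1_000_000}


def polly_monthly_cost(characters: int, engine: str, within_free_tier: bool = False) -> float:
    """Estimate one month's charge in USD for a single engine."""
    if engine not in POLLY_USD_PER_MILLION:
        raise ValueError(f"no quoted rate for engine: {engine!r}")
    free = POLLY_FREE_CHARS_PER_MONTH.get(engine, 0) if within_free_tier else 0
    billable = max(0, characters - free)
    return round(billable * POLLY_USD_PER_MILLION[engine] / 1_000_000, 2)


for engine in POLLY_USD_PER_MILLION:
    cost = polly_monthly_cost(3_000_000, engine, within_free_tier=True)
    print(f"{engine:>10}: ${cost:,.2f} for 3M characters")
```

At 3 million characters per month inside the free-tier window, the Standard engine costs nothing, Neural comes to $32.00, and Long-Form to $300.00, which illustrates how quickly engine choice dominates the bill.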

Documented Limitations:

- Voice library is smaller (60+ voices) than dedicated creative TTS platforms offering 500+

- Limited emotional expressiveness compared to platforms focused on creative production use cases

- Requires AWS account setup and familiarity with AWS service architecture, creating a higher barrier to entry for non-technical users

- No voice cloning capability for custom brand voices

- Standard voices sound notably robotic; Neural, Long-Form, and Generative voices are substantially better, but at the listed rates Neural costs 4x and Long-Form 25x the Standard per-character price

Typical Users: Software developers building voice-enabled applications, enterprise teams deploying IVR and customer service automation within AWS infrastructure, organizations needing scalable TTS as a microservice component

Google Cloud Text-to-Speech

Overview: Google Cloud Text-to-Speech is a cloud-based speech synthesis service within Google Cloud Platform (GCP) that leverages Google’s DeepMind research in neural audio generation. The service offers multiple voice engine tiers including Standard, WaveNet (based on DeepMind’s WaveNet research), Neural2, and Studio voices, with the latter producing output approaching professional narration quality.

Core Capabilities:

- Multiple voice engine tiers: Standard, WaveNet, Neural2, Studio, and Journey (conversational) voices

- 400+ voices across 40+ languages and regional variants

- SSML support with fine-grained control over pronunciation, pauses, emphasis, and speaking rate

- Audio profiles optimized for different playback devices (phone, headphones, smart speakers, car)

- Custom Voice creation for enterprise customers (requires Google Cloud sales engagement)

- Streaming and batch synthesis with output in MP3, OGG, WAV, and MULAW formats

Deployment: Fully managed cloud service via GCP. No on-premise option for standard customers.

Integration Ecosystem: RESTful API, gRPC API, client libraries for Python, Java, Node.js, Go, C#, Ruby, PHP. Native integration with Google Cloud services (Dialogflow, Cloud Storage, Pub/Sub)
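As an illustration of the REST interface and the device-specific audio profiles mentioned above, the sketch below assembles a JSON body for the v1 `text:synthesize` method and decodes the base64 `audioContent` field that the service returns. The helper names and default voice are ours, the request is only built and not sent, and authentication (an OAuth token or API key) is left out entirely, so this is a starting point rather than a working client.

```python
import base64
import json

GOOGLE_TTS_ENDPOINT = "https://texttospeech.googleapis.com/v1/text:synthesize"


def build_synthesize_body(text, voice_name="en-US-Wavenet-D", language_code="en-US",
                          device_profile=None, speaking_rate=1.0, ssml=False):
    """Build the JSON body for a text:synthesize request."""
    audio_config = {"audioEncoding": "MP3", "speakingRate": speaking_rate}
    if device_profile:
        # Audio profiles are passed as a list of effects profile IDs.
        audio_config["effectsProfileId"] = [device_profile]
    return {
        "input": {"ssml" if ssml else "text": text},
        "voice": {"languageCode": language_code, "name": voice_name},
        "audioConfig": audio_config,
    }


def decode_audio(response_json: dict) -> bytes:
    """Extract raw audio bytes from a synthesize response."""
    return base64.b64decode(response_json["audioContent"])


body = build_synthesize_body(
    "Your appointment is confirmed for Tuesday.",
    device_profile="telephony-class-application",
)
print(json.dumps(body, indent=2))
```

Choosing a profile such as `telephony-class-application` or `headphone-class-device` asks the service to post-process audio for that playback context, which can matter more than voice tier for IVR deployments.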

Pricing Approach: Pay-as-you-go per character, tiered by voice engine. Standard voices at $4.00 per 1M characters, WaveNet at $16.00 per 1M, Neural2 at $16.00 per 1M, Studio at $160.00 per 1M. Free tier includes 1M standard characters and 1M WaveNet characters per month (ongoing, not time-limited). (Google Cloud TTS Pricing)

Documented Limitations:

- Requires Google Cloud Platform account and project setup, which adds friction for non-technical users

- Studio voices, the highest-quality tier, cost $160 per 1M characters, ten times the WaveNet and Neural2 rate

- Custom Voice feature is enterprise-only and requires Google Cloud sales engagement; not self-service

- Voice expressiveness, while improved with Studio/Journey voices, still trails the most advanced dedicated creative TTS platforms for long-form narration

- Some WaveNet voices exhibit slight metallic artifacts in longer passages

Typical Users: Developers integrating TTS into Google Cloud-hosted applications, enterprises building conversational AI with Dialogflow, organizations requiring multilingual TTS at scale within GCP infrastructure

Microsoft Azure AI Speech (Text-to-Speech)

Overview: Microsoft Azure AI Speech is the text-to-speech component of Azure’s AI Services suite, offering one of the broadest language and voice selections among cloud platform providers. The service has expanded significantly with neural voice capabilities and Custom Neural Voice (CNV), which allows enterprise customers to create bespoke synthetic voices from recorded samples.

Core Capabilities:

- 500+ neural voices across 140+ languages and locales — the largest language coverage among major cloud TTS providers

- Custom Neural Voice (CNV) for creating branded synthetic voices from recorded audio, available in Lite and Pro tiers

- Personal Voice feature for voice replication from short audio samples (with speaker consent verification)

- Real-time and batch synthesis with SSML support, including emotion and style controls via `<mstts:express-as>` tags

- Audio Content Creation Studio, a browser-based GUI for non-developers to produce voiceover content without code

- Pronunciation customization via lexicons and phonetic markup
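
The style controls mentioned in the list above are expressed in SSML. The sketch below builds a small document that wraps text in an `mstts:express-as` element and parses it locally to confirm it is well-formed. The voice `en-US-JennyNeural`, the style `cheerful`, the helper name, and the `mstts` namespace URI are assumptions based on commonly published examples; supported styles differ by voice, so verify them against the current voice list.

```python
import xml.etree.ElementTree as ET
from xml.sax.saxutils import escape

SSML_NS = "http://www.w3.org/2001/10/synthesis"
MSTTS_NS = "https://www.w3.org/2001/mstts"  # assumed namespace URI


def build_expressive_ssml(text: str, voice: str, style: str, lang: str = "en-US") -> str:
    """Wrap text in <mstts:express-as> inside a <voice> element."""
    return (
        f'<speak version="1.0" xmlns="{SSML_NS}" '
        f'xmlns:mstts="{MSTTS_NS}" xml:lang="{lang}">'
        f'<voice name="{voice}">'
        f'<mstts:express-as style="{style}">{escape(text)}</mstts:express-as>'
        f"</voice></speak>"
    )


ssml = build_expressive_ssml(
    "Thanks for calling & welcome back!",
    voice="en-US-JennyNeural",  # assumed example voice
    style="cheerful",           # assumed example style
)

# Parse the result to confirm it is well-formed XML before sending it anywhere.
root = ET.fromstring(ssml)
express = root.find(f".//{{{MSTTS_NS}}}express-as")
print(express.get("style"), "->", express.text)
```

Escaping the input text matters here because characters such as `&` or `<` in a script would otherwise produce invalid SSML.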

Deployment: Cloud-based via Azure. On-premise deployment available through Azure AI Speech containers for latency-sensitive or data-residency-restricted environments.

Integration Ecosystem: RESTful API, WebSocket API, SDKs for C#, C++, Java, JavaScript, Python, Swift, Objective-C, Go. Integrations with Microsoft 365, Azure Bot Service, Azure Communication Services, Power Platform

Pricing Approach: Pay-as-you-go per character. Neural voices at $16 per 1M characters. Custom Neural Voice Pro training starts at $48/model/hour. Free tier includes 500K neural characters/month (ongoing). (Azure Speech Pricing)

Documented Limitations:

- Custom Neural Voice requires a formal application and approval process, including voice talent consent verification — not self-service

- The breadth of 500+ voices means quality is uneven; some lesser-used language voices are noticeably less natural than English or major European language options

- Azure account and resource group setup adds configuration overhead for new users

- Personal Voice feature requires enrollment consent and has stricter usage policies

- On-premise container deployment requires Azure Arc-enabled subscription and has limited voice availability compared to cloud

Typical Users: Enterprise development teams building multilingual voice experiences, organizations in regulated industries needing on-premise TTS deployment, Microsoft ecosystem users leveraging Azure for integrated AI services, contact center operators using Azure Communication Services

OpenAI TTS

Overview: OpenAI offers text-to-speech capabilities through its API, providing a small selection of high-quality preset voices designed for integration into applications and workflows. Unlike dedicated TTS platforms with hundreds of voices, OpenAI’s approach prioritizes simplicity and quality over voice selection breadth, positioning TTS as one component within its broader AI API offering alongside GPT models and DALL-E.

Core Capabilities:

- Two model variants: `tts-1` (optimized for low latency, suitable for real-time applications) and `tts-1-hd` (higher quality, optimized for production audio)

- 6 preset voices (Alloy, Echo, Fable, Onyx, Nova, Shimmer) with distinct tonal characteristics

- Multi-language support inferred from input text (no explicit language parameter needed)

- Streaming audio output for real-time applications

- Output formats: MP3, Opus, AAC, FLAC, WAV, PCM
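
Because the service is API-only, a request is essentially a model name, a voice, and the input text. The following sketch prepares such a request with the standard library but does not send it. The helper name and the placeholder key are hypothetical, and the endpoint path and field names reflect the publicly documented speech endpoint as understood at the time of writing; confirm them against the current API reference.

```python
import json
import urllib.request

VOICES = {"alloy", "echo", "fable", "onyx", "nova", "shimmer"}
MODELS = {"tts-1", "tts-1-hd"}


def build_speech_request(text: str, api_key: str, voice: str = "alloy",
                         model: str = "tts-1", fmt: str = "mp3") -> urllib.request.Request:
    """Prepare (but do not send) a POST to the speech endpoint."""
    if voice not in VOICES:
        raise ValueError(f"unknown voice: {voice}")
    if model not in MODELS:
        raise ValueError(f"unknown model: {model}")
    payload = {"model": model, "input": text, "voice": voice, "response_format": fmt}
    return urllib.request.Request(
        "https://api.openai.com/v1/audio/speech",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


req = build_speech_request("Hello from the docs.", api_key="sk-placeholder", voice="nova")
print(req.get_method(), req.full_url)
print(json.loads(req.data))
# Sending would be: urllib.request.urlopen(req).read(), which returns audio bytes.
```

Note that the payload has no language field, consistent with the point above that language is inferred from the input text.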

Deployment: Cloud-based API only. No standalone interface or studio.

Integration Ecosystem: OpenAI API, Python and Node.js SDKs, compatible with any application capable of making HTTP requests. No native integrations with specific production tools.

Pricing Approach: Per-character pricing via API credits. tts-1 at $15.00 per 1M characters, tts-1-hd at $30.00 per 1M characters. No free tier for TTS specifically; subject to OpenAI API account credit allocation. (OpenAI TTS Pricing)

Documented Limitations:

- Only 6 preset voices — extremely limited compared to competitors offering hundreds or thousands

- No voice cloning, custom voice creation, or voice customization beyond model and voice selection

- No SSML support — users cannot control pronunciation, emphasis, pauses, or speaking rate at a granular level

- No dedicated TTS studio or GUI; API-only access means non-developers have no direct way to use the service

- Pricing is at or above comparable cloud platform rates: tts-1 at $15/1M is close to Amazon Polly Neural at $16/1M, while tts-1-hd at $30/1M is nearly double, and Polly offers more voices and SSML control

Typical Users: Developers integrating voice output into GPT-powered applications, teams building quick prototypes requiring voice capabilities, organizations already using OpenAI’s API ecosystem seeking a simple TTS add-on

PlayAI (formerly PlayHT) — Discontinued

Overview: PlayAI (originally PlayHT) was a voice AI startup specializing in ultra-realistic conversational speech synthesis and voice cloning. Founded in 2020 by Mahmoud Felfel and Hammad Syed, the company developed Play Dialog, a multi-turn conversational speech model that performed strongly in independent evaluations. In July 2025, Meta acquired PlayAI, bringing the entire 35-person team into Meta’s AI division under Johan Schalkwyk. Meta shut down PlayAI’s standalone SaaS platform in late 2025, with the data export window closing on December 31, 2025.

Status as of February 2026: PlayAI is no longer available as an independent product. The technology is being integrated into Meta’s broader AI ecosystem — including Meta AI, AI Characters, Ray-Ban Meta smart glasses, and audio content creation tools. Legacy accounts are closed, and cloud-hosted projects have been purged. Former PlayAI users have migrated primarily to ElevenLabs, Resemble AI, and Fish Audio.

Why This Entry Is Included: PlayAI is listed in this analysis because it was a notable platform in the AI voice generation space through 2025 and continues to appear in comparative searches. Its acquisition illustrates a broader market trend: standalone voice synthesis startups being absorbed by major technology companies as voice AI becomes strategic infrastructure.

Key Pre-Acquisition Capabilities (Historical):

- Play Dialog: multi-turn conversational speech model trained on hundreds of millions of conversations

- Voice cloning from short audio samples with emotion preservation

- Support for 140+ languages with cross-lingual voice cloning

- API-first architecture with sub-second latency for real-time applications

- Prior pricing: subscription plans starting at $29/month with pay-as-you-go API options

Fliki

Overview: Fliki is an AI-powered text-to-video and text-to-speech platform that combines voice generation with automated video creation. The platform targets content creators, marketers, and educators who need to produce video content with voiceovers from text scripts, blog posts, or simple prompts, without requiring video editing expertise or voice talent.

Core Capabilities:

- Text-to-speech with 2,500+ AI voices across 80+ languages and 100+ dialects

- Text-to-video creation that automatically matches visuals (from 10M+ stock media library) to script content

- Idea-to-video feature that generates complete videos from simple text prompts

- Voice cloning capability for personalized voiceover production

- Blog-to-video conversion for repurposing written content

- Template library for social media formats (TikTok, Instagram Reels, YouTube Shorts)

Deployment: Cloud-based SaaS platform (browser-based editor)

Integration Ecosystem: Web-based standalone platform with direct export to major video and audio formats (MP4, MP3). No public API currently available for external integration.

Pricing Approach: Freemium model. Free tier includes 5 minutes of audio/video content per month with watermark. Paid plans start at approximately $14/month (Standard) with higher tiers for increased generation minutes and premium features. (Fliki Pricing)

Documented Limitations:

- TTS is bundled within a video creation platform — not available as a standalone voice generation tool or API

- Credit-based system can be confusing, and credits are consumed by both voice generation and video rendering

- Voice quality, while generally praised, varies across the 2,500+ voice library — premium voices noticeably outperform free-tier options

- Video editing capabilities are functional but limited compared to dedicated video editors

- AI-generated visuals don’t always match script content accurately, sometimes requiring manual scene adjustment

- User reviews on Capterra note that AI voices occasionally skip words, wasting credits on regeneration

Typical Users: Social media marketers producing short-form video content, bloggers repurposing written content into video, small businesses creating promotional videos without production teams, educators building visual learning materials

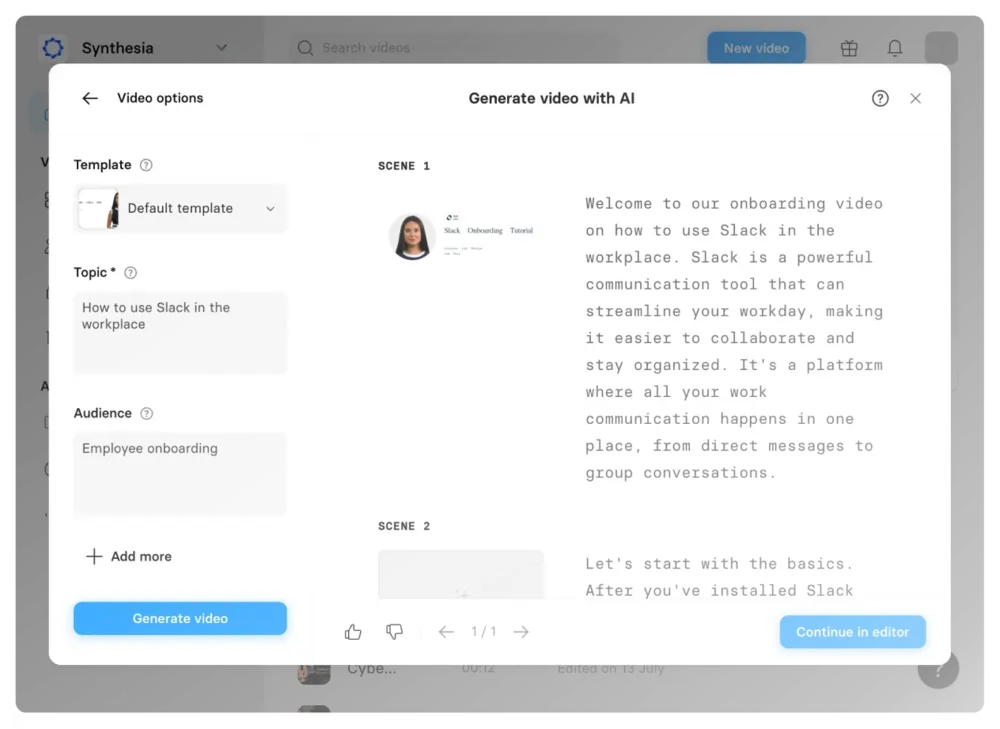

Synthesia

Overview: Synthesia is primarily an AI video generation platform known for its digital avatar technology, but it includes text-to-speech capabilities as an integrated component of its video creation workflow. Founded in 2017 and headquartered in London, Synthesia has raised over $200 million in funding and reports that more than 50,000 companies use its platform. The TTS component is not sold separately — it functions within the avatar video generation pipeline.

Core Capabilities:

- Text-to-speech integrated with 240+ AI avatar presenters in generated videos

- 130+ voices across 140+ languages with multilingual dubbing capabilities

- Voice cloning for creating custom presenter voices that match avatar appearance

- Adjustable speech pacing and emphasis within the video script editor

- Automated lip-sync between generated voice and avatar facial movements

- SOC 2 Type II and ISO 42001 compliance certifications

Deployment: Cloud-based SaaS platform with enterprise API access

Integration Ecosystem: API for programmatic video generation, integrations with LMS platforms (SCORM export), PowerPoint import, Zapier, and custom enterprise connectors

Pricing Approach: Subscription-based. Starter plan at $29/month (billed annually). Enterprise plan with custom pricing includes advanced features, SSO, and dedicated support. No free tier; free trial available. (Synthesia Pricing)

Documented Limitations:

- TTS is not available as a standalone audio product — voice generation is locked within the video creation workflow

- Voice selection is smaller (130+ voices) than dedicated TTS platforms offering 500+

- Voice quality is optimized for avatar video narration rather than standalone audio production (audiobooks, podcasts)

- Video generation includes a content moderation review process that can add processing time

- Pricing reflects the full video generation platform cost, making it expensive if the primary need is voice generation alone

Typical Users: Enterprise L&D teams producing training videos, corporate communications teams creating internal video content, marketing teams generating multilingual product explainer videos — rather than users whose primary need is standalone TTS or audio production

Cross-Category Patterns and Observations

Shared Capabilities Across Platforms

Certain features have become baseline across most evaluated platforms. Neural TTS engines are now standard — every platform in this analysis offers at least one neural voice option, and purely concatenative synthesis has been relegated to legacy tiers. SSML support (with varying depth) is available on all cloud platform services and most dedicated TTS tools. Multi-language support is universal, though the quality gap between English and lower-resource languages remains significant across all platforms.

Persistent Limitations Across the Category

Several challenges remain category-wide rather than platform-specific. Voice consistency over long-form content (10+ minutes of continuous narration) continues to be a weakness — even the most advanced platforms exhibit subtle drift in prosody, pacing, or pronunciation over extended passages. Emotional range, while improved, remains limited compared to professional human voice talent; most platforms handle neutral and conversational tones well but struggle with complex emotional registers like sarcasm, irony, or subtle warmth.

Non-English quality varies dramatically. Platforms advertising “100+ languages” typically deliver excellent results in 5–10 major languages, acceptable quality in another 20–30, and notably weaker output in the remainder. Users requiring production-quality output in less commonly supported languages should test extensively before committing.

Pricing Model Fragmentation

The category lacks pricing standardization, which makes cross-platform cost comparison unusually difficult. Per-character pricing (Amazon Polly, Google Cloud, Azure, OpenAI), per-minute pricing (some creative platforms), per-seat subscription pricing (WellSaid Labs, Murf AI), freemium with character caps (ElevenLabs, Speechify), and bundled pricing within broader platforms (Fliki, Descript, Synthesia) all coexist. For high-volume users, per-character cloud pricing can be significantly cheaper than subscription models, but for low-volume users with creative needs, subscription plans often provide better value through studio interfaces and pre-built voice libraries.
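
One way to compare a quota-based subscription with per-character pricing is to convert the plan into an effective rate per 1M characters. The sketch below does that for a hypothetical plan; the $29 price and 500,000-character quota are illustrative assumptions, not figures for any specific vendor, and the comparison ignores the studio features a subscription bundles.

```python
def effective_rate_per_million(plan_price_usd: float, included_characters: int) -> float:
    """Convert a flat plan with a character quota into USD per 1M characters."""
    return round(plan_price_usd / included_characters * 1_000_000, 2)


# Hypothetical subscription: $29/month with a 500,000-character quota.
subscription_rate = effective_rate_per_million(29.0, 500_000)
cloud_neural_rate = 16.00  # USD per 1M characters, as quoted in this article

print(f"Subscription: ${subscription_rate:.2f} per 1M characters")
print(f"Cloud neural: ${cloud_neural_rate:.2f} per 1M characters")
print(f"Ratio: {subscription_rate / cloud_neural_rate:.1f}x")
```

Under these assumed numbers the subscription costs several times more per character, which illustrates why high-volume users tend toward cloud pricing while low-volume users may still prefer the bundled tooling.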

The Creative-Infrastructure Divide

The most significant structural pattern in the 2026 AI voice market is the widening divide between creative TTS platforms (ElevenLabs, Murf AI, WellSaid Labs, LOVO AI) and infrastructure TTS services (Amazon Polly, Google Cloud, Azure). Creative platforms prioritize voice expressiveness, studio editing interfaces, and production-ready output. Infrastructure services prioritize scalability, API depth, ecosystem integration, and per-character cost efficiency. Attempting to use an infrastructure service for creative production — or a creative platform for high-throughput API integration — typically leads to suboptimal results.

Consolidation Trajectory

The PlayAI acquisition by Meta and Google’s hiring of key Hume AI talent indicate that standalone voice synthesis is increasingly viewed as a feature to be absorbed into larger platforms rather than sustained as an independent product category. According to Gartner’s analysis of the AI landscape, voice synthesis is expected to become embedded infrastructure within broader AI platforms by 2028, which has implications for organizations selecting standalone TTS vendors today — long-term platform viability should factor into procurement decisions.

Emerging Capabilities: Real-Time Conversational TTS

The most significant capability shift in 2026 is the emergence of real-time conversational voice AI as a distinct product category, led by ElevenLabs’ ElevenAgents platform and supported by Deepgram’s low-latency speech infrastructure. This moves TTS from a content production tool (generating audio files from text) to an interactive communication layer (generating speech in real-time during live conversations). Organizations evaluating TTS platforms should consider whether their roadmap includes conversational voice applications, as platforms optimized for batch content creation may not serve real-time use cases, and vice versa.

How to Choose the Right AI Voice Generator

Selecting an AI voice generator requires matching platform capabilities to specific workflow requirements, budget constraints, and deployment context. The following framework organizes key decision factors by user priority. No single platform is optimal for all use cases — the most effective choice depends on identifying which dimensions matter most for a given scenario.

Key Questions to Ask Before Choosing

What is the primary use case? Content creation (voiceovers, podcasts, audiobooks) points toward dedicated creative TTS platforms with studio interfaces and large voice libraries. Application integration (voice-enabled apps, chatbots, IVR systems) points toward API-first or cloud platform services. Video production with embedded narration points toward integrated suites like Fliki, Descript, or Synthesia. Understanding the use case before evaluating features prevents selecting a platform optimized for the wrong workflow.

What is the expected volume and pricing tolerance? Per-character cloud pricing (Amazon Polly, Google Cloud, Azure) is typically most cost-effective for high-throughput API use at scale. Subscription models (ElevenLabs, Murf AI, WellSaid Labs) are more predictable for production teams with consistent monthly output. Free tiers vary dramatically in generosity, from 10,000 characters/month (ElevenLabs) to 5 million standard characters/month (Amazon Polly, first 12 months). Comparing costs requires normalizing to a common unit, because platforms meter usage variously in characters, minutes, words, or credits.

How critical is voice quality versus voice variety? ElevenLabs and WellSaid Labs lead in voice expressiveness and naturalness for English-language production. Cloud platforms (Google Cloud, Azure) lead in language breadth and voice count. OpenAI TTS offers only 6 voices but with consistent high quality. The trade-off between depth (quality per voice) and breadth (number of voices and languages) is real and platform-dependent.

Is voice cloning required? Not all platforms offer voice cloning, and those that do vary in approach. ElevenLabs and Resemble AI offer instant cloning from short samples. Azure requires a formal application process with consent verification. Amazon Polly and Google Cloud TTS do not offer user-initiated voice cloning. OpenAI TTS provides no voice cloning at all. For brand voice consistency, cloning capability may be a decisive factor.

What is the technical environment? Teams already on AWS, GCP, or Azure benefit from native TTS services that share billing, IAM, monitoring, and deployment infrastructure. Teams without cloud platform commitments have broader flexibility. Non-technical users need platforms with studio interfaces (ElevenLabs, Murf AI, LOVO AI, Descript) rather than API-only services.

Decision Matrix Template

| Decision Factor | Creative TTS Platforms | API-First Services | Cloud Platform TTS | Integrated Suites |

|---|---|---|---|---|

| Best for | Content production | Application integration | Enterprise infrastructure | Multimedia workflows |

| Voice quality | Highest for English | High, specialized | Good to excellent | Good (secondary feature) |

| Voice variety | Medium to large | Varies | Largest selection | Small to medium |

| Pricing | Subscription | Per-use | Per-character | Bundled subscription |

| Technical skill needed | Low to medium | Medium to high | Medium to high | Low |

| Voice cloning | Often available | Sometimes | Azure only (with approval) | Varies |

| SSML support | Limited | Varies | Full | Limited |

| Ecosystem integration | Limited | Moderate | Deep (within platform) | Self-contained |
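
For teams that want to apply the matrix programmatically, a minimal sketch is to store a few rows as data and filter on required values. Only three factors are transcribed here, and the function name `shortlist` and the exact-match rule are assumptions made for illustration.

```python
# Rows transcribed from the decision matrix above (a subset of factors).
MATRIX = {
    "Creative TTS Platforms": {"pricing": "Subscription", "skill": "Low to medium", "ssml": "Limited"},
    "API-First Services": {"pricing": "Per-use", "skill": "Medium to high", "ssml": "Varies"},
    "Cloud Platform TTS": {"pricing": "Per-character", "skill": "Medium to high", "ssml": "Full"},
    "Integrated Suites": {"pricing": "Bundled subscription", "skill": "Low", "ssml": "Limited"},
}


def shortlist(**required: str) -> list[str]:
    """Return categories whose matrix cells match every required value exactly."""
    return [
        name for name, row in MATRIX.items()
        if all(row.get(factor) == value for factor, value in required.items())
    ]


print(shortlist(ssml="Full"))
print(shortlist(skill="Low"))
```

Exact matching is deliberately strict; cells such as "Varies" signal that the individual product, not the category, has to be checked.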

Frequently Asked Questions

What is an AI voice generator and how does it work?

An AI voice generator is software that converts written text into synthetic spoken audio using neural network models. Modern systems use architectures such as Tacotron, FastSpeech, and WaveNet-derived models to generate speech waveforms directly from text input. According to MarketsandMarkets, the global AI voice generator market was valued at approximately USD 4.16 billion in 2025 and is projected to reach USD 20.71 billion by 2031. These systems analyze text for context, apply learned patterns of human prosody and intonation, and produce audio output that increasingly approximates natural speech.

Are AI-generated voices legal to use commercially?

Commercial usage legality depends on the specific platform’s licensing terms and the jurisdiction of use. Most paid TTS platforms (ElevenLabs, Murf AI, WellSaid Labs, cloud providers) grant commercial usage rights within their paid tiers. Free tiers often restrict commercial use or require attribution. Voice cloning introduces additional legal considerations — using someone’s voice without consent may violate right-of-publicity laws in many jurisdictions. The EU AI Act will require marking AI-generated audio content in machine-readable formats starting August 2026, and over 45 U.S. states have enacted deepfake-related legislation. Users should review each platform’s commercial licensing terms and applicable local regulations.

Which AI voice generator sounds most realistic in 2026?

Voice realism varies by use case, language, and voice selection. For English-language creative production, ElevenLabs and WellSaid Labs consistently receive the highest naturalness ratings in independent evaluations, including benchmarks published by Artificial Analysis. For conversational AI applications, ElevenLabs’ Turbo models and Deepgram’s low-latency engines are leading. Among cloud providers, Google Cloud’s Studio voices and Azure’s latest neural voices approach production quality. OpenAI TTS offers consistently natural output but with only 6 voice options. No single platform produces universally “best” voices — testing with actual content samples is essential.

How much does AI voice generation cost?

Costs vary significantly by platform and pricing model. Per-character cloud services (Amazon Polly, Google Cloud, Azure) range from $4.00 to $160.00 per million characters depending on voice engine tier. Subscription creative platforms range from $5/month (ElevenLabs Starter) to $199/month (WellSaid Labs Creator). Enterprise plans with custom pricing, voice cloning, and SSO typically require sales consultation. Free tiers range from 10,000 characters/month (ElevenLabs) to 5 million standard characters/month (Amazon Polly, first 12 months). For context, 1 million characters produces approximately 15–20 hours of audio, depending on speaking rate.
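
The hours-per-million-characters figure can be sanity-checked with simple arithmetic. The sketch below assumes an average of 6 characters per word (including the trailing space) and a few plausible narration speeds; both values are assumptions, and real output depends on language, punctuation, and voice.

```python
def audio_hours(characters: int, words_per_minute: float = 150.0,
                chars_per_word: float = 6.0) -> float:
    """Estimate narration length in hours; both rate defaults are assumptions."""
    chars_per_minute = words_per_minute * chars_per_word
    return characters / chars_per_minute / 60


for wpm in (140, 150, 180):
    hours = audio_hours(1_000_000, words_per_minute=wpm)
    print(f"{wpm} wpm: {hours:.1f} hours per 1M characters")
```

Across these assumed speaking rates the estimate stays within the 15–20 hour range, so dividing a per-million price by roughly 18 gives a workable cost per hour of audio.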

Can AI voice generators clone my voice?

Several platforms offer voice cloning with varying approaches. ElevenLabs provides instant cloning from short samples (1–5 minutes of audio) and Professional Voice Cloning (PVC) for higher fidelity. Resemble AI offers cloning from approximately 3 minutes of recorded audio with emotion control. Microsoft Azure’s Custom Neural Voice requires a formal application process and recorded consent from the voice talent. Descript’s Overdub feature clones voices for correction and extension within its editing platform. Amazon Polly, Google Cloud TTS, and OpenAI TTS do not offer user-initiated voice cloning. All platforms with cloning capability require some form of consent verification, reflecting industry-wide adoption of ethical AI voice practices.

What languages do AI voice generators support?

Language coverage ranges from English-only (WellSaid Labs) to 140+ languages (Microsoft Azure, Synthesia). ElevenLabs supports 32 languages with strong cross-lingual voice cloning. Cloud platforms typically lead in language count: Azure at 140+, Google Cloud at 40+, Amazon Polly at 29. However, output quality varies significantly beyond major languages. According to Grand View Research, the media and entertainment sector drives demand for multilingual TTS, but production-quality output in lower-resource languages remains inconsistent across all platforms as of 2026.

What happened to PlayAI (PlayHT)?

PlayAI was acquired by Meta in July 2025. The entire 35-person team joined Meta’s AI division, and Meta shut down PlayAI’s standalone SaaS platform in late 2025. The data export window closed on December 31, 2025, and legacy accounts are now inaccessible. PlayAI’s technology is being integrated into Meta’s AI ecosystem, including Meta AI, AI Characters, and Ray-Ban Meta smart glasses. Former PlayAI users have migrated primarily to ElevenLabs, Resemble AI, and Fish Audio. The acquisition reflects a broader consolidation trend in the voice AI market.

Do I need technical skills to use an AI voice generator?

It depends on the platform type. Dedicated creative TTS platforms (ElevenLabs, Murf AI, LOVO AI, Listnr) and integrated suites (Fliki, Descript) offer browser-based studio interfaces that require no coding or technical setup. Cloud platform services (Amazon Polly, Google Cloud TTS, Azure) and API-first tools (OpenAI TTS) require developer skills for setup and integration. Microsoft Azure’s Audio Content Creation Studio is a notable exception — it provides a GUI for non-developers within an otherwise developer-oriented service.

How do AI voice generators handle data privacy?

Data handling varies by provider. Enterprise-focused platforms (WellSaid Labs, Synthesia) hold SOC 2 Type II compliance. Cloud providers (AWS, Google Cloud, Azure) operate under their respective platform compliance frameworks, which include GDPR, HIPAA, and FedRAMP options. Azure offers on-premise deployment via Speech containers for organizations with strict data residency requirements. For voice cloning, platforms are increasingly requiring consent verification — ElevenLabs uses a voice consent protocol, Azure requires signed consent documentation, and Resemble AI includes built-in watermarking and deepfake detection (Resemblyzer). Users processing personal data (voice recordings) should verify each platform’s data processing agreements against applicable privacy regulations.

What is the difference between Standard, Neural, and Generative TTS voices?

These tiers represent different underlying speech synthesis technologies. Standard voices use concatenative synthesis (assembling pre-recorded speech segments), producing functional but noticeably robotic output. Neural voices use deep learning models to generate speech waveforms from scratch, producing significantly more natural prosody and intonation. Generative voices (offered by Amazon Polly and ElevenLabs) use the latest transformer-based and diffusion models for the most expressive output, including emotional variation and contextual awareness. Pricing typically scales with quality tier — Amazon Polly’s Standard voices cost $4/1M characters versus $16/1M for Neural. The quality difference between Standard and Neural is substantial; between Neural and Generative, it is noticeable but less dramatic.

Will AI voice generators replace human voice actors?

As of 2026, AI voice generation is complementing rather than fully replacing human voice talent. AI voices excel at scalable production tasks — localization, corporate training narration, IVR systems, content repurposing — where speed and cost are primary factors. Human voice actors continue to lead in performance requiring complex emotional range, character acting, and artistic interpretation. According to industry analysis from MarketsandMarkets, the market’s 30.7% projected CAGR suggests expanding use cases rather than direct displacement, with AI handling high-volume, standardized voice work while human talent focuses on premium, performance-driven production.

Key Takeaways

- The AI voice generator market is projected to grow from USD 4.16 billion (2025) to USD 20.71 billion (2031) — this 30.7% CAGR, according to MarketsandMarkets, reflects expanding enterprise adoption and the emergence of real-time conversational voice AI as a new product category alongside traditional content narration.

- A structural divide separates creative TTS platforms from infrastructure TTS services: ElevenLabs, Murf AI, and WellSaid Labs optimize for voice expressiveness and production workflows, while Amazon Polly, Google Cloud, and Azure optimize for scalability, API depth, and ecosystem integration. Choosing a creative platform for high-throughput API work, or an infrastructure service for creative production, typically leads to mismatched expectations.

- PlayAI’s acquisition by Meta and Google’s Hume AI hiring signal ongoing consolidation — standalone voice synthesis is increasingly being absorbed into major technology platforms, which has long-term implications for vendor selection and platform viability.

- The EU AI Act’s Article 50 transparency requirements, enforceable from August 2026, will require machine-readable marking of AI-generated audio — organizations producing synthetic voice content should begin evaluating watermarking and content provenance capabilities as part of their platform selection criteria.

- Voice quality in 2026 varies significantly by language, voice engine tier, and use case — platforms advertising 100+ languages typically deliver production-quality results in only 5–10 major languages, and the gap between Standard, Neural, and Generative voice tiers remains substantial in both quality and cost.

This analysis was last updated February 2026. The AI voice generation market evolves rapidly; readers should verify current pricing and features directly with vendors.