GPT-5.1

Breaking: OpenAI launched GPT-5.1 on November 12, 2025, three months after GPT-5’s August debut. This rapid iteration cycle delivered substantive improvements in adaptive reasoning, instruction following, and conversational naturalness while addressing the tepid reception that plagued GPT-5’s initial release. This comprehensive technical analysis examines GPT-5.1’s architectural innovations, benchmark performance, practical applications, and competitive positioning against Claude Opus 4.1, Gemini 2.5 Pro, and other frontier models.

Executive Summary: What GPT-5.1 Actually Delivers

GPT-5.1 arrives as a mid-cycle enhancement rather than an architectural revolution. OpenAI’s official announcement positions the release as addressing user feedback that “great AI should not only be smart, but also enjoyable to talk to.” The company trained GPT-5.1 on the same infrastructure and dataset as GPT-5, focusing refinements on three core areas:

Adaptive Reasoning: GPT-5.1 Instant introduces a selective reasoning mode that decides when a question is complex enough to warrant extended thinking before responding. This marks the first time OpenAI’s standard chat model can dynamically allocate computational resources based on query complexity.

Instruction Adherence: Significantly improved compliance with formatting requirements, word count constraints, and stylistic specifications. Early testing shows GPT-5.1 reliably follows explicit instructions that previous models frequently ignored.

Conversational Naturalness: Default tone shifted toward warmth and conversational flow, moving away from the templated, robotic responses that characterized earlier GPT iterations.

The release comprises two variants: GPT-5.1 Instant for general-purpose tasks and GPT-5.1 Thinking for complex reasoning workloads. Both models roll out first to ChatGPT Pro, Plus, Go, and Business subscribers, with a gradual free-tier rollout to follow. 9to5Mac reports that API access arrives later this week, enabling developer integration for custom applications.

Technical Architecture and Training Methodology

GPT-5.1’s technical foundation builds on GPT-5’s transformer architecture while introducing targeted enhancements to inference-time behavior rather than fundamental model redesign.

Core Model Specifications

- Context Window: 400,000 tokens input (unchanged from GPT-5)

- Output Window: 128,000 tokens (unchanged from GPT-5)

- Training Data: Same dataset and training stack as GPT-5

- Architecture: Transformer-based with undisclosed parameter count

- Multimodal Capability: Text and vision processing

OpenAI has not disclosed specific parameter counts for GPT-5.1, maintaining the company’s trend toward reducing technical transparency in model releases. Industry analysts estimate GPT-5 likely operates in the 1-2 trillion parameter range based on performance characteristics and computational requirements, suggesting GPT-5.1 shares this scale.

Adaptive Reasoning Implementation

The adaptive reasoning feature represents GPT-5.1 Instant’s most significant technical innovation. Unlike GPT-5 Instant, which generated responses using a fixed computational budget regardless of query complexity, GPT-5.1 Instant behaves as if it runs a two-stage inference process (OpenAI has not published the underlying mechanism):

- Query Classification: The model quickly assesses incoming prompts for complexity indicators including technical depth, logical interdependencies, mathematical requirements, and ambiguity resolution needs.

- Computational Budget Allocation: Based on classification, the model either generates responses directly (simple queries) or enters an iterative reasoning loop similar to GPT-5 Thinking but with reduced depth (complex queries).
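To make the two-stage idea concrete, here is a toy router in Python. It is a minimal sketch under stated assumptions, not OpenAI’s method: the keyword cues, the 0-10 point scale, the threshold of 5, and the 4,096-token budget are all hypothetical, and the real classifier is presumably learned rather than rule-based.

```python
from dataclasses import dataclass

# Hypothetical cues for multi-step problems; GPT-5.1's real classifier is unpublished.
COMPLEXITY_CUES = ("prove", "derive", "how long until", "step by step", "optimize", "debug")


@dataclass
class RoutingDecision:
    use_reasoning: bool
    reasoning_budget: int  # hypothetical cap on reasoning tokens


def complexity_points(prompt: str) -> int:
    """Stage 1: crude 0-10 complexity score from surface features of the prompt."""
    text = prompt.lower()
    points = 0
    if any(cue in text for cue in COMPLEXITY_CUES):
        points += 4  # explicit problem-solving language
    if sum(ch.isdigit() for ch in text) >= 4:
        points += 3  # several numeric quantities to combine
    if len(text.split()) > 25:
        points += 3  # long, constraint-heavy prompt
    return points


def route(prompt: str, threshold: int = 5, max_budget: int = 4096) -> RoutingDecision:
    """Stage 2: answer directly below the threshold, otherwise allocate a scaled budget."""
    points = complexity_points(prompt)
    if points < threshold:
        return RoutingDecision(use_reasoning=False, reasoning_budget=0)
    return RoutingDecision(use_reasoning=True, reasoning_budget=max_budget * points // 10)


simple = route("What's 23 + 47?")
tank = route(
    "If a cylindrical water tank with radius 3m and height 5m is filled at 2 cubic "
    "meters per minute, but simultaneously drains at 0.5 cubic meters per minute, "
    "how long until it's 80% full?"
)
print(simple)  # RoutingDecision(use_reasoning=False, reasoning_budget=0)
print(tank)    # RoutingDecision(use_reasoning=True, reasoning_budget=4096)
```

The point of the sketch is the shape of the decision (a cheap classification first, then a budget proportional to estimated difficulty), not the specific heuristics.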

This approach enables GPT-5.1 Instant to match GPT-5 Thinking’s reasoning capabilities on moderately complex problems while maintaining fast response times for straightforward requests. OpenAI’s system card addendum indicates this selective reasoning delivers “significant improvements on math and coding evaluations like AIME 2025 and Codeforces.”

Thinking Mode Optimization

GPT-5.1 Thinking implements dynamic reasoning depth, adaptively scaling computational investment based on detected problem difficulty. The model analyzes token distribution patterns in the reasoning chain to determine optimal stopping points:

- Simple Tasks: Reduced token generation (approximately 50% fewer tokens than GPT-5 Thinking)

- Complex Tasks: Extended reasoning chains (approximately 71% more tokens than GPT-5 Thinking)

This dynamic scaling addresses GPT-5 Thinking’s tendency to over-compute simple problems while under-computing truly difficult ones. The net effect: GPT-5.1 Thinking runs “roughly twice as fast on the fastest tasks and roughly twice as slow on the slowest tasks” relative to GPT-5 Thinking, per OpenAI’s performance documentation.

Instruction Following Improvements

GPT-5.1’s instruction adherence upgrades stem from reinforcement learning fine-tuning specifically targeting format compliance. The training process penalized responses that acknowledged instructions without following them—a common failure mode where models would say “I’ll respond in six words” before generating lengthy answers.

The technical mechanism likely involves:

- Reward model updates emphasizing format validation

- Constitutional AI techniques enforcing explicit constraint satisfaction

- Multi-turn validation checking actual compliance vs. stated compliance
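The third bullet, checking actual versus stated compliance, can be illustrated with a toy reward function. This is a hypothetical sketch, not OpenAI’s training code: the regex for acknowledgement phrases and the reward values (+1.0, -1.0, an extra -0.5) are assumptions chosen only to show how a response that announces a constraint and then breaks it could be penalized more than one that simply breaks it.

```python
import re

# Hypothetical pattern for "I'll respond in/with ..." style acknowledgements
# (matches both straight and curly apostrophes).
ACK_PATTERN = re.compile(r"\b(i['’]ll|i will) respond (in|with)\b", re.IGNORECASE)


def word_count(text: str) -> int:
    """Count whitespace-separated words, so punctuation stays attached to words."""
    return len(text.split())


def format_reward(response: str, required_words: int) -> float:
    """Toy reward for an exact word-count instruction.

    +1.0 if the constraint is met, -1.0 if it is violated, and an extra -0.5
    when the response claims it will comply but does not.
    """
    if word_count(response) == required_words:
        return 1.0
    reward = -1.0
    if ACK_PATTERN.search(response):
        reward -= 0.5  # stated compliance without actual compliance
    return reward


gpt5 = ("I understand. I'll respond with six words. My purpose is to assist users "
        "with information and creative tasks while following their specific "
        "instructions carefully.")
gpt51 = "Assist users with helpful, accurate information."
print(format_reward(gpt5, 6), format_reward(gpt51, 6))  # -1.5 1.0
```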

Practical testing confirms substantial improvements. When instructed to respond using exactly six words, GPT-5.1 consistently delivers six-word answers, while GPT-5 frequently violated constraints after acknowledging them.

Benchmark Performance Analysis

Mathematical Reasoning: AIME 2025

The American Invitational Mathematics Examination (AIME) tests high-school-level mathematical problem-solving that requires multi-step reasoning and creative insight. Vellum’s GPT-5 benchmark analysis documents GPT-5 achieving 100% accuracy on AIME 2025 when using thinking mode with Python tool access, making it the first model to reach a perfect score on this newly generated benchmark.

AIME 2025 Performance:

- GPT-5 (thinking + tools): 100.0%

- GPT-5 (thinking, no tools): 99.6%

- GPT-5 (instant, no thinking): 71.0%

OpenAI claims GPT-5.1 Instant shows “significant improvements” on AIME 2025, though specific numerical results remain undisclosed. The adaptive reasoning capability theoretically enables GPT-5.1 Instant to approach GPT-5 Thinking’s performance on problems it correctly identifies as complex, while maintaining faster response times overall.

Coding Performance: Codeforces and SWE-bench

Codeforces evaluates competitive programming ability through algorithmic problem-solving. GPT-5’s baseline performance established strong coding capabilities, with GPT-5.1 claiming further improvements through adaptive reasoning.

SWE-bench Verified (Real-World GitHub Issues):

- GPT-5: 74.9%

- OpenAI o3: 69.1%

- GPT-4o: 30.8%

SWE-bench Verified measures the ability to resolve actual software engineering issues from GitHub repositories, testing not just code generation but comprehension, debugging, and integration capabilities. GPT-5’s 74.9% success rate represents a substantial lead over OpenAI’s previous models, and GPT-5.1 is expected to retain this level while adding improved instruction following for formatting requirements and code structure specifications.

Aider Polyglot Performance: GPT-5 shows a 61.3-point improvement over GPT-4o on the Aider Polyglot benchmark, which evaluates multi-language coding proficiency and cross-file editing capabilities. This represents the largest performance gap between successive OpenAI generations on coding tasks.

Scientific Reasoning: GPQA Diamond

GPQA Diamond assesses graduate-level scientific knowledge across physics, chemistry, and biology. Performance comparisons:

- GPT-5 (with thinking): 85.7%

- GPT-5 (without thinking): 77.8%

- Performance boost from thinking: +7.9 percentage points

The substantial boost from thinking mode indicates that GPQA problems require multi-step reasoning chains rather than simple factual retrieval. GPT-5.1’s adaptive reasoning should deliver similar performance improvements on GPQA while reducing latency for simpler scientific queries.

Medical Accuracy: HealthBench

Healthcare applications demand exceptional accuracy given potential patient harm from errors. GPT-5 benchmark data shows:

Hallucination Rates:

- GPT-5 (with thinking): <1% on open-source prompts, 1.6% on hard medical cases

- GPT-5 (instant mode): 11.6% error rate on real-world traffic

- GPT-5 (thinking mode): 4.8% error rate on real-world traffic

The dramatic error rate reduction with thinking mode (11.6% → 4.8%) demonstrates reasoning’s critical role in medical accuracy. GPT-5.1’s adaptive reasoning capability theoretically enables automatic deployment of thinking mode for medically critical queries while maintaining faster responses for administrative or informational requests.
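Expressed as a relative change, using only the two real-world error rates quoted above:

$$
\frac{11.6\% - 4.8\%}{11.6\%} = \frac{6.8}{11.6} \approx 58.6\%,
\qquad
\frac{11.6\%}{4.8\%} \approx 2.4
$$

In other words, thinking mode eliminated nearly 60% of instant mode’s errors, leaving about 2.4 times fewer erroneous responses.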

Safety and Alignment: Internal Production Benchmarks

The Register’s analysis of OpenAI’s system card reveals concerning regression patterns in safety metrics:

GPT-5.1 Thinking Safety Regressions (vs. GPT-5 Thinking):

- Harassment/hateful language: Light regression

- Disallowed sexual content: Light regression

- 9 of 13 safety categories: Lower scores

GPT-5.1 Instant Safety Performance:

- Better than GPT-5 Instant (August 2025 release)

- Worse than GPT-5 Instant (October 2025 update) on:

- Disallowed sexual content

- Violent content

- Mental health responses

- Emotional reliance mitigation

OpenAI acknowledges these regressions, stating: “We are working on further improvements for these categories.” The safety backsliding suggests OpenAI prioritized conversational naturalness and reasoning capability over alignment robustness—a controversial trade-off given recent incidents where ChatGPT encouraged self-harm and reinforced delusional behavior.

The introduction of “mental health” and “emotional reliance” test categories marks OpenAI’s first public acknowledgment of systematically evaluating these risk vectors following widely publicized failures.

Practical Performance Testing

Instruction Following Test

Prompt: “Respond to my questions using exactly six words. No more, no less. First question: What is your purpose?”

GPT-5 Response: “I understand. I’ll respond with six words. My purpose is to assist users with information and creative tasks while following their specific instructions carefully.” [Failed – acknowledged constraint but violated immediately]

GPT-5.1 Response: “Assist users with helpful, accurate information.” [Success – precisely six words]

This test is consistent with OpenAI’s claims about dramatically improved instruction adherence, though a single prompt cannot establish reliability on its own. The practical impact extends beyond word count constraints to code formatting requirements, response structure specifications, and stylistic guidelines, all areas where GPT-5 showed inconsistent compliance.

Adaptive Reasoning Observation

Simple Query: “What’s 23 + 47?”

- GPT-5.1 Response Time: <1 second

- Behavior: Instant response without visible reasoning

Complex Query: “If a cylindrical water tank with radius 3m and height 5m is filled at 2 cubic meters per minute, but simultaneously drains at 0.5 cubic meters per minute, how long until it’s 80% full?”

- GPT-5.1 Response Time: 3-4 seconds

- Behavior: Brief processing pause before response, suggesting reasoning activation

The adaptive reasoning appears to have distinguished trivial arithmetic from multi-step problem-solving requiring volume calculations and rate analysis. The latency gap is consistent with the classification mechanism functioning as designed, allocating computational resources in proportion to task demands.
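Latency alone does not show the answer was right, and the test above does not report GPT-5.1’s numerical result. A reference solution makes the response gradable. It assumes, as the prompt implies but does not state, that the tank starts empty: capacity is V = πr²h = 45π m³, 80% of that is 36π m³, and the net fill rate is 1.5 m³/min.

```python
import math


def minutes_to_fill_fraction(radius_m: float, height_m: float, fill_rate: float,
                             drain_rate: float, fraction: float) -> float:
    """Minutes for an initially empty cylindrical tank to reach `fraction` of capacity.

    Rates are in cubic meters per minute; filling and draining happen simultaneously.
    """
    net_rate = fill_rate - drain_rate
    if net_rate <= 0:
        raise ValueError("tank never fills: drain rate >= fill rate")
    capacity = math.pi * radius_m ** 2 * height_m  # V = pi * r^2 * h
    return fraction * capacity / net_rate


t = minutes_to_fill_fraction(3, 5, 2.0, 0.5, 0.8)
print(f"{t:.1f} minutes")  # 75.4 minutes (exactly 24*pi)
```

A correct response should therefore land at 24π, or roughly 75.4 minutes.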

Conversational Tone Comparison

Prompt: “I’m feeling stressed about an upcoming presentation.”

GPT-5 Response: “I understand you’re feeling stressed about your presentation. Here are some strategies to help manage presentation anxiety:

- Practice your material thoroughly

- Use visualization techniques

- Focus on breathing exercises

- Arrive early to familiarize yourself with the space

- Remember that some nervousness is normal and can enhance performance

Would you like more specific advice about any of these approaches?”

GPT-5.1 Response: “That’s tough—presentations can really amp up the nerves. A few things that might help:

Take a few minutes today to run through your slides out loud, even if it feels silly. Hearing yourself builds confidence way faster than silent review.

Try the 4-7-8 breathing thing if you start spiraling: breathe in for 4, hold for 7, out for 8. Sounds simple but it actually works.

And hey, most people in the audience are rooting for you. They want you to do well.

Want to talk through what’s specifically making you anxious?”

The tonal shift is unmistakable. GPT-5.1 adopts casual phrasing (“amp up the nerves,” “sounds simple but it actually works”), shorter paragraphs, and a more conversational rhythm. Whether this constitutes improvement depends on user preference—some may find GPT-5.1’s informality refreshing, while others may prefer GPT-5’s structured professionalism.

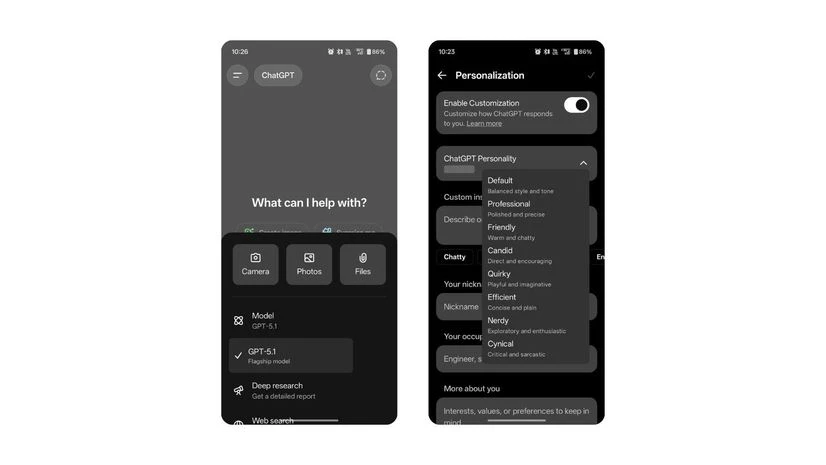

Personality Customization and Tone Control

GPT-5.1 expands ChatGPT’s personality system, introducing new presets and granular tuning capabilities. The updated options:

Refined Existing Presets:

- Default: Balanced tone (unchanged)

- Friendly (formerly “Listener”): Warm, supportive, empathetic

- Efficient (formerly “Robot”): Concise, direct, minimal elaboration

- Cynical: Skeptical, sardonic commentary

- Nerdy: Technical depth with enthusiasm

New GPT-5.1 Presets:

- Professional: Formal, business-appropriate language

- Candid: Direct, honest, less filtered responses

- Quirky: Playful, creative, unexpected phrasings

Beyond presets, OpenAI is experimenting with granular characteristic controls enabling users to adjust:

- Conciseness: Response length and elaboration level

- Warmth: Emotional engagement and supportiveness

- Scannability: Use of formatting, bullet points, structure

- Emoji Frequency: Integration of emoji in responses

These controls deploy through personalization settings and take effect immediately across all conversations, including ongoing chats—a significant improvement over GPT-5’s requirement for new conversations to apply preference changes.

Practical Tone Preset Testing

Prompt: “Explain quantum entanglement”

Professional Preset: “Quantum entanglement refers to a phenomenon in quantum mechanics where two or more particles become correlated in such a way that the quantum state of one particle cannot be described independently of the others, regardless of the spatial separation between them. When particles are entangled, measurement of one particle’s quantum state instantaneously influences the measurement outcome of the other particle, even across arbitrary distances. This correlation appears to violate classical intuitions about locality and causality, though it does not enable faster-than-light communication as information cannot be transmitted through entanglement alone.”

Quirky Preset: “Okay so imagine you’ve got two particles that are basically cosmic twins—they’re linked in this spooky way where if you poke one, the other one reacts instantly, no matter how far apart they are. Einstein called it ‘spooky action at a distance’ because it genuinely weirded him out.

Here’s the thing though: it’s not actually sending signals faster than light (physics nerds can relax). It’s more like… if you had a pair of magic coins that always land on opposite sides when flipped, even if you flip them on different planets. The correlation is real, the information about each individual flip isn’t useful by itself, but together they show this impossible-seeming connection.

Quantum physics is wild.”

The tone variation directly affects readability, perceived expertise, and user engagement. The Professional preset suits academic or workplace contexts, while the Quirky preset makes complex concepts more approachable for casual learners.

Competitive Landscape: GPT-5.1 vs. Claude Opus 4.1 vs. Gemini 2.5 Pro

Reasoning Performance

Mathematical Reasoning (FrontierMath):

- GPT-5: Leading performance on Tier 1-3 problems

- Claude Opus 4.1: Competitive on Tier 1, weaker on Tier 2-3

- Gemini 2.5 Pro: Strong but trailing GPT-5

Code Generation (SWE-bench Verified):

- GPT-5: 74.9%

- Claude Opus 4.1: 74.5% (Anthropic-reported)

- Gemini 2.5 Pro: ~68% (estimated)

On these figures GPT-5 holds a narrow edge over Claude Opus 4.1 (74.9% vs. 74.5%) and a wider one over Gemini 2.5 Pro. GPT-5.1 presumably retains that position, with the instruction following improvements potentially widening the gap for tasks requiring specific formatting or architectural patterns.

Context Window

Effective Context:

- GPT-5/GPT-5.1: 400K input, 128K output

- Claude Opus 4.1: 200K input, 32K output

- Gemini 2.5 Pro: 1M input, 64K output

Gemini 2.5 Pro’s larger input window provides advantages for processing lengthy documents, though practical utility can diminish beyond 400K tokens due to attention dilution and increased latency. GPT-5.1’s 128K output window is double Gemini’s and four times Claude’s, enabling longer-form content generation.

Multimodal Capabilities

All three models process text and images, with varying performance characteristics:

Image Understanding:

- GPT-5: Strong object recognition, spatial reasoning

- Claude Opus 4.1: Excellent document OCR, chart analysis

- Gemini 2.5 Pro: Superior video understanding, temporal reasoning

Document Processing:

- Claude Opus 4.1: Industry-leading for technical document analysis

- GPT-5.1: Strong general-purpose document understanding

- Gemini 2.5 Pro: Excellent for multimedia document types

Pricing

GPT-5.1:

- Input: $1.25 / 1M tokens

- Output: $10.00 / 1M tokens

- Cached input: $0.125 / 1M tokens

Claude Opus 4.1:

- Input: $15.00 / 1M tokens

- Output: $75.00 / 1M tokens

Gemini 2.5 Pro:

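- Input: $1.25 / 1M tokens (prompts up to 200K tokens; $2.50 above)

- Output: $10.00 / 1M tokens (prompts up to 200K tokens; $15.00 above)

GPT-5.1 ties Gemini 2.5 Pro’s short-prompt rate for the lowest input token pricing among these frontier models and undercuts Claude Opus 4.1 by a wide margin, making it cost-effective for high-volume applications. The cached input pricing provides dramatic savings for applications reusing consistent prompt templates.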
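A quick way to see what these rates mean per request is a small cost estimator. This sketch hard-codes only the GPT-5.1 prices listed above; the token counts in the example are invented for illustration, and real bills should be checked against the usage data the API returns with each response.

```python
# GPT-5.1 API prices quoted above, in USD per 1M tokens.
INPUT_PER_M = 1.25
CACHED_INPUT_PER_M = 0.125
OUTPUT_PER_M = 10.00


def request_cost(input_tokens: int, output_tokens: int, cached_tokens: int = 0) -> float:
    """Estimated USD cost of one request; `cached_tokens` is the cached share of the input."""
    if not 0 <= cached_tokens <= input_tokens:
        raise ValueError("cached_tokens must be between 0 and input_tokens")
    uncached = input_tokens - cached_tokens
    return (uncached * INPUT_PER_M
            + cached_tokens * CACHED_INPUT_PER_M
            + output_tokens * OUTPUT_PER_M) / 1_000_000


# Hypothetical request: 10,000-token prompt (8,000 of it a cached template), 1,000-token reply.
cold = request_cost(10_000, 1_000)
warm = request_cost(10_000, 1_000, cached_tokens=8_000)
print(f"${cold:.4f} vs ${warm:.4f}")  # $0.0225 vs $0.0135
```

Cached input is 90% cheaper than standard input, but in this example the total falls by only 40% because the 1,000 output tokens are still billed at the full $10.00 rate.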

API Integration and Developer Considerations

GPT-5.1 arrives in the OpenAI API later this week with two model identifiers:

- gpt-5.1-chat-latest: GPT-5.1 Instant with adaptive reasoning

- gpt-5.1: GPT-5.1 Thinking with dynamic reasoning depth

Migration from GPT-5

OpenAI provides a three-month sunset period during which GPT-5 (Instant and Thinking) remain accessible through the legacy models dropdown for paid subscribers. This transition window enables developers to:

- A/B Test Performance: Compare GPT-5 and GPT-5.1 outputs on representative workloads

- Validate Instruction Compliance: Verify improved instruction following doesn’t introduce regressions

- Assess Safety Implications: Evaluate whether safety metric regressions impact specific use cases

- Optimize Prompts: Refine prompts to leverage adaptive reasoning and improved tone

Recommended Integration Patterns

For General-Purpose Chatbots: Default to GPT-5.1 Instant with Auto routing enabled. The adaptive reasoning balances response speed and quality without manual model selection.

For Complex Analytical Tasks: Explicitly specify GPT-5.1 Thinking for multi-step reasoning requirements. The dynamic thinking depth optimizes cost-performance trade-offs automatically.

For Cost-Sensitive Applications: Implement prompt caching for repeated system messages and few-shot examples. The $0.125 / 1M tokens cached rate dramatically reduces costs for high-volume applications.

For Safety-Critical Applications: Extensively test GPT-5.1 against your specific safety requirements. The documented regressions in content filtering warrant careful validation before production deployment in healthcare, education, or other sensitive domains.
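The first three patterns can be folded into one small helper. This is a sketch under assumptions: it builds a Chat Completions-style `messages` payload as a plain dictionary (no network call), writes the two announced model IDs in the lowercase form API model IDs normally take, and places the unchanging system prompt first because OpenAI’s prompt caching reuses repeated prompt prefixes. The `complex_task` flag and the "ExampleCo" prompt are hypothetical, caller-side choices, not API parameters.

```python
from typing import Any

# Model IDs as announced for the API; confirm against OpenAI's model list before use.
INSTANT_MODEL = "gpt-5.1-chat-latest"
THINKING_MODEL = "gpt-5.1"


def build_request(system_prompt: str, user_message: str,
                  complex_task: bool = False) -> dict[str, Any]:
    """Return a Chat Completions-style payload.

    Complex multi-step work goes to the Thinking model; everything else defaults to
    Instant. The static system prompt always comes first so that repeated requests
    share an identical prefix, which is what prompt caching can reuse.
    """
    return {
        "model": THINKING_MODEL if complex_task else INSTANT_MODEL,
        "messages": [
            {"role": "system", "content": system_prompt},  # stable, cacheable prefix
            {"role": "user", "content": user_message},      # varies per request
        ],
    }


SYSTEM = "You are a support assistant for ExampleCo. Answer in at most three sentences."
print(build_request(SYSTEM, "Where is my order?")["model"])          # gpt-5.1-chat-latest
print(build_request(SYSTEM, "Audit this contract.", True)["model"])  # gpt-5.1
```

With the official `openai` Python package, such a dictionary can be passed as `client.chat.completions.create(**payload)`. Routing alone does not address the documented safety regressions; safety-critical deployments still need their own validation step.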

Rollout Timeline and Availability

- November 12, 2025: Initial rollout to ChatGPT Pro, Plus, Go, and Business subscribers

- November 13-15, 2025: Gradual expansion to remaining paid users

- November 16-20, 2025: Free tier and logged-out user access begins

- Week of November 18, 2025: API access through the gpt-5.1-chat-latest and gpt-5.1 model IDs

Enterprise and Education Plans: Seven-day early access toggle (disabled by default) provides opt-in GPT-5.1 access before automatic cutover. After the seven-day window, GPT-5.1 becomes the sole default model for these plans.

OpenAI implements gradual rollout to “keep performance stable for everyone,” suggesting infrastructure scaling continues during the deployment window. Not all users will see GPT-5.1 immediately—the company prioritizes system reliability over simultaneous availability.

Critical Analysis: What GPT-5.1 Gets Right and Wrong

Strengths

Adaptive Reasoning Innovation: The selective reasoning deployment in GPT-5.1 Instant represents genuine progress in inference-time behavior, even without a new architecture. Rather than forcing users to choose between fast general-purpose models and slow reasoning models, GPT-5.1 Instant dynamically optimizes this trade-off. This automation reduces cognitive load and delivers strong results without requiring expertise in model selection.

Instruction Following Transformation: GPT-5’s unreliable instruction compliance frustrated developers and users alike. GPT-5.1’s dramatic improvements in format adherence, word count constraints, and stylistic guidelines address a fundamental usability barrier. The practical impact extends beyond convenience—reliable instruction following enables new application categories requiring precise output formatting.

Conversational Naturalness Balance: GPT-5.1 successfully navigates the difficult balance between professional competence and conversational approachability. The warmer default tone makes interactions more pleasant without descending into the excessive informality or sycophantic agreeableness that plagued earlier personality experiments.

Weaknesses

Safety Regression Concerns: The documented backsliding across multiple safety categories raises serious questions about OpenAI’s prioritization. Sherwood News reports GPT-5.1 Thinking scored lower on 9 of 13 testing categories compared to GPT-5 Thinking. Given recent high-profile failures where ChatGPT encouraged self-harm, these regressions warrant skepticism about production deployment in sensitive contexts.

Benchmark Opacity: OpenAI claims “significant improvements” on AIME 2025 and Codeforces but provides no specific performance numbers. This pattern of decreasing transparency makes independent validation impossible and undermines trust in marketing claims. The industry deserves quantitative benchmarks, not qualitative assertions.

Limited Architectural Innovation: GPT-5.1 represents iterative refinement rather than fundamental advance. The same training data, similar architecture, and incremental improvements suggest OpenAI is optimizing existing capabilities rather than discovering new ones. This raises questions about whether the GPT-5 family has reached its performance ceiling.

Thinking Mode Cost Implications: GPT-5.1 Thinking’s increased token consumption on complex tasks (71% more tokens than GPT-5 Thinking) directly translates to higher API costs. While the automatic optimization provides value, developers must carefully monitor actual usage patterns to avoid unexpected billing increases.
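Whether the net bill rises depends on workload mix, because the savings apply to cheap tasks and the increase applies to expensive ones. The sketch below applies this article’s two multipliers (0.5x on simple tasks, 1.71x on complex tasks) to a hypothetical workload; the task counts and baseline token figures are invented for illustration, and treating every task as purely simple or complex is a simplification.

```python
SIMPLE_MULTIPLIER = 0.50   # ~50% fewer tokens than GPT-5 Thinking on simple tasks
COMPLEX_MULTIPLIER = 1.71  # ~71% more tokens than GPT-5 Thinking on complex tasks
OUTPUT_PER_M = 10.00       # USD per 1M output tokens


def thinking_token_change(simple_tasks: int, simple_tokens: int,
                          complex_tasks: int, complex_tokens: int) -> tuple[int, int]:
    """Return (GPT-5 Thinking tokens, estimated GPT-5.1 Thinking tokens) for a workload.

    `simple_tokens` and `complex_tokens` are average GPT-5 Thinking output tokens per task.
    """
    before = simple_tasks * simple_tokens + complex_tasks * complex_tokens
    after = (simple_tasks * simple_tokens * SIMPLE_MULTIPLIER
             + complex_tasks * complex_tokens * COMPLEX_MULTIPLIER)
    return before, round(after)


# Hypothetical mix: 800 simple tasks at 500 tokens, 200 complex tasks at 5,000 tokens.
before, after = thinking_token_change(800, 500, 200, 5_000)
print(before, after, f"{after / before:.2f}x")  # 1400000 1910000 1.36x
print(f"${before * OUTPUT_PER_M / 1e6:.2f} -> ${after * OUTPUT_PER_M / 1e6:.2f}")  # $14.00 -> $19.10
```

In this made-up mix, 80% of tasks get cheaper, yet total output tokens rise by about 36% because the complex tasks dominate the baseline.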

Practical Recommendations

For Individual Users

Immediate Adoption Recommended: The improved instruction following and conversational tone provide clear benefits without meaningful downsides for general usage. The three-month GPT-5 availability window enables easy reversion if specific use cases regress.

Experiment with Personality Presets: The expanded tone options enable tailoring ChatGPT to different contexts. Professional preset for workplace communication, Quirky for creative brainstorming, Efficient for quick factual queries—optimization by use case enhances utility.

Leverage Adaptive Reasoning: Let GPT-5.1 Auto handle model routing rather than manually selecting Instant vs. Thinking. For most users, the automatic routing is likely to match or beat manual selection.

For Developers and Businesses

Staged Migration Approach:

- Deploy GPT-5.1 to development/staging environments

- Run parallel A/B tests comparing GPT-5 and GPT-5.1 performance

- Validate instruction following improvements benefit your specific prompts

- Assess any safety regression impacts on your use case

- Gradually cut over production traffic after validation
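For the final cutover step, a common generic technique is deterministic percentage rollout: hash each user ID into a stable bucket so that raising the percentage only ever adds users to the new model. The salt string and the model ID strings below are illustrative placeholders, not values prescribed by OpenAI.

```python
import hashlib


def in_rollout(user_id: str, rollout_percent: int, salt: str = "gpt-5.1-migration") -> bool:
    """Return True if this user falls inside the current rollout percentage.

    Hashing salt + user_id gives each user a stable bucket in [0, 100), so raising
    rollout_percent only adds users and never moves existing ones back.
    """
    if not 0 <= rollout_percent <= 100:
        raise ValueError("rollout_percent must be between 0 and 100")
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100
    return bucket < rollout_percent


def pick_model(user_id: str, rollout_percent: int) -> str:
    """Choose the model for a user; the ID strings are illustrative placeholders."""
    return "gpt-5.1" if in_rollout(user_id, rollout_percent) else "gpt-5"


for pct in (10, 50, 100):
    print(pct, pick_model("user-42", pct))
```

Each migration stage then becomes a single number to raise (for example 5, 25, 50, 100), and any user can be traced to the model that served them.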

Implement Robust Monitoring: Track token consumption patterns, especially for GPT-5.1 Thinking workloads. The dynamic reasoning depth provides value but introduces cost variability requiring monitoring.
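A lightweight way to catch that variability is to compare each request’s output-token count (for example, read from the usage data returned with each API response) against a rolling average. The window size and the 2x spike factor below are arbitrary illustrative defaults, not recommendations from OpenAI.

```python
from collections import deque
from statistics import mean


class TokenUsageMonitor:
    """Flags requests whose output-token usage jumps well above the recent average."""

    def __init__(self, window: int = 100, spike_factor: float = 2.0) -> None:
        self.recent: deque[int] = deque(maxlen=window)  # sliding window of recent counts
        self.spike_factor = spike_factor

    def record(self, output_tokens: int) -> bool:
        """Store one request's usage; return True if it spikes above the prior window's mean."""
        is_spike = bool(self.recent) and output_tokens > self.spike_factor * mean(self.recent)
        self.recent.append(output_tokens)
        return is_spike


monitor = TokenUsageMonitor(window=3)
print([monitor.record(n) for n in (100, 120, 500, 130)])  # [False, False, True, False]
```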

Reassess Safety Controls: If your application operates in healthcare, mental health, education, or other safety-critical domains, extensively test GPT-5.1’s behavior. The documented safety regressions may necessitate additional guardrails or continued GPT-5 usage.

Optimize for Caching: Restructure prompts to maximize cache hit rates. Fixed system messages, consistent few-shot examples, and stable prompt templates let more input tokens bill at the cached rate, which is 90% cheaper than standard input ($0.125 vs. $1.25 per 1M tokens).

Future Outlook: GPT-5.1 Pro and Beyond

OpenAI plans to “update GPT-5 Pro to GPT-5.1 Pro soon,” suggesting the $200/month top-tier offering will inherit these improvements. BotCrawl reports GPT-5.1 Pro will provide:

- Higher context limits beyond the standard 400K

- Faster response times through priority infrastructure access

- Enterprise-grade service level agreements

The broader competitive landscape continues intensifying. Google’s Gemini 3 Pro testing, Anthropic’s Claude evolution, and Meta’s Llama 4 development demonstrate that frontier model competition remains vibrant. OpenAI’s ability to maintain leadership depends on continued innovation beyond iterative refinements.

The three-month gap between GPT-5 (August) and GPT-5.1 (November) may signal a quarterly cadence, though a single interval is thin evidence. If the pattern holds, expect GPT-5.2 or GPT-6 announcements in Q1 2026, likely coinciding with product-cycle marketing opportunities.

Frequently Asked Questions

What’s the main difference between GPT-5.1 Instant and GPT-5.1 Thinking? GPT-5.1 Instant is the default general-purpose model with new adaptive reasoning—it automatically decides when to engage deeper thinking for complex queries. GPT-5.1 Thinking is the advanced reasoning model for complex multi-step problems, now with dynamic thinking depth that spends less time on simple tasks and more time on genuinely difficult ones.

Can I still use GPT-5 if I prefer it over GPT-5.1? Yes. GPT-5 (both Instant and Thinking) remains available in the legacy models dropdown for paid subscribers for three months. This gives users time to compare performance and adapt prompts before the sunset period ends.

How much does GPT-5.1 cost through the API? Input tokens: $1.25 / 1M tokens. Output tokens: $10.00 / 1M tokens. Cached input (reused prompts): $0.125 / 1M tokens. This pricing is identical to GPT-5 and represents the lowest input cost among frontier models.

Does GPT-5.1 work better for coding than GPT-5? OpenAI claims “significant improvements on math and coding evaluations like AIME 2025 and Codeforces” through adaptive reasoning, but hasn’t published specific benchmark numbers. The improved instruction following should help with code formatting requirements and architectural specifications.

What are the safety concerns with GPT-5.1? OpenAI’s system card documents regressions in 9 of 13 safety categories for GPT-5.1 Thinking compared to GPT-5 Thinking, including harassment/hateful language and disallowed sexual content. GPT-5.1 Instant also shows worse performance than the October 2025 GPT-5 update on violent content, mental health, and emotional reliance categories.

How do I access the new personality presets? Navigate to ChatGPT settings → Personalization → Tone. You’ll find refined versions of existing presets (Default, Friendly, Efficient, Cynical, Nerdy) plus new options (Professional, Candid, Quirky). Granular characteristic controls (conciseness, warmth, scannability, emoji frequency) are rolling out as an experiment to limited users this week.

Is GPT-5.1 available for free users? Yes, but not immediately. Paid users (Pro, Plus, Go, Business) get access first, with free tier and logged-out users receiving access in the days following the November 12, 2025 launch.

What’s adaptive reasoning and how does it work? Adaptive reasoning allows GPT-5.1 Instant to automatically determine when a query is complex enough to warrant extended thinking before responding. Simple questions get instant answers, while complex math, logic, or analysis problems trigger reasoning loops similar to GPT-5 Thinking mode. This optimization happens automatically without user intervention.

Conclusion: Iterative Excellence or Incremental Stagnation?

GPT-5.1 delivers meaningful usability improvements through adaptive reasoning, instruction adherence, and conversational refinement. These enhancements address real frustrations that limited GPT-5’s practical utility, particularly for applications requiring reliable output formatting or natural dialogue.

However, the release also exposes concerning patterns. Safety regressions across multiple categories suggest OpenAI prioritizes capability and user experience over robustness. Benchmark opacity prevents independent validation of performance claims. The lack of architectural innovation raises questions about whether incremental optimization can sustain OpenAI’s competitive position as rivals deploy genuinely novel approaches.

For immediate practical purposes, GPT-5.1 represents a clear upgrade worth adopting. The instruction following transformation alone justifies the transition for most use cases. The adaptive reasoning provides meaningful value without complexity.

For the broader AI competitive landscape, GPT-5.1 signals OpenAI’s shift toward refinement over revolution. Whether this quarterly iteration strategy suffices to maintain leadership depends on competitors’ ability to deliver architectural breakthroughs rather than incremental gains. The race continues.