Internet Scams 2026

TL;DR: Reported internet scam losses reached a record $16.6 billion in 2024, and AI-powered deepfakes and voice cloning have crossed the “indistinguishable threshold,” making digital impersonation far harder to detect. Investment fraud now dominates, with $6.5 billion in reported 2024 losses driven largely by cryptocurrency scams and fake investment platforms, while pig butchering scams, which blend romance fraud with crypto investment fraud, have siphoned an estimated $75.3 billion globally since 2020. Voice cloning needs as little as three seconds of audio to power grandparent and family emergency scams, QR code phishing (“quishing”) surged 587%, and the number of deepfake files online jumped from roughly 500,000 in 2023 to about 8 million in 2025. Older adults reporting single losses of $100,000 or more lost a combined $445 million, an eight-fold increase since 2020. The convergence of artificial intelligence, cryptocurrency infrastructure, and psychological manipulation has created an unprecedented threat landscape in which traditional defenses are increasingly obsolete.

Americans face an escalating crisis as internet scams inflict record-breaking financial and emotional damage. The Federal Bureau of Investigation’s 2024 Internet Crime Complaint Center (IC3) report documents $16.6 billion in losses across nearly 860,000 complaints, a 33% increase from 2023 and the highest annual total in the IC3’s 25-year history. Yet these staggering numbers likely represent just 15-25% of actual losses, because shame, embarrassment, and lack of awareness keep millions of victims from reporting.
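As a rough illustration of what the 15-25% reporting estimate implies, the sketch below scales the $16.6 billion IC3 figure up by each end of that range. It assumes the reporting rate applies uniformly to dollar losses, which is a simplification: large losses may be reported at a different rate than small ones.

```python
# Reported losses from the FBI IC3 2024 report, in billions of USD.
REPORTED_LOSSES_BN = 16.6

def implied_total_losses(reported_bn: float, reporting_rate: float) -> float:
    """Scale reported losses up by an assumed reporting rate (0 < rate <= 1)."""
    if not 0 < reporting_rate <= 1:
        raise ValueError("reporting_rate must be in (0, 1]")
    return reported_bn / reporting_rate

# The 15-25% range quoted above; the higher rate gives the lower bound.
low = implied_total_losses(REPORTED_LOSSES_BN, 0.25)
high = implied_total_losses(REPORTED_LOSSES_BN, 0.15)
print(f"Implied actual losses: ${low:.1f}B to ${high:.1f}B")
```

Under these assumptions, true U.S. losses would fall somewhere between roughly $66 billion and $111 billion.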

The Global Anti-Scam Alliance estimates worldwide losses exceeded $1.03 trillion in 2024, demonstrating that online fraud has evolved from opportunistic crime into sophisticated criminal enterprises operating at industrial scale. What distinguishes 2026’s threat landscape is the weaponization of artificial intelligence, creating scams so convincing that even cybersecurity professionals struggle to differentiate authentic from fraudulent communications.

Research from the University at Buffalo Media Forensic Lab confirms that AI-generated voices have crossed what director Siwei Lyu terms the “indistinguishable threshold.” Major retailers now report receiving over 1,000 AI-generated scam calls daily, with the perceptual indicators that once revealed synthetic voices largely eliminated. Meanwhile, deepfake technology has achieved temporal and behavioral coherence, enabling real-time synthesis that captures not just appearance but movement, mannerisms, and speech patterns across contexts.

Other categories also generated heavy losses: business email compromise and tech support scams accounted for $2.7 billion and $1.4 billion respectively. Investment fraud claimed the top position across all crime categories tracked by the FBI, accumulating $6.5 billion in reported losses, up more than 40% from $4.57 billion in 2023, despite heightened regulatory scrutiny and public awareness campaigns from organizations like the Federal Trade Commission and AARP Fraud Watch Network.

Geographic analysis reveals concerning patterns. California ($3.54 billion), Texas ($1.66 billion), Florida ($1.51 billion), and New York ($1.15 billion) posted the largest absolute losses, together accounting for nearly half of the national total. Per-capita analysis tells a different story. Nevada residents lost $96.29 per person, roughly double the national average of $47.69, while California ($89.79), Washington, D.C. ($84.66), and Alaska ($70.57) also showed elevated per-capita losses.
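The state-level comparisons are easy to check. This minimal sketch uses only the dollar figures quoted in the paragraph and the $16.6 billion national total; it computes the combined share of the four largest states and Nevada's per-capita multiple of the national average.

```python
# State totals (billions of USD) and per-capita figures quoted above.
STATE_LOSSES_BN = {
    "California": 3.54,
    "Texas": 1.66,
    "Florida": 1.51,
    "New York": 1.15,
}
NATIONAL_TOTAL_BN = 16.6
NEVADA_PER_CAPITA = 96.29
NATIONAL_PER_CAPITA = 47.69

top_four = sum(STATE_LOSSES_BN.values())
share = top_four / NATIONAL_TOTAL_BN
ratio = NEVADA_PER_CAPITA / NATIONAL_PER_CAPITA

print(f"Top four states: ${top_four:.2f}B ({share:.1%} of national losses)")
print(f"Nevada per-capita losses are {ratio:.2f}x the national average")
```

The four states total $7.86 billion, about 47% of reported losses, and Nevada’s per-capita figure is about 2.02 times the national average.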

Age-based victimization patterns expose systematic targeting of older adults, who suffered nearly $5 billion in losses and submitted the greatest number of complaints to the IC3. Federal Trade Commission data shows that adults age 60 and older reporting losses exceeding $10,000 quadrupled since 2020, while those losing $100,000 or more increased eight-fold from $55 million to $445 million during the same period. These life-altering losses frequently represent entire retirement savings, leaving victims financially and emotionally devastated.

Industry targeting reveals strategic focus by criminal organizations. The manufacturing sector remained the most-targeted industry despite experiencing a 16.8% reduction in phishing attempts during 2024. Educational institutions faced a staggering 224% increase in phishing campaigns, while the Finance and Insurance sectors saw a 78.2% reduction, likely reflecting improved defensive postures and employee training programs. Technology and Communications experienced a 32.8% decrease, suggesting that security-conscious industries are developing effective countermeasures.

The 2026 scam ecosystem operates across multiple attack vectors simultaneously. Email displaced phone calls and text messages as the most common initial contact method, accounting for the highest proportion of fraud reports in 2023. However, social media emerged as the most financially devastating platform, with Americans reporting $2.7 billion in losses between 2021 and 2023. One in four people who reported losing money to fraud since 2021 identified social media as the scam’s origin, and reported losses from social media exceeded those from any other contact method, including phone calls, emails, websites, and mail.

Payment method preferences reveal criminal adaptation to law enforcement capabilities. Cryptocurrency and bank transfers accounted for more than 50% of all reported losses in 2024, and 83% of losses involved the internet or other technology. Cryptocurrency’s appeal stems from transaction irreversibility, pseudo-anonymity, and cross-border mobility, all of which complicate recovery efforts and prosecution. Gift cards, wire transfers, and reloadable debit cards round out the preferred payment methods, each chosen specifically because funds sent through them are difficult to trace and recover.

The convergence of artificial intelligence with traditional scam methodologies has created hybrid threats that exploit both technological sophistication and human psychology. Voice cloning scams now require just three seconds of audio to generate convincing replicas complete with natural intonation, rhythm, emphasis, emotion, pauses, and breathing patterns. McAfee research found that 70% of people worldwide lack confidence in distinguishing cloned voices from authentic recordings, while one in four survey respondents had directly experienced AI voice cloning scams or knew someone who had.

AI-Powered Deepfakes and Voice Cloning: The New Frontier

Artificial intelligence has fundamentally transformed the scam landscape, elevating fraud from detectable deception into near-perfect impersonation. The technical capabilities enabling this transformation stem from three concurrent developments: video generation models achieving temporal consistency, voice cloning crossing perceptual thresholds, and consumer-accessible tools eliminating technical barriers.

Video generation models like OpenAI’s Sora 2 and Google’s Veo 3 now produce stable, coherent facial animations without the flickering, warping, or structural distortions around eyes and jawlines that previously served as reliable forensic indicators. These models disentangle identity representation from motion information, enabling the same identity to exhibit multiple motion types while maintaining consistency across frames. The result is deepfakes that non-expert viewers cannot distinguish from authentic recordings, particularly in low-resolution contexts like video calls and social media feeds.

DeepStrike cybersecurity research documents explosive growth from approximately 500,000 online deepfakes in 2023 to about 8 million in 2025, a sixteen-fold increase in two years, or roughly 300% compound annual growth. This volume surge coincides with quality improvements that have rendered traditional human-based detection methods increasingly unreliable. The University at Buffalo’s Deepfake-o-Meter forensic tool represents next-generation defensive capabilities, but infrastructure-level protections will prove essential as the perceptual gap between synthetic and authentic media continues narrowing.
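Two different growth figures can be derived from the DeepStrike estimates, and they are easy to conflate: the total increase over the period and the constant yearly rate that would produce it. A minimal sketch, treating the 2023 and 2025 counts as exact endpoints two years apart:

```python
def compound_annual_growth(start: float, end: float, years: float) -> float:
    """Return the constant yearly growth rate that turns `start` into `end`."""
    return (end / start) ** (1 / years) - 1

start_2023 = 500_000   # approximate deepfakes online in 2023
end_2025 = 8_000_000   # approximate deepfakes online in 2025

# Total change over the whole period: 16x, i.e. +1,500%.
total_increase = end_2025 / start_2023 - 1
# Equivalent steady yearly rate: 4x per year, i.e. +300% per year.
annual = compound_annual_growth(start_2023, end_2025, years=2)
print(f"Total increase: {total_increase:.0%}; compound annual growth: {annual:.0%}")
```

Quadrupling each year for two years yields the sixteen-fold total.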

Voice cloning technology has achieved what researchers describe as complete indistinguishability from authentic recordings. Commercial services like ElevenLabs and Resemble AI, along with research systems such as Google’s Tacotron 2 and Microsoft’s VALL-E, can generate custom speech replicating tone, pitch, accent, and speaking style with remarkable realism across multiple languages. McAfee Labs research demonstrates that just three seconds of audio can produce an 85% voice match to the original, with longer samples achieving near-perfect replication.

The technical methodology involves two primary approaches. Text-to-speech systems generate pre-recorded audio from written scripts, enabling criminals to craft perfect messages without live interaction. Real-time voice transformation uses voice-masking software to convert the scammer’s live speech into the cloned voice during active calls. While real-time deployment remains limited due to processing requirements, advancing computational efficiency suggests widespread adoption within 12-18 months.

Caller ID spoofing amplifies voice cloning effectiveness by manipulating VoIP platforms to display trusted numbers in call headers. Scammers routinely impersonate banks, government agencies, and company executives, with victims seeing apparently legitimate phone numbers that reinforce perceived authenticity. Group-IB threat intelligence confirms that the combination of voice cloning with caller ID spoofing creates scenarios where traditional verification methods fail comprehensively.

Real-world cases demonstrate the devastating consequences. In Dover, Florida, Sharon Brightwell lost $15,000 to scammers who cloned her daughter’s voice to stage an emergency demanding immediate payment. The CEO of a UK energy firm transferred €220,000 after a call from someone who sounded exactly like the chief executive of the firm’s German parent company, directing funds to a “trusted supplier.” Engineering firm Arup lost $25 million in a single deepfake incident, while financial sector targeting has increased over 2,100% in three years according to Norton Cyber Safety Insights reports from London’s financial district.

Corporate targeting reveals particular vulnerability in business email compromise schemes enhanced with deepfake elements. WPP’s CEO was impersonated with a cloned voice on a fabricated Teams-style call, while numerous cases involve synthesized executive video messages authorizing fraudulent wire transfers. The FBI’s 2024 public service announcement explicitly warns that generative AI enables criminals to create content bypassing traditional detection methods, with law enforcement struggling to keep pace.

The emotional manipulation amplified by AI deserves particular attention. Voice cloning strips away the cues that would normally trigger skepticism toward a suspicious request. When communications sound, look, and behave exactly like trusted individuals, rational defenses shut down. Victims report profound feelings of betrayal, fear, and helplessness, with the emotional toll frequently exceeding financial damage. Older victims particularly experience shame and self-blame, believing they should have recognized deception despite technological sophistication rendering such detection practically impossible.

Family emergency scams leverage voice cloning with exceptional effectiveness. Parents receive calls from what sounds precisely like their child’s voice, pleading for urgent assistance with bail money, medical bills, or emergency travel funds. Grandparent scams similarly exploit emotional connections, with criminals obtaining voice samples from social media videos, public speaking engagements, or even voicemail greetings. The urgency manufactured by scammers overwhelms critical thinking, prompting victims to act before verifying authenticity.

Romance and friendship scams increasingly incorporate deepfake video capabilities to sustain long-term deception. Traditional romance scams relied on stolen photographs and excuses avoiding video calls. Current iterations deploy AI-generated faces with realistic movement, responsive expressions, and authentic-seeming backgrounds. Victims engage in extended video conversations with entities that don’t actually exist, building emotional connections sophisticated enough to justify substantial financial requests.

The financial services industry faces mounting challenges as deepfakes target customer-facing operations. Banks report AI-generated voices attempting to access accounts, initiate wire transfers, and modify security settings. Multi-factor authentication provides incomplete protection when criminals can synthesize real-time answers to security questions or intercept one-time verification codes through SIM-swapping attacks. Financial institutions are investing heavily in multimodal authentication systems that analyze behavioral patterns, device fingerprints, and contextual anomalies rather than relying solely on voice or visual verification.
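The idea behind multimodal authentication can be sketched as a risk score that combines several independent signals, so that a convincing voice alone cannot carry a request through. Everything here is hypothetical: the signal names, weights, and thresholds are illustrative assumptions, not any bank’s actual system.

```python
from dataclasses import dataclass

@dataclass
class LoginSignals:
    """Hypothetical signals a bank might collect for one session or request."""
    known_device: bool            # device fingerprint seen before on this account
    typing_matches_profile: bool  # behavioral patterns consistent with history
    usual_location: bool          # location consistent with past activity
    recent_sim_change: bool       # carrier reports a recent SIM swap
    voice_check_passed: bool      # voice match only; weak evidence on its own

# Hypothetical weights: each anomaly adds risk. A passed voice check earns no
# credit, and a failed one adds little, because cloned voices can pass it.
WEIGHTS = {
    "unknown_device": 30,
    "behavior_mismatch": 25,
    "unusual_location": 15,
    "recent_sim_change": 40,
    "voice_check_failed": 10,
}

def risk_score(s: LoginSignals) -> int:
    score = 0
    if not s.known_device:
        score += WEIGHTS["unknown_device"]
    if not s.typing_matches_profile:
        score += WEIGHTS["behavior_mismatch"]
    if not s.usual_location:
        score += WEIGHTS["unusual_location"]
    if s.recent_sim_change:
        score += WEIGHTS["recent_sim_change"]
    if not s.voice_check_passed:
        score += WEIGHTS["voice_check_failed"]
    return score

def decision(score: int, step_up_at: int = 30, block_at: int = 60) -> str:
    """Map a score to an action using hypothetical thresholds."""
    if score >= block_at:
        return "block and contact customer via known channel"
    if score >= step_up_at:
        return "require additional verification"
    return "allow"

# A convincing cloned voice on a new device after a SIM swap still scores high.
caller = LoginSignals(known_device=False, typing_matches_profile=True,
                      usual_location=True, recent_sim_change=True,
                      voice_check_passed=True)
print(risk_score(caller), decision(risk_score(caller)))
```

The design choice worth noting is that the voice check contributes almost nothing when it passes; contextual signals such as device history and SIM changes drive the outcome.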

Prevention strategies must evolve beyond traditional approaches. The family “safe word” concept provides foundational protection, requiring pre-established codes that authentic family members can provide but AI systems cannot access. These safe words must be unique, difficult to guess, and avoid information readily available online like street names, alma maters, or phone numbers. Four-word passphrases offer stronger security than single words, while regular rotation prevents compromise through observation or accidental disclosure.
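One way to get a passphrase that avoids guessable personal details is to pick the words at random rather than choosing meaningful ones. The sketch below uses Python’s `secrets` module, which provides a cryptographically secure random choice, and estimates entropy. The 16-word list is a hypothetical placeholder; a real family would use a much larger list, such as a diceware-style list of 7,776 words.

```python
from __future__ import annotations

import math
import secrets
from collections.abc import Sequence

# Illustrative list only. A larger list gives far more entropy per word.
WORDS = (
    "anchor", "biscuit", "canyon", "dolphin", "ember", "falcon", "glacier",
    "harbor", "ivory", "juniper", "kettle", "lantern", "meadow", "nutmeg",
    "orchid", "pepper",
)

def family_passphrase(n_words: int = 4, wordlist: Sequence[str] = WORDS) -> str:
    """Pick n_words independently and uniformly using a secure RNG."""
    return " ".join(secrets.choice(wordlist) for _ in range(n_words))

def entropy_bits(n_words: int, list_size: int) -> float:
    """Entropy of a passphrase whose words are chosen independently and uniformly."""
    return n_words * math.log2(list_size)

phrase = family_passphrase()
print(phrase)                       # random each run
print(entropy_bits(4, len(WORDS)))  # 16.0 bits with this tiny list
print(entropy_bits(4, 7776))        # about 51.7 bits with a 7,776-word list
```

For a spoken safe word, memorability matters as much as entropy, so a family might generate several candidates and keep one everyone can recall.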

Verification protocols represent critical defense layers. Any unexpected request for money or sensitive information should trigger immediate independent confirmation through separately-initiated contact using known phone numbers rather than provided contact information. Hanging up and calling back through official channels defeats caller ID spoofing and allows time for emotional responses to subside. Delayed decision-making naturally counters urgency tactics that form the psychological core of these scams.
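The callback rule described in the paragraph can be written as a small decision function. The key property is that the function never uses contact details supplied by the request itself. The class, contact names, and numbers are hypothetical; the numbers use the fictional 555-01xx range.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class IncomingRequest:
    claimed_identity: str           # who the caller or sender says they are
    callback_number: Optional[str]  # number supplied in the message itself
    asks_for_money: bool
    asks_for_credentials: bool

# Numbers saved in advance from trusted sources (statements, official sites,
# the family member's own phone). Hypothetical example data.
KNOWN_CONTACTS = {
    "Grandson Alex": "+1-555-0100",
    "First Example Bank": "+1-555-0199",
}

def verification_step(req: IncomingRequest) -> str:
    """Return what to do next; never trust contact details supplied by the request."""
    if not (req.asks_for_money or req.asks_for_credentials):
        return "no verification needed"
    known = KNOWN_CONTACTS.get(req.claimed_identity)
    if known is None:
        return "do not act: no independently known number for this identity"
    # Deliberately ignore req.callback_number, which the scammer controls.
    return f"hang up and call {known}"

req = IncomingRequest("Grandson Alex", "+1-555-0142",
                      asks_for_money=True, asks_for_credentials=False)
print(verification_step(req))
```

Here the request supplies its own callback number, but the function returns the number saved in advance, which is what defeats caller ID spoofing.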

Technical defenses include limiting publicly available voice content, maintaining privacy settings on social media platforms, and avoiding oversharing personal details that could inform more convincing scams. Organizations must implement comprehensive security awareness training covering AI-enhanced threats, with particular emphasis on recognizing manufactured urgency, verifying identities through multiple channels, and understanding that visual and auditory “proof” no longer provides reliable authenticity indicators.

Investment Fraud and Pig Butchering Scams: The $75 Billion Criminal Industry

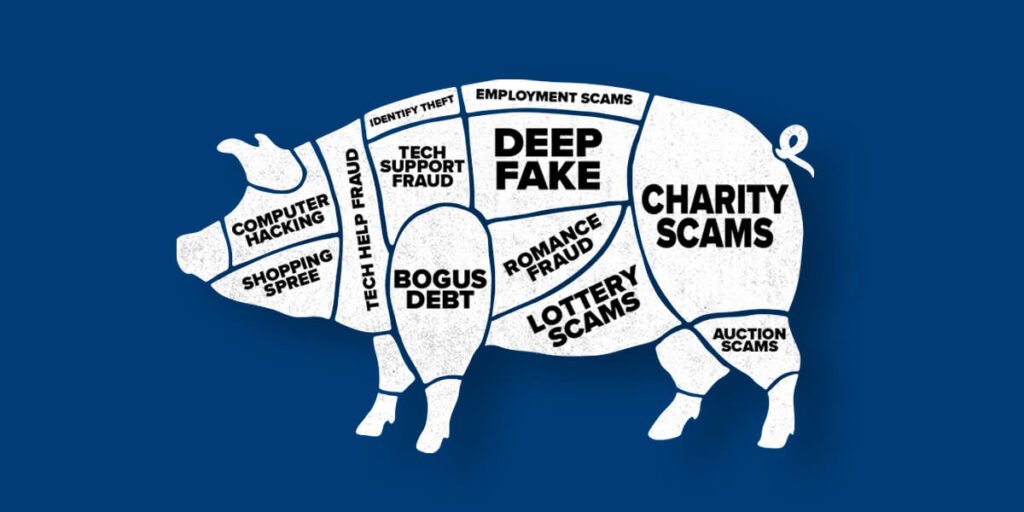

Investment scams have emerged as the single most financially devastating category of internet fraud, accumulating $6.5 billion in FBI-reported losses during 2024 alone. Within this category, “pig butchering” scams represent a particularly sophisticated and psychologically manipulative threat that has extracted at least $75.3 billion globally since January 2020 according to University of Texas research, with actual totals likely far higher. The U.S. Secret Service has issued specific warnings about these schemes, which blend cryptocurrency investment fraud with romance scams to target vulnerable investors.

The term “pig butchering” (Chinese: 杀猪盘, shā zhū pán) comes from the practice of fattening livestock before slaughter, a metaphor for how scammers cultivate victim trust through extended relationship-building before financial exploitation. These schemes combine romance scam elements with investment fraud, creating multi-layered deception that can be sustained for weeks or months before the “slaughter” phase begins.

The operational methodology follows predictable stages that leverage both psychological manipulation and technical infrastructure. Initial contact occurs through dating apps, social media platforms, messaging services like WhatsApp and WeChat, or even wrong-number text messages designed to initiate seemingly innocent conversations. Scammers deploy sophisticated fake profiles, frequently using stolen photographs of attractive individuals to establish visual appeal and credibility.

Relationship cultivation represents the critical “fattening” phase where scammers invest substantial time building trust and emotional connection. Conversations remain casual and friendly, with scammers trained to mirror victims’ interests, shared hobbies, and communication patterns. They might claim to be fellow runners if targets post running content, or share Paris travel stories matching victims’ recent trips. This personalization, derived from social media mining and public information gathering, creates powerful illusions of genuine connection.

Criminal enterprises operating pig butchering scams frequently employ deepfake technology to enhance credibility. Recent investigations expose operations where scammers use stolen photos of Chinese influencer Yangyang Sweet paired with AI-generated video demonstrating wealth and success. These “wealthy friend” or “successful investor” personas display luxury lifestyles, expensive cars, designer clothing, and exclusive restaurants, cultivating aspiration and trust before introducing investment opportunities.

The investment proposition emerges gradually after rapport establishment. Rather than direct solicitation, scammers casually mention their cryptocurrency or investment success, attributing gains to “insider knowledge,” “family connections,” or “exclusive platforms.” They offer to guide victims’ investment strategies, positioning themselves as mentors sharing lucrative opportunities with trusted friends. This approach bypasses skepticism that might accompany direct sales pitches, instead leveraging established emotional bonds.

Fraudulent investment platforms represent sophisticated technical components crucial to sustaining deception. Scammers direct victims to fake cryptocurrency exchanges, trading applications, or investment websites that appear professional and legitimate. These platforms may be available through Apple App Store and Google Play Store, featuring polished interfaces, real-time price charts, and account dashboards displaying fabricated investment growth.

Initial investment requests start small, lowering psychological barriers and establishing a habit of investing. Victims might invest $500-$1,000 initially, with the platform displaying modest but believable returns. Critically, scammers often allow victims to withdraw funds once or twice during early stages, creating powerful proof-of-concept that the investment is legitimate. These successful withdrawals overcome remaining skepticism and prime victims for larger commitments.

Escalation follows predictable patterns. Scammers apply increasing pressure for larger investments, suggesting limited-time opportunities, market movements requiring immediate action, or exclusive deals available only to select participants. They leverage established emotional relationships, implying that trust and commitment require financial participation. Victims who hesitate may receive concerned messages questioning their confidence in the relationship or suggesting lack of seriousness.

The “butchering” occurs when scammers have extracted maximum possible funds. Some operations simply disappear, deleting accounts and vanishing with victims’ money. Others deploy elaborate exit scams demanding payment of exorbitant fees, taxes, or account unlocking charges before withdrawals can proceed. These fabricated requirements extract final amounts before abandonment, with victims realizing too late that both initial investments and additional fees are irretrievably lost.

Financial devastation can be catastrophic. Individual victims have lost entire life savings, with documented cases exceeding $1 million. Philadelphia resident Shreya Datta lost $450,000 to an elaborate romance-investment scam. A Maryland woman’s losses ran into the millions, and secondary “recovery” firms later victimized her again by claiming they could retrieve the lost funds for upfront fees. The FBI’s 2023 Internet Crime Report revealed that investment scams caused the largest losses of all tracked crime categories, soaring 38% to $4.57 billion, with cryptocurrency-related investment fraud accounting for almost 90% of that total.

The human trafficking dimension adds disturbing complexity to pig butchering operations. United Nations reports identify the Mekong region of Southeast Asia as the primary hub for these criminal enterprises, with an estimated 220,000 people forced to execute scams in Cambodia and Myanmar alone. Criminal groups use social media to lure unwitting victims to travel internationally with false promises of legitimate careers, then take them hostage upon arrival.

Trafficked workers are compelled to operate scam campaigns under threat of physical violence, with families frequently forced to pay ransoms in cryptocurrency to secure their release. This creates double victimization where both the fraud targets and the people executing the fraud experience severe harm. The scale of forced labor supporting pig butchering operations has attracted international law enforcement attention, and scam centers were reportedly among the targets of military action during the 2025 Cambodian-Thai border conflict.

Cryptocurrency infrastructure enables pig butchering operations through characteristics that appeal to criminals. Transaction irreversibility means victims cannot recover funds once transferred. Pseudo-anonymity complicates tracking and attribution, particularly when criminals employ mixing services and hop between exchanges across jurisdictions. Chainalysis research documented a single victim’s $1 million loss being divided across 15 transactions and routed through 11 different exchanges, the kind of layered laundering that requires advanced blockchain analysis to trace.
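At its core, tracing funds across hops is a graph traversal: start from the address the victim paid and follow outgoing transfers until the money reaches a custodial service such as an exchange, where investigators can request account records. The sketch below is a breadth-first search over a small hypothetical transaction graph. Real blockchain analysis also tracks amounts, handles mixers, and depends on attribution data linking addresses to exchanges, none of which is modeled here.

```python
from collections import deque

# Hypothetical transaction graph: each address maps to the addresses it sent
# funds to. Real tracing works on on-chain data plus exchange attribution.
TRANSFERS = {
    "victim_deposit": ["hop_a", "hop_b"],
    "hop_a": ["exchange_1_hot_wallet", "hop_c"],
    "hop_b": ["hop_c"],
    "hop_c": ["exchange_2_hot_wallet"],
    "exchange_1_hot_wallet": [],
    "exchange_2_hot_wallet": [],
}
EXCHANGE_WALLETS = {"exchange_1_hot_wallet", "exchange_2_hot_wallet"}

def trace_to_exchanges(start, graph, exchanges, max_hops=10):
    """Breadth-first search from `start`, returning each reachable exchange wallet
    with the smallest number of hops needed to reach it."""
    found = {}
    seen = {start}
    queue = deque([(start, 0)])
    while queue:
        addr, hops = queue.popleft()
        if addr in exchanges:
            found[addr] = hops
            continue  # funds reached a custodial service; stop following here
        if hops == max_hops:
            continue
        for nxt in graph.get(addr, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, hops + 1))
    return found

result = trace_to_exchanges("victim_deposit", TRANSFERS, EXCHANGE_WALLETS)
print(result)  # {'exchange_1_hot_wallet': 2, 'exchange_2_hot_wallet': 3}
```

Breadth-first order reports the shortest path to each exchange first, which is usually the most useful lead; splitting funds across many intermediate addresses, as in the Chainalysis example, mainly widens the graph that has to be searched.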

Many wallets linked to pig butchering scams show connections to other fraud types, suggesting networked criminal operations rather than isolated scammers. Chainalysis research indicates that pig butchering comprised 33.2% of the $12.4 billion in cryptocurrency fraud during 2024, or roughly $4.1 billion, with 40% year-over-year growth demonstrating escalating sophistication and reach. The estimated scale exceeds that of other major financial crimes, including business email compromise, establishing pig butchering as among the most lucrative criminal enterprises globally.

Red flag recognition provides essential protection. Unsolicited contact from strangers on dating apps or social media should trigger immediate skepticism, particularly when conversations quickly move to messaging platforms outside the original service. Reluctance to meet in person or engage in video calls is a classic warning sign, as is any discussion of investment opportunities from someone met online.

Investment platforms demanding cryptocurrency transfers to shell companies or unknown virtual asset service providers should raise immediate alarm. Pressure to invest larger amounts with threats that relationships will end otherwise indicates manipulation rather than genuine concern. Messages emphasizing urgency, limited-time opportunities, or exclusive insider access follow standard scam playbooks designed to bypass critical thinking.
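The red flags in the two paragraphs above can be kept as a simple checklist. This sketch is hypothetical: the keys are labels invented for illustration, and the observations would come from the person reviewing the conversation, not from automated message analysis. It deliberately uses no scoring threshold, because any single flag is reason enough to pause.

```python
from __future__ import annotations

# Red flags drawn from the paragraphs above; keys are hypothetical labels.
RED_FLAGS = {
    "unsolicited_contact": "Unsolicited contact from a stranger online",
    "moved_off_platform": "Conversation quickly moved to another messaging app",
    "avoids_meeting": "Avoids meeting in person or video calls",
    "raises_investing": "Brings up investment opportunities",
    "unknown_platform": "Asks for crypto transfers to an unfamiliar platform or company",
    "relationship_pressure": "Ties the relationship to investing more",
    "urgency_or_exclusivity": "Stresses urgency, deadlines, or insider access",
}

def triggered_flags(observations: dict[str, bool]) -> list[str]:
    """Return descriptions of every red flag observed, in checklist order."""
    return [desc for key, desc in RED_FLAGS.items() if observations.get(key, False)]

obs = {"unsolicited_contact": True, "moved_off_platform": True, "raises_investing": True}
flags = triggered_flags(obs)
for f in flags:
    print("-", f)
if flags:
    print("Stop: do not send money; verify independently.")
```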

Financial institutions play crucial roles in interdiction. Banks should enforce Know Your Customer requirements, promptly freezing suspicious accounts and conducting compliance checks. Following up with account owners suspected of victimization, requesting supporting documentation for large transfers, and contacting transfer recipients to confirm legitimate purposes all create intervention opportunities. Filing Suspicious Activity Reports (SARs) alerts FinCEN and, through it, law enforcement, while voluntary information sharing under Section 314(b) of the USA PATRIOT Act lets institutions compare notes on suspected fraud.
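A bank’s interdiction steps are typically triggered by monitoring rules. The sketch below shows one hypothetical rule: a first-time payment to a crypto exchange that is much larger than the customer’s usual transfers triggers the follow-up actions listed above. The field names and the 3x multiplier are illustrative assumptions, not regulatory requirements or any institution’s actual logic.

```python
from __future__ import annotations

from dataclasses import dataclass

@dataclass
class Transfer:
    amount: float
    payee_is_new: bool              # first payment to this recipient
    payee_is_crypto_exchange: bool  # recipient is a crypto exchange or other VASP

# Hypothetical multiplier: "unusually large" means more than 3x the customer's
# typical largest transfer. Real institutions tune such thresholds themselves.
SIZE_MULTIPLIER = 3

def review_actions(t: Transfer, typical_max: float) -> list[str]:
    """Return the interdiction steps this hypothetical rule would trigger."""
    unusual_size = t.amount > SIZE_MULTIPLIER * typical_max
    if not (t.payee_is_new and t.payee_is_crypto_exchange and unusual_size):
        return []
    return [
        "hold the transfer pending review",
        "contact the account owner through a known channel",
        "request documentation of the transfer's purpose",
        "evaluate whether a Suspicious Activity Report is warranted",
    ]

t = Transfer(amount=50_000, payee_is_new=True, payee_is_crypto_exchange=True)
for step in review_actions(t, typical_max=5_000):
    print("-", step)
```

Requiring all three conditions keeps false positives down; a looser rule would catch more scams at the cost of delaying more legitimate transfers.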

Victims should act immediately upon recognizing victimization. Notifying banks to freeze accounts, filing reports with the FTC at ReportFraud.ftc.gov, submitting complaints to the FBI’s Internet Crime Complaint Center at ic3.gov, contacting the Secret Service at cryptofraud@usss.dhs.gov for cryptocurrency-related fraud, and engaging local law enforcement all enable faster response and potential asset recovery. Providing complete and accurate information including scammer identifiers, website addresses, screenshots, and transaction details assists investigations.

Prevention education emphasizes healthy skepticism toward online relationships, particularly those involving financial discussions. Never sharing login credentials, wallet access, or screens during calls prevents direct theft. Conducting independent research on investment opportunities, consulting regulatory authority websites, and searching for scam reports before committing funds provides additional protection. Requesting financial statements, annual reports, and audit results for proposed investments separates legitimate opportunities from fabricated schemes.

The psychological resilience required to resist pig butchering scams stems from understanding that emotional manipulation represents the core methodology. Criminal enterprises train operators in relationship development, objection handling, and persuasion techniques specifically designed to override rational decision-making. Recognizing that manufactured connection, fabricated shared interests, and apparent romantic or friendly investment advice all follow scripted patterns helps maintain perspective.

Phishing, QR Code Scams, and Traditional Attack Vectors

Despite the emergence of AI-enhanced threats, traditional phishing and its evolved variants still account for a massive share of cybercrime activity. Email remained the most common delivery method for fraud in 2023, and nearly 1 million phishing attacks were recorded worldwide in Q4 2024 alone, an increase of more than 100,000 from the previous quarter. The tactics, techniques, and procedures criminals employ have also advanced substantially.

QR code phishing, termed “quishing” by cybersecurity professionals, has grown explosively as legitimate QR code adoption expanded during and after the COVID-19 pandemic. KeepNet Labs research documents 8,878 quishing incidents reported between June and August 2023, with activity peaking in June at 5,063 cases. The share of phishing attacks using QR codes surged from 0.8% in 2021 to 12.4% in 2023 and held at 10.8% through 2024. Overall quishing incidents increased 587% year-over-year, and 26% of all malicious links are now embedded in QR codes.

The appeal of QR codes for criminals stems from their design. QR codes were built for convenience rather than security, creating ideal conditions for exploitation. Users typically cannot read or verify the encoded web address before scanning, unlike hyperlinks, where URLs remain visible. Even when QR codes include human-readable text, attackers can modify that text to make the destination look trustworthy. The visual simplicity and mobile-first usage patterns bypass the critical evaluation that might apply to suspicious emails or links.
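Because the encoded address stays hidden until the code is decoded, a practical habit is to inspect the decoded link before opening it. This minimal sketch uses Python’s standard `urllib.parse` and `ipaddress` modules to flag common warning signs in a decoded QR payload. The allowlisted domain `parking.example.gov` and the shortener list are hypothetical placeholders; in practice the expected domain is the official one you already trust for that service.

```python
from __future__ import annotations

import ipaddress
from urllib.parse import urlsplit

# Hypothetical allowlist and shortener list, for illustration only.
EXPECTED_DOMAINS = {"parking.example.gov"}
SHORTENERS = {"bit.ly", "tinyurl.com", "t.co"}

def qr_url_warnings(payload: str, expected: set[str] = EXPECTED_DOMAINS) -> list[str]:
    """Inspect the text decoded from a QR code before opening it."""
    parts = urlsplit(payload.strip())
    if parts.scheme not in ("http", "https"):
        return [f"not a web link (scheme: {parts.scheme or 'none'})"]
    warnings = []
    if parts.scheme != "https":
        warnings.append("uses unencrypted http")
    host = (parts.hostname or "").lower()
    try:
        ipaddress.ip_address(host)
        warnings.append("points to a raw IP address")
    except ValueError:
        pass  # host is a name, not an IP literal
    if host in SHORTENERS:
        warnings.append("uses a link shortener that hides the destination")
    # Accept the expected domain itself or its subdomains only.
    if not any(host == d or host.endswith("." + d) for d in expected):
        warnings.append(f"domain {host!r} is not an expected official domain")
    return warnings

print(qr_url_warnings("https://parking.example.gov/pay"))
print(qr_url_warnings("http://parking-example-gov.pay-now.co/ticket"))
```

The first call returns an empty list; the second flags both the unencrypted scheme and a lookalike domain that merely contains the official name.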

NordVPN research reveals that 73% of Americans scan QR codes without verifying them, and more than 26 million people have been directed to malicious sites through this vector. Low detection and reporting rates compound the problem: according to cybersecurity analysis, recipients accurately identify and report only 36% of quishing incidents. This gap in awareness and preparedness leaves vast populations vulnerable to QR-based attacks.

Industry targeting shows strategic focus by criminals employing quishing tactics. The energy sector faces the highest exposure, receiving 29% of more than 1,000 malicious QR codes observed in phishing emails. Manufacturing, insurance, technology, and financial services also face elevated risk, suggesting criminals view these industries as either more lucrative or more vulnerable. Retail has the highest miss rate: retail employees fail to detect and report malicious QR codes more often than employees in other sectors.

Deployment methods for malicious QR codes span physical and digital environments. Criminals place fake QR code stickers over legitimate codes on parking meters, restaurant menus, utility bill payment kiosks, and public transit posters. Victims scanning these codes get redirected away from intended payment or information websites to credential-harvesting pages or account-draining sites. San Francisco Municipal Transportation Agency reported widespread parking ticket scams where fake tickets with malicious QR codes were placed on vehicles, directing victims to counterfeit payment websites replicating the SFMTA’s official design.

Email-based quishing embeds QR codes in messages claiming to require urgent account verification, password resets, or payment updates. By placing codes in PDF attachments or JPEG images, criminals bypass email security filters that typically scan for malicious links in message bodies. Victims scan codes with mobile devices, which often have weaker security protections than desktop computers, and find themselves on convincing phishing sites harvesting credentials, credit card numbers, or personal information.

Recorded Future research documents a 433% increase in QR code scans between 2021 and 2023, with phishing-as-a-service platforms like Tycoon 2FA and Greatness now incorporating QR codes to steal credentials and multi-factor authentication tokens. Executives face particular targeting, receiving 42 times more QR code attacks than other employees due to their broader access to company resources and authority to authorize transactions.

Singapore cases demonstrate quishing’s sophistication and impact. A 60-year-old woman lost $20,000 after scanning a QR code pasted on a bubble tea shop’s window that promised a free drink for completing a survey. The code led her to download a third-party application that requested microphone and camera access, ultimately compromising her device and giving scammers access to her accounts. Similar tactics have appeared across the Asia-Pacific region, where AI-related fraud attempts surged 194% in 2024 compared to 2023.

Traditional email phishing has simultaneously evolved through artificial intelligence integration. Large language models like ChatGPT enable threat actors to generate highly believable phishing emails devoid of grammatical errors that previously served as reliable warning signs in email scams and phishing attacks. Attackers can produce 1,000 phishing emails in under two hours for approximately $10, with AI likely contributing to a 1,265% increase in phishing attacks according to Insikt Group research.

AI-crafted phishing emails are also alarmingly effective, with click-through rates more than four times those of human-written messages. This effectiveness stems from the personalization, emotional manipulation, and contextual relevance that AI enables at scale in spear phishing campaigns. Brand impersonation remains a favored tactic: according to Microsoft Security data, 54% of phishing campaigns targeting consumers impersonate online software and service brands.

Vishing (voice phishing) attacks surged 442% between the first and second halves of 2024, frequently combining caller ID spoofing with social engineering to impersonate trusted entities. Criminals pose as bank representatives, technical support, government officials, or company executives to extract sensitive information or get victims to authorize fraudulent transactions. Voice cloning makes these calls far harder to detect, rendering many nearly indistinguishable from legitimate communications.

Smishing (SMS phishing) similarly exploded, with text message scams generating $330 million in losses during 2022 according to FTC data. Toll road scams emerged as a hot trend in 2025, increasing 900% year-over-year. Victims receive text messages claiming unpaid tolls requiring immediate payment to avoid late fees, with convenient links provided to fraudulent payment sites. The urgency manufactured by these messages, combined with legitimacy suggested by sender information and plausible scenarios, drives high conversion rates.

Government impersonation scams proved particularly costly. FTC data shows government imposter scam losses rose by $171 million from 2023, reaching $789 million in 2024. IRS scams demand tax payments while threatening arrest or legal action. Law enforcement impersonators claim a warrant has been issued and demand immediate payment or information to resolve it. Grant scams offer government grants or loans in exchange for upfront fees or personal information. These scams exploit authority bias and fear of legal consequences to override rational evaluation.

Social media platforms are an increasingly prevalent fraud vector, accounting for one in four reported fraud losses and exceeding losses from phone calls, emails, websites, and advertising combined. Americans reported $2.7 billion in social media fraud losses according to CBS News reporting on FTC data, with online shopping scams the most common category. Victims order products marketed on Facebook, Instagram, or Snapchat from fake online stores and either never receive them or receive counterfeit goods substantially different from the advertised items.

Investment scams promoted through social media accounted for over half of reported social media losses despite making up a smaller share of incidents. Fraudulent actors pose as successful investors, promote bogus applications or websites promising huge returns, and leverage trust built through extended social media engagement. Romance scams initiated through social platforms follow similar patterns, with criminals sending friend requests, developing online relationships, and eventually requesting money for fabricated emergencies or investment opportunities.

The sophistication of modern phishing extends to the technical infrastructure behind attacks. AWS SNS (Simple Notification Service) gives criminals mass SMS distribution capabilities that benefit from the reputation of AWS infrastructure. VAST (Video Ad Serving Template) tags used in digital advertising are manipulated to inject malvertising that redirects users to phishing sites or downloads malware. These exploits leverage trusted platforms and services to bypass security measures and enhance perceived legitimacy.

Business email compromise (BEC) represents enterprise-focused phishing that generated $2.7 billion in FBI-reported losses during 2024. Criminals conduct reconnaissance to identify organizational structures, accounting procedures, and executive communication patterns. They compromise email accounts or create convincing spoofs to authorize fraudulent wire transfers, payroll diversions, or gift card purchases. The addition of deepfake audio and video elements to BEC attacks has elevated effectiveness, with employees receiving synthesized messages from executives they believe to be authentic.

Tech support scams accumulated $1.4 billion in losses, typically beginning with pop-up warnings claiming device infections or security compromises. Victims call provided numbers to reach “support technicians” who remotely access computers, install actual malware while claiming to remove threats, and charge hundreds or thousands of dollars for unnecessary services. Remote access tools provide criminals with ongoing device access, enabling later account takeovers, data theft, and further victimization.

Emerging Scam Trends and Future Threats

The scam landscape continues evolving with emerging threats that combine new technologies, exploit current events, and adapt to defensive measures. Several trends deserve particular attention for their potential to cause substantial harm in 2026 and beyond.

Digital arrest scams rank among the most psychologically devastating fraud types, emerging from India and spreading to Western nations. These elaborate operations involve criminals impersonating law enforcement across extended video calls spanning hours or even days. Scammers claim victims are linked to serious crimes, conduct interrogations, threaten criminal charges, and demand settlement payments or fines. The use of AI-generated deepfake videos of legitimate law enforcement officers enhances credibility and coercion.

The psychological toll is severe, with prolonged interrogation creating exhaustion, fear, and desperation that override rational thinking. In September 2024, a retired doctor in Hyderabad, India, died from a heart attack after enduring nearly 70 hours of digital arrest and constant video surveillance by scammers. While digital arrest is currently more prevalent in India, AARP identifies it as one of the top five scams to watch in 2026 as tactics migrate globally.

“Hello Pervert” or sextortion scams leverage fabricated claims of compromising video or information to extort payment. Victims receive emails claiming hackers have recorded them viewing pornography or other compromising material through webcam access. Scammers demand payment, typically in cryptocurrency or gift cards, threatening to distribute material to contacts if payment isn’t received. These scams rely entirely on psychological manipulation and manufactured shame, as actual compromising material rarely exists.

AARP recommends never opening email attachments from unrecognized addresses, as most blackmail messages arrive as PDFs. Staying calm despite the manufactured urgency, and remembering that legitimate law enforcement never demands cryptocurrency or gift card payments, provides further protection. Reporting to the appropriate authorities rather than paying enables intervention and potential identification of the scammers.

Recovery scams target previous fraud victims, compounding losses through false promises of fund recovery. Criminals contact victims claiming to represent law enforcement, recovery firms, or consumer protection agencies able to retrieve lost money. They demand upfront fees, personal information, or cryptocurrency payments to initiate recovery processes that never occur. Maryland cases document victims losing additional millions to recovery scammers after initial pig butchering losses, demonstrating how vulnerability persists after initial victimization.

Job and employment scams tripled in frequency from 2020 to 2024, with reported losses jumping from $90 million to $501 million according to FTC data. Fake job offers appear on LinkedIn, Indeed, and other job boards, advertising remote work requiring little effort while offering quick cash. Scammers request personal information including Social Security numbers, addresses, and direct deposit details for “routine paperwork,” enabling identity theft and account takeover. Others demand payment for equipment, training, or background checks that never materialize.

Task scams emerged as a 2025 trend in which victims complete simple assignments, such as liking social media posts, in exchange for small payments. Initial payouts establish a pattern of trust, after which victims are asked to invest their own money for fabricated reasons. These scams can continue for months, with victims believing they’re performing legitimate work before experiencing substantial losses. The prolonged engagement makes victims reluctant to acknowledge what happened, as the time invested and apparent legitimacy create cognitive dissonance.

Friendship scams expand beyond traditional romance fraud to target platonic relationships. Criminals build connections through shared interests, professional networking, or hobby groups before introducing financial requests or investment opportunities. AARP notes that “we’re starting to call them friendship scams instead of romance scams, because a lot of times now it’s somebody wanting to be your friend and talking you up.” The emotional manipulation proves equally effective whether relationships present as romantic or platonic.

Brushing scams involve sending unsolicited packages to individuals, often with QR codes that direct to phishing websites or download malware. The FBI issued specific warnings in July 2024 about these scams, which exploit curiosity about unexpected deliveries. Beyond immediate QR code dangers, brushing enables fraudulent sellers to post fake verified purchase reviews using victims’ information, boosting product rankings while compromising privacy.

Cryptocurrency giveaway scams leverage AI-generated celebrity endorsements to promote fraudulent investment opportunities. Deepfake videos of Elon Musk, Vitalik Buterin, and other public figures promise to double cryptocurrency sent to specific addresses, stealing funds that victims transfer expecting matching returns. These scams spread rapidly on YouTube, X (formerly Twitter), and other social platforms, with individual campaigns collecting millions before detection and removal.

Pump and dump schemes targeting low-capitalization cryptocurrencies coordinate across Telegram and X to artificially inflate token prices before orchestrated selling crashes values and leaves retail investors with worthless holdings. Research shows 3.6% of new tokens experience pump and dump manipulation, with Solana’s Pump.fun platform seeing 98% of launches result in rug pulls or pumps. India’s Enforcement Directorate busted operations using celebrity deepfakes to promote Ponzi schemes valued at ₹2,300 crore.

NFT and metaverse scams exploit emerging technology adoption through fake minting websites, rug pull projects where developers abandon initiatives after collecting funds, and phishing targeting digital wallet credentials. The convergence of QR codes with NFT authentication creates additional attack surface, with QR TIGER CEO Benjamin Claeys noting that “QR codes and NFTs seem to be a great match; a lovely marriage,” while simultaneously cautioning about security implications.

Deepfake celebrity scams extend beyond cryptocurrency to promote miracle products, weight loss supplements, and fraudulent endorsements. Victims believe they’re purchasing legitimate celebrity-recommended products, only to receive counterfeit goods or nothing at all. The authority bias created by familiar faces drives high conversion rates, with viral sharing amplifying reach before platform removal.

Spear phishing that incorporates personal information from data breaches creates highly targeted campaigns. Criminals leverage stolen emails, passwords, birth dates, and employment information to craft messages whose specific details lend them apparent legitimacy. 2024 saw large-scale breaches at major retailers and brands, giving fraudsters extensive databases for sophisticated campaigns. Which? research indicates 28% of UK adults say they were targeted by voice cloning scams in the past year, while 46% were unaware such scams existed.

Financial-relief scams exploit economic uncertainty by offering bogus health insurance, tariff relief schemes, or assistance programs. Identity Theft Resource Center CEO Eva Velasquez predicts increased prevalence in 2026 as economic pressures mount: “People will be looking for ways to alleviate their burdens. So when they get a message that says, ‘Hey, apply here, just send us this information,’ and it’s all of your personal information, including your checking account, I think they’ll be vulnerable to that.”

The spread of agentic AI, capable of taking actions autonomously, creates threats beyond what today’s tools allow. Anthropic research acknowledges that AI models like Claude can be misused for sophisticated cyberattacks while arguing that the same abilities are crucial for cyber defense. The arms race between AI-enhanced attacks and AI-powered defenses will define the 2026-2027 threat landscape, with defensive capabilities needing constant evolution to match offensive innovations.

Real-time deepfake synthesis represents the next frontier: researchers expect entire video call participants to be generated live, interactive AI-driven actors adapting instantly to prompts, and scammers deploying responsive avatars rather than static content. The shift from pre-rendered clips to temporal behavioral coherence, producing entities that behave like specific people over time rather than merely resembling them, will render current detection methodologies obsolete.

Infrastructure-level protections, including cryptographic content signing, Coalition for Content Provenance and Authenticity (C2PA) specifications, and multimodal forensic tools, will prove essential as human perceptual judgment becomes insufficient for authenticating communications. Organizations must invest in these defensive capabilities now to avoid catastrophic vulnerabilities as synthesis technology continues advancing.
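The core idea of cryptographic content signing is to bind a hash of the media to a signed claim about who produced it, so that any later edit breaks verification. The Python sketch below shows that bind-and-verify step using only the standard library. It is a simplified stand-in, not C2PA: C2PA manifests use certificate-based public-key signatures that anyone can verify, whereas this sketch substitutes a shared-key HMAC, and the manifest fields (`creator`, `sha256`) are hypothetical.

```python
import hashlib
import hmac
import json


def _canonical(manifest: dict) -> bytes:
    """Serialize the manifest deterministically so signer and verifier hash the same bytes."""
    return json.dumps(manifest, sort_keys=True, separators=(",", ":")).encode()


def sign_content(content: bytes, creator: str, key: bytes) -> dict:
    """Bind a SHA-256 hash of the content and a creator claim under a keyed signature."""
    manifest = {"creator": creator, "sha256": hashlib.sha256(content).hexdigest()}
    tag = hmac.new(key, _canonical(manifest), hashlib.sha256).hexdigest()
    return {"manifest": manifest, "signature": tag}


def verify_content(content: bytes, signed: dict, key: bytes) -> bool:
    """True only if the manifest is untampered AND the content matches its recorded hash."""
    expected = hmac.new(key, _canonical(signed["manifest"]), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, signed["signature"]):
        return False  # manifest altered, or signed with a different key
    actual_hash = hashlib.sha256(content).hexdigest()
    return hmac.compare_digest(actual_hash, signed["manifest"]["sha256"])


if __name__ == "__main__":
    key = b"demo-key"  # hypothetical shared secret, for illustration only
    signed = sign_content(b"original video frames", "newsroom", key)
    print(verify_content(b"original video frames", signed, key))  # unchanged content
    print(verify_content(b"deepfaked video frames", signed, key))  # edited content
```

A valid signature shows only that the content is unchanged since a known key signed it; missing provenance data does not by itself prove a file is fake.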

Comprehensive Protection Strategies and Defensive Frameworks

Defending against the 2026 scam ecosystem requires multi-layered approaches combining technological solutions, procedural safeguards, and psychological awareness. No single defensive measure provides complete protection, but integrated strategies significantly reduce vulnerability while enabling rapid response when attacks occur.

Individual Protection Measures

Authentication and Verification Protocols: Establish family safe words or passphrases for emergency communications, choosing phrases that are difficult to guess and cannot be found through online research. Four-word phrases offer far more security than single words, and rotating them regularly limits the damage if one is exposed. Any unexpected request for money, however authentic its source appears, should trigger independent verification through a separately initiated call to a known number from your contacts, never the caller ID or contact details provided in the request.
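To make the four-word recommendation concrete, the Python sketch below picks words with the standard library's `secrets` module (a cryptographically secure random generator) and computes the resulting entropy. The 24-word list is a hypothetical placeholder kept short for readability; a real family phrase should be drawn from a much larger list, such as the 7,776-word EFF diceware list.

```python
import math
import secrets

# Hypothetical sample list for illustration only; use a much larger list in practice.
WORDS = [
    "anchor", "biscuit", "canyon", "dolphin", "ember", "falcon",
    "granite", "harbor", "igloo", "juniper", "kettle", "lantern",
    "meadow", "nectar", "orchid", "pepper", "quartz", "raven",
    "saddle", "thistle", "umber", "velvet", "walnut", "yonder",
]


def make_safe_phrase(words: list[str], n_words: int = 4) -> str:
    """Pick n_words independently and uniformly using a cryptographically secure RNG."""
    return " ".join(secrets.choice(words) for _ in range(n_words))


def phrase_entropy_bits(list_size: int, n_words: int) -> float:
    """Entropy of a phrase of n_words drawn independently from list_size words."""
    return n_words * math.log2(list_size)


if __name__ == "__main__":
    print(make_safe_phrase(WORDS))
    print(f"{phrase_entropy_bits(len(WORDS), 1):.1f} bits for one word from this list")
    print(f"{phrase_entropy_bits(len(WORDS), 4):.1f} bits for four words from this list")
    print(f"{phrase_entropy_bits(7776, 4):.1f} bits for four words from a 7,776-word list")
```

With the 24-word sample, four words give only about 18 bits of entropy; with a 7,776-word list the same four words give about 51.7 bits, so list size matters as much as word count.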

Critical Thinking and Skepticism: Manufactured urgency represents the cornerstone of scam psychology. Legitimate emergencies rarely require immediate wire transfers, cryptocurrency payments, or gift card purchases. Pausing before responding to urgent requests, regardless of emotional content, provides time for rational evaluation. Scammers rely on emotional responses overwhelming logic, making deliberate delays extraordinarily effective countermeasures.

Digital Hygiene and Privacy: Limit publicly available personal information that criminals can weaponize for personalized attacks. Review and restrict social media privacy settings, avoid oversharing voice content, and carefully consider what photographs, videos, and personal details remain accessible. Information shared online never truly disappears and can be aggregated to create comprehensive profiles enabling sophisticated social engineering.

Account Security: Enable two-factor or multi-factor authentication on all accounts offering the capability, particularly email, banking, and social media. Even when passwords are compromised, additional authentication factors prevent unauthorized access. Use strong, unique passwords across different sites rather than reusing credentials. Password managers make this practical by generating and storing more unique passwords than anyone could remember.

Payment Method Awareness: Understand that requests for payment via gift cards, cryptocurrency, wire transfers, or reloadable debit cards virtually guarantee fraud. Legitimate businesses, government agencies, and family members in genuine emergencies don’t demand these payment methods. Their primary appeal to criminals stems from transaction irreversibility and difficulty tracing funds.

QR Code Caution: Never scan QR codes from unsolicited emails, unexpected packages, or public locations without verification. Use QR code scanning applications with built-in security features that preview URLs before opening and filter malicious destinations. Verify QR code legitimacy by contacting organizations through official channels rather than information provided alongside the code.
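A scanning app that previews destinations can apply simple checks before anything opens. The Python sketch below assumes the QR code has already been decoded to a URL string (decoding the image itself needs a separate library) and flags common warning signs: no HTTPS, an `@` that hides the real host, a raw IP address, punycode lookalike labels, and a domain you have not verified. The `trusted_domains` set is a hypothetical allowlist you would fill with domains confirmed through official channels; passing these checks does not make a link safe.

```python
import ipaddress
from urllib.parse import urlsplit


def _is_ip_literal(host: str) -> bool:
    """True if the host is a bare IPv4 or IPv6 address."""
    try:
        ipaddress.ip_address(host)
        return True
    except ValueError:
        return False


def _matches(host: str, trusted: str) -> bool:
    """True if host equals the trusted domain or is one of its subdomains."""
    return host == trusted or host.endswith("." + trusted)


def assess_qr_url(url: str, trusted_domains: set[str]) -> list[str]:
    """Return warnings for a URL decoded from a QR code; an empty list means no flags."""
    warnings: list[str] = []
    parts = urlsplit(url.strip())
    host = (parts.hostname or "").rstrip(".")  # urlsplit lowercases the hostname
    if parts.scheme != "https":
        warnings.append(f"not HTTPS (scheme: {parts.scheme or 'none'})")
    if not host:
        warnings.append("no hostname found")
        return warnings
    if parts.username is not None:
        warnings.append("contains '@' credentials that can disguise the real host")
    if _is_ip_literal(host):
        warnings.append("raw IP address instead of a domain name")
    if any(label.startswith("xn--") for label in host.split(".")):
        warnings.append("punycode label (possible lookalike characters)")
    if not any(_matches(host, d) for d in trusted_domains):
        warnings.append(f"{host!r} is not a domain you have verified")
    return warnings
```

For example, `assess_qr_url("https://example.org@evil.test/login", {"example.org"})` warns about the `@` trick and about `evil.test`, even though the URL begins with the trusted name.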

Communication Verification: Independently verify unexpected communications from financial institutions, government agencies, or companies by contacting them through official phone numbers or websites found through your own research rather than information provided in suspicious messages. Legitimate organizations expect and respect verification efforts, while scammers typically become hostile or evasive when questioned.

Social Media Vigilance: Recognize that friend requests from strangers, particularly those quickly developing into romantic or close friendships, frequently represent scam attempts. Investment advice from online acquaintances, especially involving cryptocurrency, demands extreme skepticism. Conduct reverse image searches on profile photographs to identify stolen or recycled images used across multiple fake accounts.

Investment Due Diligence: Research any investment opportunity thoroughly before committing funds. Consult regulatory authority websites including the Securities and Exchange Commission, Financial Industry Regulatory Authority, and state securities regulators to verify registration status. Search for company names combined with “scam” or “fraud” to surface warning signs. Request and review financial statements, annual reports, and audit results, understanding that legitimate opportunities provide transparency while scams offer fabricated documentation or excuses avoiding scrutiny.

Reporting and Response: Report suspected scams immediately to multiple authorities including the FTC at ReportFraud.ftc.gov, the FBI’s IC3 at ic3.gov, and local law enforcement. For cryptocurrency fraud, contact the Secret Service at cryptofraud@usss.dhs.gov. Quick reporting enables faster investigation, potential asset freezing, and warning dissemination protecting others. Even without financial loss, reporting contributes to enforcement efforts and threat intelligence.

Organizational Security Frameworks

Employee Training and Awareness: Conduct regular security awareness training covering evolving threats including AI-generated phishing, voice-based scams, and QR code attacks. Move beyond annual compliance exercises to ongoing education with simulated phishing campaigns testing and reinforcing recognition skills. Tailor training intensity to role-based risk, with finance, HR, and executive personnel receiving enhanced focus given their elevated targeting and access levels.

Identity and Access Management: Implement phishing-resistant multi-factor authentication using passkeys, biometrics, or hardware tokens rather than SMS or email codes vulnerable to interception. Apply the principle of least privilege, granting users the minimum access necessary for their roles. Regularly audit permissions, promptly disable dormant accounts, and carefully vet external or remote access requests.
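Passkeys and hardware security keys resist phishing because the browser records the page's origin in the client data that the authenticator signs, and credentials are scoped to the legitimate site, whereas a one-time SMS code can simply be relayed by a fake page. The Python sketch below shows only the server-side origin, challenge, and type checks from WebAuthn assertion verification. It assumes a hypothetical relying party at `https://login.example.com` and deliberately omits signature, RP ID hash, and counter verification, which a real server must perform, typically through a maintained WebAuthn library.

```python
import base64
import json

# Hypothetical relying party; a real deployment uses its own registered origin.
EXPECTED_ORIGIN = "https://login.example.com"


def b64url_decode(segment: str) -> bytes:
    """Decode unpadded base64url, the encoding WebAuthn uses for clientDataJSON."""
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def encode_client_data(data: dict) -> str:
    """Demo helper: build an unpadded base64url clientDataJSON string."""
    return base64.urlsafe_b64encode(json.dumps(data).encode()).rstrip(b"=").decode()


def check_client_data(client_data_b64: str, expected_challenge: str,
                      expected_origin: str = EXPECTED_ORIGIN) -> list[str]:
    """Return problems found in an assertion's client data (empty list = these checks pass).

    Only the type/challenge/origin checks are shown; signature verification is omitted.
    """
    data = json.loads(b64url_decode(client_data_b64))
    problems = []
    if data.get("type") != "webauthn.get":
        problems.append("unexpected ceremony type")
    if data.get("challenge") != expected_challenge:
        problems.append("challenge mismatch (possible replay)")
    if data.get("origin") != expected_origin:
        problems.append(f"origin {data.get('origin')!r} does not match {expected_origin!r}")
    return problems


if __name__ == "__main__":
    # A lookalike site cannot make the browser report the real origin.
    phished = encode_client_data({"type": "webauthn.get", "challenge": "c2FtcGxl",
                                  "origin": "https://login-example.co"})
    print(check_client_data(phished, "c2FtcGxl"))
```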

Email Security Enhancement: Deploy advanced email security solutions using machine learning to detect AI-generated phishing emails, anomalous sender patterns, and malicious attachments. Implement SPF, DKIM, and DMARC so receiving mail servers can detect and reject messages that spoof your organization’s domains. Configure systems to flag or quarantine emails containing QR codes, particularly from external sources, enabling human review before delivery.
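A DMARC policy is published as a DNS TXT record at `_dmarc.<domain>`. The Python sketch below assumes the record text has already been retrieved (the standard library has no TXT-record lookup) and reviews a few settings: whether `p=` actually enforces anything, whether `pct=` applies the policy to all failing mail, and whether aggregate reports (`rua=`) are collected. It is a partial check for illustration, not a complete RFC 7489 validator.

```python
def parse_dmarc(record: str) -> dict[str, str]:
    """Split 'tag=value; tag=value' pairs into a dict with lowercased tag names."""
    tags: dict[str, str] = {}
    for item in record.split(";"):
        if "=" not in item:
            continue  # skip empty or malformed segments
        key, value = item.split("=", 1)
        tags[key.strip().lower()] = value.strip()
    return tags


def review_dmarc(record: str) -> list[str]:
    """Return findings about weak or missing DMARC settings (empty list = none found)."""
    tags = parse_dmarc(record)
    if tags.get("v") != "DMARC1":
        return ["not a DMARC record (missing v=DMARC1)"]
    findings = []
    policy = tags.get("p", "").lower()
    if policy not in {"none", "quarantine", "reject"}:
        findings.append("missing or invalid p= policy")
    elif policy == "none":
        findings.append("p=none only monitors; spoofed mail is still delivered")
    pct = tags.get("pct", "100")  # pct defaults to 100 when absent
    if pct != "100":
        findings.append(f"pct={pct}: policy applies to only part of failing mail")
    if "rua" not in tags:
        findings.append("no rua= address, so aggregate reports are not collected")
    return findings


if __name__ == "__main__":
    print(review_dmarc("v=DMARC1; p=none"))
```

For instance, `review_dmarc("v=DMARC1; p=none")` returns two findings: the monitor-only policy and the missing report address.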

Endpoint Protection: Ensure comprehensive endpoint security across all devices including mobile phones and tablets that often have weaker protections than desktop computers. Use mobile device management systems enforcing security policies, detecting jailbroken or rooted devices, and enabling remote wipe capabilities. Deploy solutions detecting malicious applications and preventing unauthorized installations.

Voice and Video Authentication: Develop protocols for verifying high-risk transactions beyond voice or video calls alone. Require in-person confirmation, callback verification using known numbers, or multi-person authorization for transactions exceeding certain thresholds. Understand that realistic voice and video synthesis renders these authentication methods insufficient in isolation.
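The multi-person rule can be expressed as a release check that no single call, however convincing, can satisfy. The Python sketch below is hypothetical throughout: the $10,000 threshold, field names, and roles are placeholders. Payments at or above the threshold, or to new beneficiaries, need a callback to a number already on file plus two approvers other than the requester, so a deepfaked "executive" who initiates a request cannot also approve it.

```python
from dataclasses import dataclass, field

# Hypothetical policy value; each organization would set its own.
DUAL_CONTROL_THRESHOLD = 10_000


@dataclass
class PaymentRequest:
    amount: float
    requested_by: str
    new_beneficiary: bool
    callback_verified: bool = False  # confirmed by calling a number already on file
    approvals: set[str] = field(default_factory=set)


def approvals_required(req: PaymentRequest) -> int:
    """High-risk payments need two independent approvers; routine ones need one."""
    if req.amount >= DUAL_CONTROL_THRESHOLD or req.new_beneficiary:
        return 2
    return 1


def can_release(req: PaymentRequest) -> tuple[bool, str]:
    """Decide whether a payment may be released under the hypothetical policy."""
    needed = approvals_required(req)
    independent = req.approvals - {req.requested_by}  # requester cannot self-approve
    if needed > 1 and not req.callback_verified:
        return False, "callback verification via a known number is required"
    if len(independent) < needed:
        return False, f"needs {needed} approver(s) besides the requester, has {len(independent)}"
    return True, "ok"


if __name__ == "__main__":
    urgent = PaymentRequest(amount=250_000, requested_by="cfo", new_beneficiary=True,
                            approvals={"cfo"})
    print(can_release(urgent))  # blocked: no callback, no independent approvers
```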

Vendor and Third-Party Management: Assess security postures of vendors and partners accessing organizational systems or data. Require compliance with security standards, conduct regular audits, and maintain updated risk assessments. Many breaches originate through third-party compromise, making supply chain security essential to overall defensive postures.

Incident Response Planning: Develop and regularly test incident response plans addressing various fraud scenarios. Define clear procedures for freezing accounts, contacting law enforcement, preserving evidence, and communicating with affected parties. Response speed directly correlates with damage limitation and recovery prospects.

Technical Countermeasures: Implement caller ID verification systems, deploy anomaly detection monitoring for unusual account activity or transaction patterns, and use blockchain analysis tools for cryptocurrency transactions. Consider voice authentication systems that analyze acoustic fingerprints and behavioral patterns rather than content alone, while recognizing that these remain vulnerable to sophisticated attacks.
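As one simple example of anomaly detection on transaction amounts, the Python sketch below computes a robust, median-based z-score (the Iglewicz-Hoaglin modified z-score) for a new payment against a customer's history and flags values above the commonly used 3.5 cutoff. The sample history and cutoff are illustrative assumptions; production systems combine many signals (device, location, payee, timing) rather than amount alone.

```python
import statistics


def modified_z_score(history: list[float], amount: float) -> float:
    """Robust z-score of `amount` relative to past amounts.

    Uses the median and median absolute deviation (MAD), so a few earlier
    outliers do not mask new ones the way a mean/stdev score can.
    """
    med = statistics.median(history)
    mad = statistics.median([abs(x - med) for x in history])
    if mad == 0:
        # All past amounts identical: any different amount is maximally unusual.
        return 0.0 if amount == med else float("inf")
    return 0.6745 * (amount - med) / mad


def is_anomalous(history: list[float], amount: float, threshold: float = 3.5) -> bool:
    """Flag amounts whose robust z-score magnitude exceeds the threshold."""
    return abs(modified_z_score(history, amount)) > threshold


if __name__ == "__main__":
    past = [42.0, 55.0, 38.5, 61.0, 47.0, 50.0, 44.0, 58.0]  # hypothetical history
    for new_amount in (52.0, 4800.0):
        print(new_amount, is_anomalous(past, new_amount))
```

With a history of routine payments between about $38 and $61, a $52 payment scores well under the cutoff while a sudden $4,800 transfer is flagged.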

SMS and Communication Filtering: Use SMS filtering technology identifying and blocking malicious messages. Validate sources of VAST tags and other advertising technologies before integration, preventing malvertising injection. Monitor AWS SNS and similar mass communication platforms for abuse indicators.

Financial Institution Responsibilities

Banks and financial services providers occupy critical positions in fraud prevention and interdiction. Know Your Customer requirements must extend beyond account opening to ongoing transaction monitoring. Suspicious activity including large cryptocurrency purchases, wire transfers to unfamiliar recipients, or account behavioral changes should trigger customer contact and verification.
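The monitoring triggers named above translate naturally into rules that route a transaction to customer contact before release. The Python sketch below is a hypothetical rule set: the $5,000 cryptocurrency threshold, the 3x spike multiplier, and the `Transfer` fields are placeholders, not regulatory or industry values.

```python
from dataclasses import dataclass

# Hypothetical thresholds for illustration; real values come from risk models.
LARGE_CRYPTO_PURCHASE = 5_000
SPIKE_MULTIPLIER = 3


@dataclass(frozen=True)
class Transfer:
    amount: float
    kind: str                         # e.g. "wire", "ach", "crypto_purchase"
    recipient: str
    known_recipients: frozenset[str]
    typical_max: float                # largest amount this customer usually sends


def review_reasons(t: Transfer) -> list[str]:
    """List the triggers that should prompt customer contact before release."""
    reasons = []
    if t.kind == "crypto_purchase" and t.amount >= LARGE_CRYPTO_PURCHASE:
        reasons.append("large cryptocurrency purchase")
    if t.kind == "wire" and t.recipient not in t.known_recipients:
        reasons.append("wire transfer to an unfamiliar recipient")
    if t.amount > SPIKE_MULTIPLIER * t.typical_max:
        reasons.append("amount far above the customer's usual activity")
    return reasons


if __name__ == "__main__":
    t = Transfer(20_000, "wire", "acct-999", frozenset({"acct-1"}), 2_000)
    print(review_reasons(t))
```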

Immediate account freezing upon fraud indicators prevents further losses while investigations proceed. Requesting supporting documentation for large or unusual transactions provides legitimate customers with protection while creating obstacles for criminals. Contacting transfer recipients to verify transaction legitimacy catches fraud before irreversible completion.

Filing Suspicious Activity Reports enables information sharing across financial institutions and with law enforcement. Voluntary information sharing under Section 314(b) of the USA PATRIOT Act facilitates industry collaboration in identifying interconnected fraud patterns. Customer education about common scams, warning signs, and protective measures extends defensive capabilities beyond institutional controls.

Law Enforcement and Regulatory Actions

Coordinated law enforcement operations targeting fraud infrastructure, money laundering networks, and scam centers produce measurable impacts. Operation Stop Scam Calls, involving over 100 federal and state partners and resulting in more than 180 actions, demonstrates the effectiveness of multi-jurisdictional coordination against illegal telemarketing. Internationally, efforts targeting Southeast Asian scam centers, from Europol cybercrime initiatives to military actions during the 2025 Cambodian-Thai conflict, disrupt operations that often depend on human trafficking and forced labor.

Regulatory actions, including the FTC’s proposed ban on impersonator fraud and enhanced enforcement against illegal telemarketing operations, create deterrent effects and pathways to victim restitution. The FBI’s Virtual Assets Unit, established in 2022, provides specialized capabilities for cryptocurrency crime investigation and asset recovery.

Public awareness campaigns from agencies and organizations including the FTC, FBI, AARP, CISA (Cybersecurity and Infrastructure Security Agency), and the National Cybersecurity Alliance disseminate current threat information, warning signs, and protective guidance. These efforts prove essential given the constant evolution of scam tactics and the challenge of reaching vulnerable populations.

Technology Industry Responsibilities

Platform providers must implement enhanced security measures detecting and removing fraudulent content, fake accounts, and scam advertisements. Social media companies should verify business accounts, flag suspicious patterns, and respond rapidly to fraud reports. Cryptocurrency exchanges need robust Know Your Customer procedures, transaction monitoring, and cooperation with law enforcement investigations.

Artificial intelligence companies developing synthesis capabilities bear responsibility for implementing safeguards against malicious use in deepfake creation and voice cloning. Content provenance systems, watermarking of synthetic media, and cooperation with defensive research efforts help balance innovation against exploitation risks. The Coalition for Content Provenance and Authenticity (C2PA) represents industry collaboration toward standardized frameworks for authenticating content and combating synthetic media fraud.

Telecommunications providers should implement the STIR/SHAKEN caller ID authentication framework so spoofed calls can be identified, and cooperate in blocking robocalls and scam texts. Cloud services must monitor for abuse of mass communication platforms and infrastructure supporting fraud operations.

Search engines and app stores need improved vetting processes preventing fraudulent investment platforms, malicious applications, and scam websites from achieving prominent visibility. User reviews and complaint systems should incorporate fraud detection, with rapid response removing identified threats.

Conclusion: Building Resilience Against an Evolving Threat

The $16.6 billion in FBI-reported losses, combined with an estimated three to nearly six times that amount in unreported fraud if only 15-25% of losses are reported, positions internet scams among the most financially consequential criminal threats facing individuals and organizations globally. The convergence of artificial intelligence, cryptocurrency infrastructure, psychological manipulation, and industrial-scale criminal operations has created an unprecedented challenge requiring comprehensive responses spanning technology, policy, education, and individual vigilance.
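The unreported-loss multiplier follows from the introduction's estimate that only 15-25% of losses are reported. Writing r for the assumed reporting rate and L_rep for the $16.6 billion in reported losses, the total is L_rep / r, so:

```latex
\begin{aligned}
L_{\text{unrep}} &= \frac{L_{\text{rep}}}{r} - L_{\text{rep}} = \frac{1-r}{r}\,L_{\text{rep}} \\
r = 0.25:\quad L_{\text{unrep}} &= 3 \times \$16.6\,\text{B} = \$49.8\,\text{B} \\
r = 0.15:\quad L_{\text{unrep}} &\approx 5.67 \times \$16.6\,\text{B} \approx \$94.1\,\text{B}
\end{aligned}
```

Under those assumptions, total losses would fall between roughly $66 billion and $111 billion.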

The evidence demonstrates conclusively that traditional defensive approaches prove increasingly inadequate. Voice and video authentication can no longer reliably verify identity when synthesis technology achieves indistinguishability from authentic recordings. Visual inspection fails when deepfakes maintain temporal consistency and behavioral coherence. Grammar and spelling errors disappear when AI generates content. Caller ID provides no security when spoofing trivially impersonates trusted numbers.

This evolution demands fundamental shifts in defensive thinking. Infrastructure-level protections including cryptographic content signing, standardized content provenance systems, and multimodal forensic analysis must supplement rather than replace human judgment. Organizations need security awareness training reflecting current threats, with particular emphasis on manufactured urgency, verification protocols independent of communication channels, and understanding that any communication medium can be compromised.

Individual resilience stems from healthy skepticism toward unexpected requests, particularly those involving financial urgency. The family safe word concept provides practical protection against emergency scams leveraging emotional manipulation. Deliberate delays counter urgency tactics that form psychological cores of fraud attempts. Independent verification through separately-initiated contact using known numbers defeats caller ID spoofing and provides time for rational evaluation.

The societal dimension requires acknowledging that anyone can be victimized regardless of intelligence, education, or sophistication. Shame and embarrassment prevent reporting, enabling criminals to operate with impunity while denying law enforcement the information needed for effective intervention. Creating environments where victimization reporting occurs without stigma serves collective security interests.

Financial institution responsibilities extend beyond account services to active fraud interdiction through transaction monitoring, customer education, and rapid response to suspicious activity. Technology platforms must balance innovation accessibility against exploitation prevention through enhanced content verification, fake account detection, and cooperation with law enforcement. Cryptocurrency exchanges need robust compliance and investigation support given the payment method’s centrality to modern fraud.

Law enforcement and regulatory coordination demonstrates measurable impact through operations disrupting scam infrastructure, prosecuting criminal enterprises, and recovering assets. International cooperation targeting Southeast Asian scam centers addresses human trafficking dimensions while dismantling operational capabilities. Enhanced information sharing across jurisdictions and industries enables pattern recognition and network disruption exceeding single-case investigations.

The forward trajectory suggests continued escalation absent comprehensive countermeasures. Real-time synthesis capabilities will render current detection methodologies obsolete. Agentic AI will enable autonomous fraud campaigns adapting to defensive measures in real time. Quantum computing may eventually threaten cryptographic protections currently securing digital communications and financial transactions.

Yet technological advancement simultaneously creates defensive opportunities. AI-powered anomaly detection can identify behavioral patterns humans miss. Blockchain analysis tools enable cryptocurrency tracking previously impossible. Collaborative intelligence sharing platforms aggregate threat data at scale enabling rapid response. Biometric authentication evolves toward multimodal approaches more difficult to spoof comprehensively.

Success requires commitment across multiple domains. Governments must fund law enforcement capabilities, international cooperation frameworks, and public awareness campaigns while balancing innovation encouragement against exploitation prevention. Industry must prioritize security in product design, implement robust verification mechanisms, and cooperate with investigations. Academia contributes through forensic tool development, threat research, and understanding psychological vulnerabilities. Civil society organizations provide victim support, education, and advocacy.

Individuals must recognize that protection starts with awareness. Understanding current threats, the psychological tactics behind them, and effective countermeasures turns abstract warnings into actionable knowledge. Sharing scam experiences, even when no loss occurred, educates others and strengthens collective resistance. Reporting to the appropriate authorities enables investigation and warns others facing similar threats.

The $16.6 billion question isn’t whether internet scams will continue, but rather whether defensive capabilities will evolve rapidly enough to prevent exponential growth in financial and emotional devastation. The answer depends on coordinated action across technology development, policy creation, education expansion, and individual commitment to vigilance. The tools, knowledge, and strategies exist to substantially reduce vulnerability. Implementation requires prioritizing fraud prevention alongside other security imperatives.

In 2026 and beyond, resilience against internet scams starts with recognizing that perfect security is unattainable but that significant harm reduction is achievable through layered defenses, rapid adaptation, and a collective commitment to protecting the digital ecosystem that increasingly defines modern life. The difference between victimization and protection often comes down to pausing before acting and verifying before trusting. If something seems too good to be true, or creates urgent pressure to act without verification, it almost certainly represents fraud.

The fight against internet scams will never end, but substantial progress remains possible through sustained effort, resource commitment, and recognition that protecting digital infrastructure and trust serves the fundamental interests of functioning economies and societies. The $16.6 billion documented in FBI reports represents not just financial loss but damaged trust, compromised security, and human suffering that extends far beyond any monetary calculation. Preventing that harm justifies comprehensive action across all sectors of society.

Frequently Asked Questions: Internet Scams 2026

How much money do Americans lose to internet scams annually?

The FBI’s Internet Crime Complaint Center reports $16.6 billion in losses for 2024, representing a 33% increase from 2023 and the highest annual total in the agency’s 25-year history. However, this figure likely represents only 15-25% of actual losses, as many victims don’t report due to shame, embarrassment, or lack of awareness. The Federal Trade Commission documented $12.5 billion in reported fraud losses for 2024, with global losses estimated at $1.03 trillion by the Global Anti-Scam Alliance.
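Taken at face value, the 15-25% reporting estimate implies a range for actual U.S. losses. A minimal worked calculation, assuming the estimated reporting share applies uniformly to the $16.6 billion IC3 total (a simplification, since reporting rates likely differ by scam type and victim group):

```python
def implied_total_losses(reported_billions: float, reporting_share: float) -> float:
    """Scale a reported loss figure by the fraction of losses assumed to be reported."""
    if not 0 < reporting_share <= 1:
        raise ValueError("reporting_share must be in (0, 1]")
    return reported_billions / reporting_share

IC3_2024_REPORTED = 16.6  # FBI IC3 reported losses for 2024, billions of USD

# If 25% of losses are reported, the implied total is smaller; if only 15% are, it is larger.
low = implied_total_losses(IC3_2024_REPORTED, 0.25)
high = implied_total_losses(IC3_2024_REPORTED, 0.15)
print(f"Implied actual losses: ${low:.1f}B to ${high:.1f}B")
```

Under that assumption, actual 2024 losses would fall roughly between $66 billion and $111 billion.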

What are the most common types of internet scams in 2026?

Investment fraud dominates with $6.5 billion in FBI-reported losses, with cryptocurrency-related scams accounting for approximately 90% of that total. Imposter scams generated $2.95 billion in FTC-reported losses, encompassing government impersonation, business impersonation, and family emergency schemes. Business email compromise accumulated $2.7 billion in losses, while tech support scams reached $1.4 billion. Romance and pig butchering scams continue growing rapidly, extracting at least $75.3 billion globally since 2020.

How can I tell if a voice message or call is using AI voice cloning?

Current AI voice cloning technology has reached what researchers call the “indistinguishability threshold,” making detection by listening alone virtually impossible for non-experts. Rather than trying to identify synthetic voices, rely on verification protocols: hang up and call back on a number you already know, ask for a family safe word agreed in advance, and never make financial decisions based solely on a voice call. Any unexpected request for money, however authentic the caller sounds, should trigger independent verification through contact that you initiate separately.
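The callback-and-safe-word protocol can be written down as a small decision function. This is a hypothetical sketch: `trusted_contacts` and `safe_words` stand for a contact list and safe words a family records in advance, and caller ID is deliberately not an input because it can be spoofed.

```python
from typing import Dict, Optional

def respond_to_money_request(
    claimed_identity: str,
    safe_word_offered: Optional[str],
    trusted_contacts: Dict[str, str],
    safe_words: Dict[str, str],
) -> str:
    """Decide what to do when a caller who sounds like someone you know asks for money.

    Caller ID and how the voice sounds are intentionally ignored: both can be faked.
    """
    expected = safe_words.get(claimed_identity)
    if expected is not None and safe_word_offered != expected:
        return "hang up: safe word missing or wrong"
    number = trusted_contacts.get(claimed_identity)
    if number is None:
        return "do not pay: no independently known number to verify with"
    # A correct safe word is a useful signal, but it never replaces a callback
    # that you initiate yourself on a number you already had.
    return f"hang up and call back on {number} before any payment"

# Hypothetical data recorded ahead of time (555-01xx numbers are reserved for fiction).
CONTACTS = {"grandson": "+1-555-0100"}
SAFE_WORDS = {"grandson": "blue heron"}

print(respond_to_money_request("grandson", None, CONTACTS, SAFE_WORDS))
```

The design choice worth noting is that every money request ends in a callback or a refusal; no combination of inputs lets the original call alone authorize a payment.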

Are QR code scams really a significant threat?

Yes. QR code phishing (quishing) increased 587% year over year, with 26% of all malicious links now embedded in QR codes, according to KeepNet Labs research. NordVPN estimates that 73% of Americans scan QR codes without verifying them, and that over 26 million people have been directed to malicious sites. The energy sector receives 29% of malicious phishing QR codes, while retail, manufacturing, insurance, technology, and financial services also face elevated targeting. Only 36% of quishing incidents are accurately detected and reported, leaving many organizations vulnerable.
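One practical countermeasure is to inspect the URL a QR code decodes to before opening it. A minimal sketch, assuming the phone’s scanner has already decoded the code to text; the `TRUSTED_DOMAINS` entries are placeholders, and these checks catch only a few common disguises, not every malicious link.

```python
import ipaddress
from typing import List
from urllib.parse import urlparse

# Hypothetical allowlist: domains the user or organization already trusts.
TRUSTED_DOMAINS = {"example-bank.com", "parking.example-city.gov"}

def check_qr_url(decoded: str) -> List[str]:
    """Return reasons not to open a URL decoded from a QR code (empty list = no flags)."""
    problems = []
    parsed = urlparse(decoded.strip())
    if parsed.scheme != "https":
        problems.append(f"scheme is {parsed.scheme or 'missing'}, not https")
    host = (parsed.hostname or "").lower()
    if not host:
        problems.append("no hostname")
        return problems
    try:
        ipaddress.ip_address(host)
        problems.append("host is a raw IP address")
    except ValueError:
        pass  # a normal domain name, not an IP literal
    if any(label.startswith("xn--") for label in host.split(".")):
        problems.append("host uses punycode (possible look-alike characters)")
    # Accept the trusted domain itself or its subdomains; "example-bank.com.evil.example" fails.
    if not any(host == d or host.endswith("." + d) for d in TRUSTED_DOMAINS):
        problems.append(f"{host} is not on the trusted list")
    # In "https://example-bank.com@evil.example/" the real host is evil.example.
    if parsed.username or parsed.password:
        problems.append("URL embeds credentials, a common disguise trick")
    return problems

for url in ["https://pay.example-bank.com/invoice", "https://example-bank.com@evil.example/"]:
    print(url, check_qr_url(url))
```

An allowlist is used rather than a blocklist because attackers can register new domains faster than any blocklist can track them.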

What is pig butchering and how does it work?

Pig butchering (杀猪盘) combines romance scams with investment fraud, building a relationship over an extended period before exploiting the victim financially. Scammers initiate contact via dating apps, social media, or messaging platforms, then spend weeks or months cultivating trust through casual conversation and emotional connection. They eventually introduce cryptocurrency investment opportunities, guiding victims to fake platforms that display fabricated gains. Small initial investments and occasional permitted withdrawals establish credibility before the pressure for larger amounts begins. The scam ends when the scammers disappear with the funds or demand additional payments for fabricated fees, frequently leaving victims in complete financial ruin.

How can deepfake videos be used in scams?

Deepfakes enable multiple scam types, including synthesized celebrity endorsements for fraudulent investments, fabricated executive videos authorizing wire transfers in business email compromise, realistic personas that let romance scammers pass video calls undetected, and manufactured emergency scenarios featuring family members. The number of deepfake files has surged from 500,000 in 2023 to 8 million in 2025, and quality has improved dramatically, achieving temporal consistency and behavioral coherence that defeat non-expert detection. As human judgment becomes insufficient, infrastructure-level protections such as cryptographic content signing and multimodal forensic analysis provide the only reliable authentication.

What should I do if I’ve been scammed?

Act immediately to minimize damage. Contact your bank or financial institution to freeze accounts, cancel cards, and reverse transactions where possible. File reports with the FTC at ReportFraud.ftc.gov, the FBI’s IC3 at ic3.gov, and local law enforcement. For cryptocurrency fraud, also contact the Secret Service at cryptofraud@usss.dhs.gov. Place fraud alerts with Equifax, Experian, and TransUnion. Update passwords on compromised accounts. Document everything, including screenshots, emails, text messages, phone numbers, and transaction records. If malware was installed, professional device cleaning may be necessary. Don’t feel embarrassed: reporting helps investigators and protects others.

Can I get my money back if I’m scammed?

Recovery prospects vary significantly with payment method, timing, and jurisdiction. Cryptocurrency and wire transfers are nearly impossible to recover once completed, because they are irreversible and often involve cross-border complications. Credit card charges may be reversible through chargebacks if reported quickly. Bank transfers might be recoverable if flagged before processing completes. Gift card purchases are generally unrecoverable once the codes have been shared. Speed is critical: reporting within 24 hours significantly improves recovery prospects. Realistically, however, most victims do not recover their losses, which makes prevention vastly superior to attempted recovery.

How do criminals obtain voice samples for voice cloning?

Social media posts containing video or audio, voicemail greetings, public speaking engagements, recorded webinars, virtual meetings, phone conversations, and even brief responses to scam calls can provide sufficient audio. Just three seconds of speech enables 85% voice match quality, with longer samples achieving near-perfect replication. Criminals mine publicly available content systematically, requiring minimal data to create convincing clones complete with natural intonation, rhythm, emotion, pauses, and breathing patterns.

Are older adults more vulnerable to internet scams?

Data shows mixed vulnerability patterns. Adults aged 60 and older suffered nearly $5 billion in FBI-reported losses and submitted the greatest number of complaints. FTC data shows that the number of older adults reporting losses above $10,000 has quadrupled since 2020, while combined losses among those who lost $100,000 or more rose eight-fold, from $55 million to $445 million. However, younger demographics are vulnerable to different scam types, with social media and romance scams affecting a broader age range. Overall, anyone can be victimized, but older adults tend to experience larger individual losses, particularly in impersonation and investment scams.
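A quick check of the eight-fold figure, using only the two FTC totals quoted above ($55 million and $445 million):

```python
# Combined losses among older adults who each lost $100,000 or more (FTC figures, millions of USD)
losses_2020 = 55
losses_later = 445

growth_factor = losses_later / losses_2020
print(f"Growth factor: {growth_factor:.2f}x")  # 445 / 55 is about 8.09, i.e. roughly eight-fold
```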

What are the warning signs that I’m being targeted by a scam?

Common warning signs include: manufactured urgency demanding immediate action; requests for payment via gift cards, cryptocurrency, or wire transfers; reluctance to meet in person or join a video call; pressure to keep communications secret from family or advisors; investment returns or opportunities that seem too good to be true; unsolicited contact from a stranger that rapidly develops into friendship or romance; requests for personal information through unexpected channels; poor grammar and spelling in messages from supposedly legitimate organizations; caller ID showing unexpected or spoofed numbers; and emotional manipulation designed to create fear, excitement, or guilt. Any of these can indicate a scam.

How effective is multi-factor authentication against scams?

Multi-factor authentication significantly enhances security but isn’t foolproof. Phishing-resistant MFA using passkeys, biometrics, or hardware tokens provides robust protection. SMS-based codes remain vulnerable to SIM swapping and interception. Email codes are exposed if the email account itself is breached. MFA prevents unauthorized account access even when passwords are stolen, but social engineering can manipulate victims into handing authentication codes directly to scammers. MFA should be combined with other protective measures rather than relied upon exclusively.
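To see why social engineering defeats code-based MFA, it helps to look at how a typical authenticator-app code is computed. The sketch below follows the standard time-based one-time password algorithm (TOTP, RFC 6238) with its common default parameters (HMAC-SHA1, 30-second steps, 6 digits); the `verify` helper and the demo secret are illustrative. Nothing in the calculation ties a code to the person or website submitting it, so a code read aloud to a scammer is as valid as one typed by the account owner. Passkeys and hardware security keys avoid this weakness because their signatures are bound to the genuine site’s origin.

```python
import base64
import hashlib
import hmac
import struct

def totp(secret_b32: str, unix_time: float, step: int = 30, digits: int = 6) -> str:
    """Compute a TOTP code (RFC 6238) from a base32 shared secret and a Unix timestamp."""
    key = base64.b32decode(secret_b32, casefold=True)
    counter = int(unix_time // step)  # index of the current 30-second window
    digest = hmac.new(key, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F        # dynamic truncation from RFC 4226 (HOTP)
    value = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(value % 10 ** digits).zfill(digits)

def verify(secret_b32: str, submitted: str, unix_time: float) -> bool:
    """Accept a code for the current window only; it cannot tell WHO typed the code."""
    return hmac.compare_digest(totp(secret_b32, unix_time), submitted)

# RFC 6238 test secret: the ASCII bytes "12345678901234567890", base32-encoded.
DEMO_SECRET = base64.b32encode(b"12345678901234567890").decode()

print(totp(DEMO_SECRET, 59, digits=8))  # RFC 6238 SHA-1 test vector: 94287082

# A victim reads the current code to a fake "bank employee"; the scammer submits it
# ten seconds later, still inside the same 30-second window, and it verifies.
now = 1_000_000_020
code_read_aloud = totp(DEMO_SECRET, now)
print(verify(DEMO_SECRET, code_read_aloud, now + 10))  # True
```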

Do cryptocurrency scams only affect cryptocurrency investors?

No. While cryptocurrency investment fraud is the largest category, criminals increasingly demand cryptocurrency payment in many other scam types, including ransomware, romance scams, sextortion, tech support fraud, and government impersonation. Cryptocurrency’s appeal to criminals stems from transaction irreversibility, pseudonymity, and cross-border reach, not from victims being crypto investors. Non-investors are instructed to buy cryptocurrency through exchanges or ATMs specifically to make the payment, which turns cryptocurrency into a payment mechanism for diverse fraud types rather than only investment scams.

How can businesses protect against business email compromise enhanced with deepfakes?

Implement multi-person authorization for transactions above set thresholds; require callback verification using independently sourced phone numbers for unusual requests; establish out-of-band confirmation protocols for wire transfers; deploy advanced email security with AI-generated content detection; train employees regularly on BEC tactics, including deepfake elements; maintain strict separation of duties so that no single person can authorize a transaction; and develop clear escalation procedures for suspicious requests. Because realistic voice and video synthesis makes it unsafe for any one person to authenticate a request by sight or sound, protocols should be built around redundant verification.
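Several of these controls can be expressed as a single release check for outgoing payments. A minimal sketch under stated assumptions: the $10,000 `DUAL_APPROVAL_THRESHOLD` is a hypothetical placeholder, and `callback_confirmed` is assumed to mean a call placed to a number from the company’s own vendor records, not one supplied in the request. Notably, no input records how convincing the requesting email, call, or video was, since deepfakes make that signal unreliable.

```python
from dataclasses import dataclass, field
from typing import Set, Tuple

DUAL_APPROVAL_THRESHOLD = 10_000  # hypothetical threshold in USD; set per organization

@dataclass
class PaymentRequest:
    requester: str
    amount: float
    payee_details_changed: bool        # new or altered bank details are a classic BEC sign
    callback_confirmed: bool = False   # verified by calling a number from internal records
    approvers: Set[str] = field(default_factory=set)

def can_release(req: PaymentRequest) -> Tuple[bool, str]:
    """Return (allowed, reason) for releasing a payment under this sketched policy."""
    # Separation of duties: the requester's own approval never counts.
    independent = req.approvers - {req.requester}
    if not independent:
        return False, "needs an approver other than the requester"
    if req.amount >= DUAL_APPROVAL_THRESHOLD and len(independent) < 2:
        return False, "above threshold: needs two independent approvers"
    if req.payee_details_changed and not req.callback_confirmed:
        return False, "payee details changed: confirm by out-of-band callback"
    return True, "release"

# An "urgent" request, perhaps prompted by a deepfaked executive call, with new bank details.
urgent = PaymentRequest("cfo.assistant", 250_000, payee_details_changed=True,
                        approvers={"cfo.assistant", "controller"})
print(can_release(urgent))
```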

What role do human trafficking victims play in scam operations?

According to UN reports, an estimated 220,000 people are being forced to carry out scams in Cambodia and Myanmar alone. Criminal groups lure victims to Southeast Asia with false job offers, then hold them captive and force them to run scams under threat of physical violence. These trafficked workers operate pig butchering schemes, romance scams, and investment fraud, while their families face ransom demands payable in cryptocurrency. The scale of forced labor behind scam infrastructure adds ethical complexity to anti-fraud efforts, since many perpetrators are themselves victims of human rights abuses.

How are scammers using AI to create more convincing attacks?

Large language models generate phishing emails free of the grammatical errors that once signaled fraud. AI can produce 1,000 convincing emails in under two hours for approximately $10, contributing to a 1,265% increase in phishing attacks. Voice cloning produces convincing replicas from as little as three seconds of audio, with longer samples approaching indistinguishability from authentic recordings. Deepfake video synthesis creates realistic personas for romance scams, fabricated celebrity endorsements, and business impersonation. AI also enables hyper-personalization at scale, crafting messages that use specific details about individual recipients, which makes scams far more convincing than generic approaches.

What is the relationship between data breaches and targeted scams?

Major 2024 data breaches at retailers and other brands exposed emails, passwords, birth dates, addresses, phone numbers, and employment information that criminals use in spear phishing campaigns. Referencing specific personal details lends credibility, making fraudulent communications appear legitimate. Victims recognize accurate information about themselves, which lowers their skepticism and increases compliance with scam requests. Aggregating data across multiple breaches enables comprehensive profiling that supports highly personalized social engineering. Monitoring for compromised credentials, changing passwords after breach notifications, and staying skeptical of unexpected communications help mitigate these threats.

Are recovery scams common after initial victimization?