Alibaba’s Qwen3 is putting real pressure on the global AI landscape. Released on April 29, 2025, this open family of hybrid reasoning models spans 0.6 billion to 235 billion parameters, supports 119 languages, and posts benchmark results that compete directly with models from OpenAI and Google.

1. What Is Qwen3?

Qwen3 is a next-generation AI model family developed by Alibaba, engineered to balance high-speed execution with advanced reasoning capabilities.

Key traits:

- Model sizes: 0.6B to 235B parameters

- Languages supported: 119 languages

- Training tokens: ~36 trillion tokens

- Architecture: Hybrid reasoning with optional “thinking budget”

- Availability: Most models are open source via Hugging Face & GitHub

Qwen3 models are designed to execute tasks in two reasoning modes:

- Fast Thinking: Optimized for low-latency tasks

- Deep Thinking: Ideal for logic-heavy operations, customizable via “thinking budget” settings
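
As a rough illustration of choosing a mode per request, the sketch below appends the `/think` or `/no_think` soft-switch tags that the Qwen3 model cards describe for chat prompts. The helper name `with_mode` and the single-turn message layout are assumptions made for this example, not part of any Qwen API.

```python
def with_mode(user_text: str, deep: bool) -> list[dict]:
    """Build a one-turn chat message list ending in a Qwen3 soft-switch tag.

    Assumption: the serving stack honors the `/think` and `/no_think`
    tags inside the user turn, as described in the Qwen3 model cards.
    """
    tag = "/think" if deep else "/no_think"
    return [{"role": "user", "content": f"{user_text.strip()} {tag}"}]


# Low-latency lookup: skip the reasoning phase.
fast = with_mode("What is the capital of France?", deep=False)
# Logic-heavy task: let the model reason before answering.
slow = with_mode("Prove that sqrt(2) is irrational.", deep=True)

print(fast[0]["content"])
print(slow[0]["content"])
```

With Hugging Face Transformers, the model cards also describe an `enable_thinking` argument to `tokenizer.apply_chat_template`, which sets the default mode for a whole conversation instead of tagging individual turns.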

Some variants leverage a Mixture-of-Experts (MoE) approach for optimized performance-to-compute ratios.

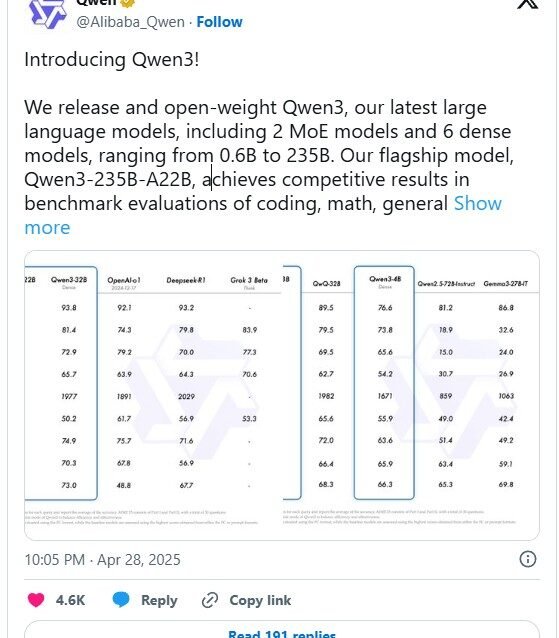

2. Performance Highlights

2.1 Qwen3-235B-A22B

- Flagship Mixture-of-Experts model: 235B total parameters, about 22B active per token, with open weights on Hugging Face

- Reported to outperform Google Gemini 2.5 Pro and OpenAI o3-mini on several logic and code-intensive benchmarks

- Top-tier results in AIME (competition mathematics), BFCL (function calling), and Codeforces programming tasks

2.2 Qwen3-32B

- Largest dense (non-MoE) model in the family

- Beats OpenAI o1 and DeepSeek R1 in benchmarks like LiveCodeBench

- Strong capabilities in tool calling, instruction following, and reproducing structured data formats

Benchmarks Where Qwen3 Shines:

| Benchmark | Qwen3 Result | Top Competitor Defeated |
|---|---|---|
| LiveCodeBench | #1 (Qwen3-32B) | OpenAI o1 |
| AIME | #1 (235B only) | Gemini 2.5 Pro |
| BFCL | #1 (235B only) | Google DeepMind Models |
| Tool Calling | Excellent | Comparable to GPT-4-Turbo |

3. Technical Architecture

Hybrid reasoning control: Users can set how much “thinking budget” Qwen3 should allocate per task.
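
To make the idea of a thinking budget concrete, here is a minimal sketch of one way a serving layer might cap a reasoning trace: keep at most a fixed number of reasoning tokens, then close the thinking block so the model moves on to its answer. Qwen3 wraps its reasoning in `<think>` tags, but the function `apply_thinking_budget` and the pre-tokenized input are assumptions for illustration, not Alibaba's actual mechanism.

```python
def apply_thinking_budget(reasoning_tokens, budget, close_tag="</think>"):
    """Cap a reasoning trace at `budget` tokens and close the thinking block.

    Returns the capped token list and a flag saying whether it was cut short.
    """
    if budget < 0:
        raise ValueError("budget must be non-negative")
    kept = list(reasoning_tokens[:budget])
    was_truncated = len(reasoning_tokens) > budget
    return kept + [close_tag], was_truncated


# Hypothetical reasoning trace, already split into tokens.
trace = ["First,", "factor", "the", "number,", "then", "check", "each", "prime."]
capped, cut = apply_thinking_budget(trace, budget=3)
print(capped, cut)
```

A larger budget trades latency and cost for more room to reason; a budget of zero behaves like the fast mode.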

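Mixture of Experts (MoE): A router sends each token to a small subset of expert sub-networks instead of through every parameter, so only a fraction of the model is active at a time. In Qwen3-235B-A22B, for example, about 22B of the 235B parameters are active per token, which improves speed and efficiency.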
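
The routing idea can be sketched in a few lines of plain Python. This is a generic top-k gating illustration, not Qwen3's actual implementation: the toy experts, the router scores, and the function names `route_top_k` and `moe_forward` are all made up for the example.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(router_logits, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    probs = softmax(router_logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)  # renormalize over the chosen experts
    return [(i, probs[i] / norm) for i in top]


def moe_forward(x, experts, router_logits, k=2):
    """Run only the selected experts and mix their outputs by router weight."""
    return sum(w * experts[i](x) for i, w in route_top_k(router_logits, k))


# Four toy "experts": each one is just a scalar function here.
experts = [lambda x: x + 1, lambda x: 2 * x, lambda x: x * x, lambda x: -x]
logits = [0.1, 2.0, 2.0, -1.0]  # hypothetical router scores for one token

selected = route_top_k(logits, k=2)
output = moe_forward(3.0, experts, logits, k=2)
print(selected, output)
```

Only two of the four experts run for this token, which is the source of the compute savings: total parameter count grows with the number of experts, while per-token cost grows only with `k`.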

Training Data Sources:

- Textbooks

- QA pairs

- Synthetic AI-generated data

- Public code repositories

- Multi-turn dialogue corpora

Multilingual Reach: Robust support for 119 languages makes Qwen3 globally deployable.

4. Strategic Implications

Alibaba’s move with Qwen3 signals:

- A challenge to U.S. AI dominance

- Innovation under export control pressure: Despite U.S. chip export bans, Alibaba demonstrates top-tier capability

- Global democratization of AI through open model release

Quote from Baseten CEO, Tuhin Srivastava:

“Qwen3 proves that China remains a powerhouse in AI, even under economic restrictions.”

5. Where to Access Qwen3

| Model | Publicly Available? | Platform |
|---|---|---|
| Qwen3-32B | ✅ | Hugging Face, GitHub |
| Qwen3-235B-A22B | ✅ | Hugging Face, GitHub |

Cloud Providers Integrating Qwen3:

- Fireworks AI

- Hyperbolic AI

- Alibaba Cloud (soon)
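
Many hosted providers expose a chat-completions style HTTP API, so a request usually comes down to a small JSON body. The sketch below only builds that body and does not send it. The schema is assumed to follow the widely used OpenAI-style layout, and the model identifier is a placeholder; check your provider's documentation for the real model ID and endpoint.

```python
import json


def build_chat_request(model: str, prompt: str, max_tokens: int = 512) -> str:
    """Serialize a chat-completions style request body as JSON.

    Assumption: the provider accepts the common OpenAI-style fields
    `model`, `messages`, and `max_tokens`.
    """
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return json.dumps(body, ensure_ascii=False)


# "example-provider/qwen3-32b" is a placeholder, not a real model ID.
payload = build_chat_request(
    "example-provider/qwen3-32b",
    "Summarize Mixture of Experts in one sentence.",
)
print(payload)
```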

6. Comparison: Qwen3 vs OpenAI vs Google AI

| Feature | Qwen3-32B | OpenAI o3-mini | Google Gemini 2.5 Pro |
|---|---|---|---|
| Parameters | 32B | Undisclosed | Undisclosed |
| Tool Use | Advanced | Moderate | Strong |
| Code Generation | Excellent (LiveCodeBench) | Good | Good |
| Reasoning Depth | Customizable | Preset effort levels (low/medium/high) | Static |
| Languages Supported | 119 | 50+ | 100+ |

7. What Makes Qwen3 Stand Out?

- User-Controlled Reasoning Mode

- Open-source Deployment (from 0.6B up to the 235B flagship)

- Multilingual fluency across 119 languages

- Reliable reproduction of structured formats (e.g., JSON, CSV, Markdown)

- High compatibility with tool and agent frameworks
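
Structured output matters most when another program consumes it. As a hedged example, the sketch below validates a JSON tool call before an agent framework would act on it. The `{"name": ..., "arguments": {...}}` shape, the `get_weather` tool, and the helper `parse_tool_call` are illustrative assumptions, not a format defined by Qwen3.

```python
import json


def parse_tool_call(raw: str, allowed_tools: set[str]) -> dict:
    """Parse and validate a JSON tool call emitted by a model.

    Assumption: the model was prompted to reply with an object of the
    form {"name": ..., "arguments": {...}}.
    """
    call = json.loads(raw)
    if not isinstance(call, dict):
        raise ValueError("tool call must be a JSON object")
    name = call.get("name")
    args = call.get("arguments")
    if name not in allowed_tools:
        raise ValueError(f"unknown tool: {name!r}")
    if not isinstance(args, dict):
        raise ValueError("arguments must be a JSON object")
    return {"name": name, "arguments": args}


# Hypothetical model reply requesting a weather lookup.
raw_reply = '{"name": "get_weather", "arguments": {"city": "Hangzhou"}}'
call = parse_tool_call(raw_reply, allowed_tools={"get_weather"})
print(call["name"], call["arguments"]["city"])
```

Rejecting unknown tool names and malformed arguments up front keeps a bad generation from turning into a bad action.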

8. Limitations

- Largest model (235B) is expensive to self-host, even with open weights

- Lacks fine-tuned models for domain-specific applications

- Needs more evaluation in long-context summarization and multimodal tasks

9. Future Outlook

Alibaba’s roadmap includes:

- Expanding model releases to the public (incl. Qwen-3-72B)

- Integrations into OSS agent frameworks and multimodal platforms

- More multilingual fine-tunes for legal, medical, and financial verticals

10. Final Verdict

Qwen3 marks a pivotal moment in the global AI race. It’s fast, customizable, and strikingly open: the weights are public up to the 235B flagship, and even the 32B model rivals industry giants.

For developers, researchers, and enterprises, Qwen3 offers a real alternative to closed LLM ecosystems, and possibly a glimpse into the future of Chinese-led open innovation.

FAQ – Qwen3

Q1: What is Qwen3?

A family of hybrid AI models by Alibaba, ranging from 0.6B to 235B parameters, optimized for reasoning and execution.

Q2: Is Qwen3 free to use?

Yes, the Qwen3 models, including Qwen3-32B, are available as open weights on GitHub and Hugging Face.

Q3: Is Qwen3 better than OpenAI’s GPT-4?

Qwen3-235B-A22B has benchmarked higher in some areas, and Qwen3-32B rivals GPT-4-Turbo in certain tasks.

Q4: Does Qwen3 support many languages?

Yes, Qwen3 supports 119 languages.

Q5: How do I run Qwen3 models?

Download from Hugging Face or GitHub, or access via Fireworks AI and other integrated platforms.